Summary

In this investigation, three solutions were analyzed: MySQL with MHA (Master High Availability Manager for MySQL), MySQL with Galera Replication (synchronous data replication cross-node), and AWS RDS-Aurora (data solution from Amazon promising HA and read scalability).

These three platforms were evaluated for high availability (HA; how fast the service would recover in case of a crash) and performance in managing both incoming writes and concurrent read and write traffic.

These were the primary items evaluated, followed by an investigation into how to implement a multi-region HA solution, as requested by the customer. This evaluation will be used to assist the customer in selecting the most suitable HA solution for their applications.

HA tests

MySQL + Galera was proven to be the most efficient solution; in the presence of read and write load, it performed a full failover in 15 seconds compared to 50 seconds for AWS-Aurora, and to more than two minutes for MySQL with MHA.

Performance

Tests indicated that MySQL with MHA is the most efficient platform. With this solution, it is possible to manage read/write operations almost twice as fast as, and more efficiently than, MySQL with Galera, which places second. Aurora consistently places last.

Recommendations

In light of the above tests, the recommendations consider different factors to answer the question, "Which is the best tool for the job?" If HA and very low failover time are the major factors, MySQL with Galera is the right choice. If the focus is on performance and the business can afford several minutes of down time, then the choice is MySQL with MHA.

Finally, Aurora is recommended when there is a need for an environment with limited concurrent writes, the need to have significant scale in reads, and the need to scale (in/out) to cover bursts of read requests such as in a high-selling season.


Why This Investigation?

The outcomes presented in this document are the result of an investigation undertaken in September 2015. The research and tests were conducted in answer to a growing number of requests for clarification and recommendations around Aurora and EC2. The main objective was to focus on real numbers and scenarios seen in production environments.

Everything possible was done to execute the tests in a consistent way across the different platforms.

Errors were pruned by executing each test on each platform before collecting the data. This preliminary run also served to identify the saturation limit, which was different for each tested architecture. During the real test execution, I repeated each test several times to identify and reduce any possible deviation.

Things to Answer:

In the investigation, I was looking to answer the following questions for each platform:

- HA

  - Time to failover

  - Service interruption time

  - Lag in execution at saturation level

- Ingest & Compliance test

  - Execution time

  - Inserts/sec

  - Selects/sec

  - Deletes/sec

  - Handlers/sec

  - Rows inserted/sec

  - Rows select/sec (compliance only)

  - Rows delete/sec (compliance only)

Brief description about MHA, Galera, Aurora

MHA

MHA is a solution that sits on top of the MySQL nodes, checking the status of each of the nodes, and using custom scripts to manage the failover. An important thing to keep in mind is that MHA is not acting as the “man in the middle”, and as such, no connection is sent to the MHA controller. The MHA controller instead could manage the entry point with a VIP (Virtual IP), with HAProxy settings, or with whatever makes sense in the design architecture.

At the MySQL level, the MHA controller will recognize the failing master and will elect the most up-to-date one as the new master. It will also try to re-align the gap between the failed master and the new one using original binary logs if available and accessible.
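The "most up-to-date" rule can be illustrated with a minimal Python sketch. This is not MHA's actual code: the `ReplicaStatus` class, the host names, and the positions are hypothetical, and the two fields simply mirror the `Master_Log_File` and `Read_Master_Log_Pos` columns of `SHOW SLAVE STATUS`. The assumption is that the replica that has read furthest into the master's binary log is the best candidate.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ReplicaStatus:
    """Hypothetical snapshot of how far a replica has read the master's binlog."""
    host: str
    master_log_file: str      # e.g. "mysql-bin.000012"
    read_master_log_pos: int  # byte offset inside that file


def binlog_sequence(log_file: str) -> int:
    """Extract the numeric suffix of a binlog file name ("mysql-bin.000012" -> 12)."""
    return int(log_file.rsplit(".", 1)[1])


def most_up_to_date(replicas: Sequence[ReplicaStatus]) -> ReplicaStatus:
    """Return the replica that has received the most binary log events."""
    if not replicas:
        raise ValueError("no candidate replicas available")
    # Compare by binlog file sequence first, then by position inside the file.
    return max(
        replicas,
        key=lambda r: (binlog_sequence(r.master_log_file), r.read_master_log_pos),
    )


# Illustrative values only, not taken from the tests.
candidates = [
    ReplicaStatus("replica-a", "mysql-bin.000012", 4500),
    ReplicaStatus("replica-b", "mysql-bin.000012", 9800),
    ReplicaStatus("replica-c", "mysql-bin.000011", 99000),
]
print(most_up_to_date(candidates).host)  # replica-b
```

Note that a higher position in an older binlog file (replica-c) does not win over a lower position in a newer file.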

Scalability is provided via standard MySQL design, having one master in read/write and several servers in read mode. Replication is asynchronous replication, and therefore it could easily lag, leaving the read nodes quite far behind the master.

MySQL with Galera Replication

MySQL + Galera works on the concept of a cluster of nodes sharing the same dataset.

What this means is that MySQL+Galera is a cluster of MySQL instances, normally three or five, that share the same dataset, and where data is synchronously distributed between nodes.

The focus is on the data, not on the nodes, given that each node shares the same data and status. Transactions are distributed across all active nodes that represent the primary component. Nodes can leave and re-join the cluster, modifying the conceptual view that expresses the primary component (for more details on Galera, review my presentation).

What is of note here is that each node has the same data at the end of a transaction; given that, the application can connect to any node and read/write data in a consistent way.

As previously mentioned replication is (virtually) synchronous, data is locally validated and certified, and conflicts are managed to keep data internally consistent. Failover is more of an external need than a Galera one, meaning that the application can be set to connect to one node only or on all the available nodes, and if one of the nodes is not available the application should be able to utilize the others.

Given that not all applications have this function out of the box, it is common practice to add another layer with HAProxy to manage the application connection, and have HAProxy either distribute the connections across nodes or use a single node as the main point of reference and then shift to the others if needed. The shift in this case is limited to moving the connection point from Node A to Node B.
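The "single main node, then shift to the others" behaviour can be sketched in a few lines of Python. This is only an illustration of the idea, not how HAProxy is implemented: `pick_node` and the stand-in health check are hypothetical, and the node names are borrowed from the HAProxy settings used later in the tests.

```python
from typing import Callable, Optional, Sequence


def pick_node(nodes: Sequence[str], is_healthy: Callable[[str], bool]) -> Optional[str]:
    """Return the first node, in priority order, that passes the health check."""
    for node in nodes:
        if is_healthy(node):
            return node
    return None  # no node is available


# Priority order: main node first, then the backups.
nodes = ["node1", "node2Bk", "node3Bk"]

# Stand-in health check: pretend node1 has just crashed.
down = {"node1"}
active = pick_node(nodes, lambda n: n not in down)
print(active)  # node2Bk
```

Because every Galera node holds the same data, the shift only changes where connections land; no data needs to be re-aligned first.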

MySQL/Galera write scalability is limited by the capacity to manage the total amount of incoming writes by the single node. There is no write scaling in adding nodes. MySQL/Galera is simply a write distribution platform, and reads can be performed consistently on each node.

Aurora

Aurora is based on the RDS approach with the ease of management, built-in backup, data on disk autorecovery etc. Amazon also states that Aurora offers a better replication mechanism (~100 ms lag).

This architecture is based on a main node acting as a read/write node and a variable number of nodes that can work as read-only nodes. Given that the replication is claimed to be within ~100ms, reading from the replica nodes should be safe and effective. A read replica can be distributed by AZ (availability zone), but must reside in the same region.

Applications connect to an entry point that will not change. In case of failover, the internal mechanism will move the entry point from the failed node to the new master, and also all the read replicas will be aligned to the new master.

Data is replicated at a low level and pushed directly from the read/write data store to a read data store, so there should be a limited delay and very limited lag (~100 ms). Aurora replication is not synchronous, and given that only one node is active at a time, there is no data consistency validation or check.

In terms of scalability, Aurora does not scale writes by adding replica nodes. The only way to scale the writes is to scale up, meaning upgrading the master instance to a more powerful one. Given the nature of the cluster, it would be unwise to upgrade only the master.

Reads are scaled by adding new read replicas.

What about multi-region?

One of the increasing requests I receive is to design architectures that could manage failover across regions.

There are several issues in replicating data across regions, starting from data security down to packet size and frame dimension modification, given we must use existing networks for that.

For this investigation, it is important to note one thing: the only solution that could offer internal cross-region replication is Galera, with the use of Segments. But given the possible issues, I normally do not recommend using this solution when we talk of regions across continents. Galera is also the only one that would eventually help optimize asynchronous replication, using multiple nodes to replicate to another cluster.

Aurora and standard MySQL must rely on basic asynchronous replication, with all the related limitations.

Architecture for the investigation

The investigation I conducted used several components:

- EIP = 1

- VPC = 1

- ELB=1

- Subnets = 4 (1 public, 3 private)

- HAProxy = 6

- MHA Monitor (micro ec2) = 1

- NAT Instance (EC2) =1 (hosting EIP)

- DB Instances (EC2) = 6 + (2 stand by) (m4.xlarge)

- Application Instances (EC2) = 6

- EBS SSD 3000 PIOPS

- Aurora RDS node = 3 (db.r3.xlarge)

- Snapshots = (at regular intervals)

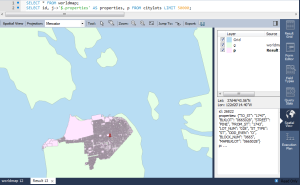

Application nodes connect to the databases using either an Aurora entry point or HAProxy. For MHA, the controller action was modified to act on an HAProxy configuration instead of on a VIP (Virtual IP), modifying its active node and reloading the configuration. For Galera, the shift was driven by the recognition of the node status using the HAProxy check functionality.

As indicated in the above schema, replication was distributed across several AZs. No traffic was allowed to reach the databases from outside the VPC. ELB was connected to the HAProxy instances, which has proven to be a good way to rotate across several HAProxy instances in case an additional HA layer is needed.

For the scope of the investigation, given that each application node was hosting a local HAProxy (the “by tile” approach), ELB was tested in the phase dedicated to identifying errors and saturation, but was not used in the subsequent performance and HA tests. The NAT instance was configured to allow access to each HAProxy web interface only, to review statistics and node status.

Tests

Description:

I performed 3 different types of tests:

- High availability

- Data ingest

- Data compliance

High Availability

Test description:

The tests were quite simple; I was running a script that, while inserting, was collecting the time of the command execution, storing the SQL execution time (with NOW()), returning the value to be printed, and finally collecting the error code from the MySQL command.

The result was:

Output

    2015-09-30 21:12:49     2015-09-30 21:12:49     0
            /\                      /\              /\
            |                       |               |
      Date time sys            now() MySQL    exit error code (bash)

Log from bash:

    2015-09-30 21:12:49 2015-09-30 21:12:49 0

Inside the table:

    CREATE TABLE `ha` (
      `a` int(11) NOT NULL AUTO_INCREMENT,
      `d` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
      `c` datetime NOT NULL,
      PRIMARY KEY (`a`)
    ) ENGINE=InnoDB AUTO_INCREMENT=178 DEFAULT CHARSET=utf8 COLLATE=utf8_unicode_ci;

    select a,d from ha;
    +-----+---------------------+
    | a   | d                   |
    +-----+---------------------+
    | 1   | 2015-09-30 21:12:31 |

For Galera, the HAProxy settings were:

    server node1 10.0.2.40:3306 check port 3311 inter 3000 rise 1 fall 2 weight 50
    server node2Bk 10.0.1.41:3306 check port 3311 inter 3000 rise 1 fall 2 weight 50 backup
    server node3Bk 10.0.1.42:3306 check port 3311 inter 3000 rise 1 fall 2 weight 10 backup

I ran the test script on the different platforms, without heavy load, and then close to the MySQL/Aurora saturation point.
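As a rough illustration of how a log in this format can be turned into a service interruption time, here is a minimal Python sketch. It is not the script used in the tests. It assumes that a failed insert is logged with a non-zero exit code and possibly without the NOW() value, and it measures the outage from the first failed insert to the next successful one. Only the first sample line comes from the log above; the others are made up for the example.

```python
from datetime import datetime
from typing import Iterable, Optional, Tuple


def parse_line(line: str) -> Tuple[datetime, int]:
    """Split a probe log line into (client timestamp, exit code).

    The first two fields are the client-side date and time and the last
    field is the exit code; the NOW() value in between may be missing
    when the insert failed (an assumption of this sketch).
    """
    fields = line.split()
    stamp = datetime.strptime(" ".join(fields[:2]), "%Y-%m-%d %H:%M:%S")
    return stamp, int(fields[-1])


def longest_outage_seconds(lines: Iterable[str]) -> float:
    """Longest span from the first failed insert to the next successful one."""
    longest = 0.0
    outage_start: Optional[datetime] = None
    for line in lines:
        if not line.strip():
            continue
        stamp, exit_code = parse_line(line)
        if exit_code != 0 and outage_start is None:
            outage_start = stamp  # the outage begins here
        elif exit_code == 0 and outage_start is not None:
            longest = max(longest, (stamp - outage_start).total_seconds())
            outage_start = None   # service is back
    return longest


sample_log = """\
2015-09-30 21:12:49 2015-09-30 21:12:49 0
2015-09-30 21:12:50 1
2015-09-30 21:12:54 1
2015-09-30 21:12:58 2015-09-30 21:12:58 0
"""
print(longest_outage_seconds(sample_log.splitlines()))  # 8.0
```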

High Availability Results

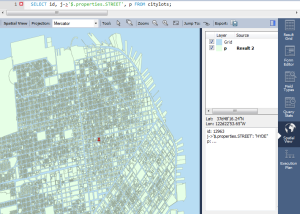

I think an image is worth millions of words.

MySQL with MHA was taking around 2 minutes to perform the full failover, meaning from interruption to when the new node was able to receive data again.

Under stress, the master was so far ahead of the slaves, and replication lag was so significant, that a failover with binlog application was just taking too long to be considered a valid option in comparison to the other two. This result was not a surprise, but it pushed me to analyze the MHA solution separately, given that its behaviour diverged so much from the other two that it was not comparable.

More interesting was the behavior of MySQL/Galera and Aurora. In this case, MySQL/Galera was consistently more efficient than Aurora, with or without load. It is worth mentioning that of the 8 seconds taken by MySQL/Galera to perform the failover, 6 were due to the HAProxy settings, which had a 3000 ms check interval and 2 failed checks required before executing the failover. Aurora was able to perform a decent failover when the load was low, while under increasing load the read nodes became less aligned with the write node, and as such less ready for failover.
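The 6 seconds attributed to HAProxy follow directly from the `inter 3000` and `fall 2` values in the settings shown earlier. A minimal sketch of that arithmetic, treating the detection time as simply interval times failed checks (real detection time also depends on check timeouts and on when the crash happens within the interval):

```python
def haproxy_detection_seconds(inter_ms: int, fall: int) -> float:
    """Approximate time for HAProxy to mark a server down:
    `fall` consecutive failed checks, spaced `inter_ms` apart."""
    return inter_ms * fall / 1000.0


detection = haproxy_detection_seconds(inter_ms=3000, fall=2)
failover_total = 8.0  # seconds measured for MySQL/Galera

print(detection)                   # 6.0
print(failover_total - detection)  # 2.0
```

Under this simplification, only about 2 of the 8 seconds are left for everything other than failure detection, which suggests that a shorter check interval would reduce the failover time further.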

Note that I was performing the tests following the Amazon indication to use the following to simulate a real crash:

ALTER SYSTEM CRASH [INSTANCE|NODE]

As such, I was not doing anything strange or out of the ordinary.

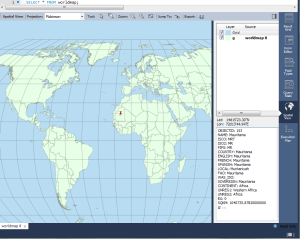

Also, while doing the tests, I had the opportunity to observe the Aurora replica lag using CloudWatch, which reported a much higher lag value than the claimed ~100 ms.

As you can see below:

In this case, I was getting almost 18 seconds of lag in the Aurora replication, much higher than ~100 ms!

Or a value that was not clear at all:

As you can calculate by yourself, 2E16 is several decades of latency.

Another interesting metric I was collecting was the latency between the application sending the request and the moment of execution.

Once more, MySQL/Galera is able to manage the requests more efficiently. With a high load and almost at saturation level, MySQL/Galera was taking 61 seconds to serve the request, while Aurora was taking 204 seconds for the same operation.

This indicates how high the impact of load can be in case of saturation, for the response time and execution. This is a very important metric to keep under observation to decide when or if to scale up.

Exclude What is Not a Full Fit

As previously mentioned, this investigation was intended to answer several questions, first of all about HA and failover time. Given that, I had to exclude the MySQL/MHA solution from the remaining analysis, because it is so drastically divergent that it would make no sense to compare it. In addition, analyzing the performance of the MySQL/MHA solution in conjunction with the others would have flattened the results of the other two. Details about MySQL/MHA are present in the Appendix.

Performance tests

Ingest tests

Description:

This set of tests was done to cover how the two platforms behaved under a significant amount of inserts.

I used IIbench with a single table, and my own StressTool, which instead uses several tables (configurable) plus other configurable options, such as:

- Configurable batch inserts

- Configurable insert rate

- Different access method to PK (simple PK or composite)

- Multiple tables and configurable table structure.

The two benchmarking tools differ also in the table definition:

IIBench

    CREATE TABLE `purchases_index` (
      `transactionid` bigint(20) unsigned NOT NULL AUTO_INCREMENT,
      `dateandtime` datetime DEFAULT NULL,
      `cashregisterid` int(11) NOT NULL,
      `customerid` int(11) NOT NULL,
      `productid` int(11) NOT NULL,
      `price` float NOT NULL,
      `data` varchar(4000) COLLATE utf8_unicode_ci DEFAULT NULL,
      PRIMARY KEY (`transactionid`),
      KEY `marketsegment` (`price`,`customerid`),
      KEY `registersegment` (`cashregisterid`,`price`,`customerid`),
      KEY `pdc` (`price`,`dateandtime`,`customerid`)
    ) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8 COLLATE=utf8_unicode_ci

StressTool

    CREATE TABLE `tbtest1` (
      `autoInc` bigint(11) NOT NULL AUTO_INCREMENT,
      `a` int(11) NOT NULL,
      `uuid` char(36) COLLATE utf8_unicode_ci NOT NULL,
      `b` varchar(100) COLLATE utf8_unicode_ci NOT NULL,
      `c` char(200) COLLATE utf8_unicode_ci NOT NULL,
      `counter` bigint(20) DEFAULT NULL,
      `time` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
      `partitionid` int(11) NOT NULL DEFAULT '0',
      `date` date NOT NULL,
      `strrecordtype` char(3) COLLATE utf8_unicode_ci DEFAULT NULL,
      PRIMARY KEY (`autoInc`,`date`),
      KEY `IDX_a` (`a`),
      KEY `IDX_date` (`date`),
      KEY `IDX_uuid` (`uuid`)
    ) ENGINE=InnoDB AUTO_INCREMENT=1 CHARSET=utf8 COLLATE=utf8_unicode_ci

IIbench was executed using 32 threads for each application server, while the stress tool was executed running 16/32/64 threads on each application node, resulting in 96, 192, and 384 total threads, each of which executed batch inserts with 50 inserts per batch (19,200 rows).
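The thread and row figures above can be re-derived with a few lines of Python. This is only a worked check of the arithmetic, under the assumption that the 19,200 rows refer to one batch submitted by every thread at the highest concurrency level; the `ingest_load` helper is hypothetical.

```python
APPLICATION_NODES = 6    # application instances in the test architecture
INSERTS_PER_BATCH = 50   # rows per batch insert


def ingest_load(threads_per_node: int,
                nodes: int = APPLICATION_NODES,
                inserts_per_batch: int = INSERTS_PER_BATCH):
    """Return (total threads, rows written when every thread sends one batch)."""
    total_threads = threads_per_node * nodes
    return total_threads, total_threads * inserts_per_batch


for per_node in (16, 32, 64):
    total_threads, rows = ingest_load(per_node)
    print(per_node, total_threads, rows)
# 16 96 4800
# 32 192 9600
# 64 384 19200
```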

Ingest Test Results

IIBench

Execution time:

Time to insert ~305 million rows in a single table using 192 threads.

Rows Inserted/Sec

Insert Time

The result of this test is once more quite clear, with MySQL/Galera able to manage the load in 1/3 of the time Aurora takes. MySQL/Galera was also more consistent in insert time as the number of rows in the table grew.

It is also of interest to note how the number of rows inserted decreased faster in MySQL/Galera relative to Aurora.

Java Stress Tool

Execution Time

This test was focused on multiple inserts (as with IIbench) but using an increasing number of threads and multiple tables. This test is closer to what could happen in real life, given that parallelization and multiple entry points are definitely more common than a single table insert in a relational database.

In this test Aurora performs better, and the distance is less evident than in the IIbench test.

In this test, we can see that Aurora is able to perform almost as well as a standard MySQL instance, but this performance does not persist as the number of concurrent threads increases. Actually, MySQL/Galera was able to manage the load of 384 threads in 1/3 of the time taken by Aurora.

Analyzing more in depth, we can see that MySQL/Galera is able to manage the commit phase more efficiently, which is surprising keeping in mind that MySQL/Galera is using synchronous replication and has to manage data validation and replication.

Row inserted

Com Commit

Commit Handler Calls

In conclusion, I can say that in this case too, MySQL/Galera performed better than Aurora.

Compliance Tests

The compliance tests I ran were using Tpcc-mysql with 200 warehouses, and StressTool with 15 parent tables and 15 child tables generating Select/Insert/Delete on a basic dataset of 100K entries.

All tests were done with the buffer pool saturated.

Tests for tpcc-mysql were using 32, 64, and 128 threads, while for StressTool I was using 16, 32, and 64 threads (multiplied by the 3 application machines).

Compliance Tests Results

Java Stress Tool

Execution Time

In this test, we have the applications performing concurrent reads and writes of rows on several tables. It is quite clear from the picture above that MySQL/Galera was able to process the load more efficiently than Aurora. Both platforms had a significant increase in execution time as the number of concurrent threads increased.

Both platforms reached saturation level with this test, using a total of 192 concurrent threads. Saturation occurred at a different moment and hit a different resource on each platform during the 192 concurrent threads test: in the case of Aurora it was CPU-bound and there was replication lag; for MySQL/Galera the bottlenecks were I/O and flow control.

Rows Insert and Read

In relation to the rows managed, MySQL/Galera performed better in terms of quantity of rows managed, but this trend went down significantly as concurrency increased. Both read and write operations were affected. Aurora was managing less in terms of volume, but became more consistent as concurrency increased.

Com Select Insert Delete

Analyzing the details by type of command, it is possible to identify that MySQL/Galera was more affected in the read operations, while writes showed less variation.

Handlers Calls

In write operations, Aurora was inserting a significantly lower volume of rows, but it was more consistent as concurrency increased. This is probably because the load exceeded the capacity of the platform, and Aurora was acting at its limit. MySQL/Galera was instead able to manage the load with 2 times the performance of Aurora, even though the increasing concurrency was negatively affecting the trend.

TPCC-mysql

Transactions

The Tpcc-mysql is emulating the CRUD activities of transaction users against N warehouses. In this test, I used 200 warehouses; each warehouse has 10 terminals, with 32, 64, and 128 threads.

Once more, MySQL/Galera is consistently better than Aurora in terms of volume of transactions. The average per-second results were also consistently almost 2.6 times better than Aurora's. On the other hand, Aurora shows less fluctuation in serving the transactions, having a more consistent trend for each execution.

Average Transactions

Conclusions

High Availability

MHA excluded, the other two platforms were shown to be able to manage the failover operation in a limited time frame (below 1 minute); nevertheless, MySQL/Galera was shown to be more efficient and consistent, especially in consideration of the unexpected and sometimes not fully justified episodes of Aurora replication lag. This result is a direct consequence of synchronous replication, which by design does not allow an active MySQL/Galera node to fall behind.

In my opinion, the replication method used in Aurora is efficient, but it still allows node misalignments, which is obviously not optimal when there is the need to promote a read-only node to become a read/write instance.

Performance

MySQL/Galera was able to outperform Aurora in all tests: by execution time, number of transactions, and volume of rows managed. Also, scaling up the Aurora instance did not have the impact I was expecting. Actually, it was still not able to match the performance of MySQL/Galera on EC2, even though the latter had less memory and fewer CPUs.

Note that while I had to exclude the MHA solution because of the failover time, the performance achieved using standard MySQL was by far better than that of MySQL/Galera or Aurora; please see the Appendix.

General Comment on Aurora

The Aurora failover mechanism is not very different from that of other solutions. In case of a node crash, another node will be elected as the new primary node on the basis of the "most up-to-date" rule.

Replication is not illustrated clearly in the documentation, but it seems to be a combination of block device distribution and semi-sync replication, meaning that a primary is not really affected by the possible delay in the copy of a block once it's dispatched. What is also interesting is the way the data of a volume is repaired in case of issues: it is copied over from another location or volume that hosts the correct data. This resembles the HDFS mechanism, and may well be exactly it; what is relevant is that, if it is HDFS, the latency of the operation may be significant. What is also relevant in this scenario is the fact that if the primary node crashes, there will be a service interruption during the election of a secondary to primary; this can be up to 2 minutes according to the documentation, as verified in the tests.

About replication, it is stated in the documentation that the replication can take ~100 ms, but that is not guaranteed and is dependent on the level of write traffic incoming to the primary server. I have already reported that this is not true, and replication can take significantly longer.

What happened during the investigation is that the more writes there were, the greater the possible distance between the data visible in the primary and the data visible in the replica (replication lag). No matter how efficient the replication mechanism is, this is not synchronous replication, and it does not guarantee consistent data reading by transaction.

Finally, replication across regions is not even in the design of the Aurora solution, and it must rely on standard replication between servers, as with asynchronous MySQL replication. Aurora is nothing more, nothing less than RDS on steroids, with smarter replication.

Aurora is not performing any kind of scaling in writes; scaling is performed in reads. The way it scales in writes is by scaling up the box, so more power and memory = more writes. This is nothing new, and obviously scaling up also costs more. That said, I think Aurora is a very valuable solution when in need of a platform that requires extensive read scaling (in/out), and for rolling out a product in phase 1.

Appendix MHA

MHA performance Graphs

As previously mentioned, MySQL/MHA was performing significantly better than the other two solutions. The IIBench test completed in 281 seconds, against the 4039 seconds of Galera.

IIBench Execution Time

![MHA_exec_time]()

MySQL/MHA execution time (Ingest & Compliance)

The execution of the Ingest and Compliance tests in MySQL/MHA was 3 times faster than in MySQL/Galera.

MySQL/MHA Rows Inserted (Ingest & Compliance)

The number of inserted rows is consistent with the execution time, being 3 times the number achieved with the MySQL/Galera solution. MySQL/MHA was also able to better manage the increasing concurrency, both with simple inserts and with concurrent read/write operations.
