How to drop a column in a MySQL table

In this 101 article, I will show how to drop/remove a column from a table in MySQL.

In this article I will use an example table:

CREATE TABLE tb( c1 INT PRIMARY KEY, c2 char(1), c3 varchar(2) ) ENGINE=InnoDB;

To remove a column we will make use of the ALTER TABLE command:

ALTER TABLE tb DROP COLUMN c2;

The command allows you to remove multiple columns at once:

ALTER TABLE tb DROP COLUMN c2, DROP COLUMN c3;

If you are running MySQL 5.6 or later, you can perform this operation online, allowing other sessions to read and write to your table while the operation is being performed:

ALTER TABLE tb DROP COLUMN c2, ALGORITHM=INPLACE, LOCK=NONE;
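If you generate such statements from a script, the variants above can be assembled by a small helper. This is only a sketch: the function name `drop_column_sql` and its `online` flag are made up for illustration, and the helper only builds the statement text, it does not talk to a server.

```python
def drop_column_sql(table, columns, online=False):
    """Build an ALTER TABLE statement that drops one or more columns.

    Hypothetical helper for illustration; it returns SQL text only.
    """
    if not columns:
        raise ValueError("at least one column is required")

    def quote(name):
        # Backtick-quote identifiers; embedded backticks are doubled.
        return "`" + name.replace("`", "``") + "`"

    clauses = [f"DROP COLUMN {quote(c)}" for c in columns]
    if online:
        # Requesting INPLACE/NONE explicitly makes the statement fail
        # instead of silently falling back to a blocking table copy.
        clauses += ["ALGORITHM=INPLACE", "LOCK=NONE"]
    return f"ALTER TABLE {quote(table)} " + ", ".join(clauses) + ";"


print(drop_column_sql("tb", ["c2", "c3"], online=True))
```

The identifiers are backtick-quoted so that column names which collide with reserved words still produce a valid statement.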

Reference:

https://dev.mysql.com/doc/refman/5.7/en/alter-table.html

https://dev.mysql.com/doc/refman/5.7/en/innodb-create-index-overview.html

Understanding ProxySQL

In this post, I will cover a bit of ProxySQL. ProxySQL is a proxy that implements the MySQL protocol, which allows it to do things that other proxies cannot do. ProxySQL is gaining a lot of traction nowadays and it can integrate with a variety of products from the MySQL ecosystem, such as replication (master-slave / master-master), Percona XtraDB Cluster and Group Replication.

One of its many functionalities (which IMHO makes it awesome) is the ability to do read/write splitting seamlessly for the application. You can start sending reads to your slave without writing a single line of code.

In this article, I will cover a few important points to understand how it works.

Installation

The installation is easy: download the package for your OS from the official GitHub repo, https://github.com/sysown/proxysql/releases, and install it. For example, on CentOS 7:

sudo yum install https://github.com/sysown/proxysql/releases/download/v1.4.9/proxysql-1.4.9-1-centos7.x86_64.rpm

Now we just need to start the service:

sudo service proxysql start

Interfaces

ProxySQL separates the application interface from the admin interface. It listens on two network ports: the admin interface on 6032 and the application interface on 6033 (the reverse of 3306).

Layers

Another important part of understanding how the proxy works is understanding its layers. Here is a diagram that can be found in its official documentation:

    +-------------------------+
    |       1. RUNTIME        |
    +-------------------------+
           /|\          |
            |           |
            |           |
            |          \|/
    +-------------------------+
    |        2. MEMORY        |
    +-------------------------+
           /|\          |
            |           |
            |           |
            |          \|/
    +-------------------------+
    |         3. DISK         |
    +-------------------------+

ProxySQL always reads its working configuration from layer 1, Runtime, which is stored in memory.

Every time we connect to the admin port (6032) we are manipulating information in layer 2, Memory. As the name implies, it is also stored in memory.

Then we have layer 3, Disk. As the other two layers are stored in memory, we need a layer to persist information across service/server restarts.

What is the benefit of this layout?

It allows us to manipulate different areas and apply the changes all at once. Think about how a transaction works: we run multiple queries and commit them together. When we alter something, we are manipulating the Memory layer. We then run LOAD MYSQL [SERVERS | USERS | QUERY RULES] TO RUNTIME to load this information into Runtime, and we save it to the Disk layer by issuing SAVE MYSQL [SERVERS | USERS | QUERY RULES] TO DISK.
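The staging behaviour can be pictured with a toy model. The sketch below is not ProxySQL code; the class `LayeredConfig`, its method names and the host names are invented for illustration, and it models a single module (servers) only.

```python
import copy


class LayeredConfig:
    """Toy model of the three configuration layers for one module."""

    def __init__(self):
        self.runtime = []  # what the proxy actually uses
        self.memory = []   # what the admin interface edits
        self.disk = []     # what survives a restart

    def load_to_runtime(self):
        # Analogous to: LOAD MYSQL SERVERS TO RUNTIME
        self.runtime = copy.deepcopy(self.memory)

    def save_to_disk(self):
        # Analogous to: SAVE MYSQL SERVERS TO DISK
        self.disk = copy.deepcopy(self.memory)

    def restart(self):
        # In this model, both in-memory layers are rebuilt from disk.
        self.memory = copy.deepcopy(self.disk)
        self.runtime = copy.deepcopy(self.disk)


cfg = LayeredConfig()
cfg.memory.append({"hostgroup_id": 1, "hostname": "db-master"})
cfg.memory.append({"hostgroup_id": 2, "hostname": "db-replica-1"})
staged_only = len(cfg.runtime) == 0  # edits are not live yet

cfg.load_to_runtime()  # both rows become live together
cfg.save_to_disk()     # and now survive a restart
cfg.restart()
```

Until `load_to_runtime()` is called, the two new rows exist only in the Memory layer, which is what makes the "edit several things, then apply once" workflow possible.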

Hostgroups

ProxySQL groups servers into something called a hostgroup. In a topology with a master and two slaves, we create hostgroup (HG) 1 and specify that our master belongs to it, then create HG 2 and specify that both slaves belong to it. Hostgroups are created at the time we add servers to the mysql_servers table. There is no fixed numbering; you can create your HG with any ID you want.

Later we will configure users and query rules so that an incoming read is answered by one of the servers in HG 2 (where we configured our slaves). If the query is not a read, our server in HG 1 receives it.
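The routing decision can be sketched as an ordered list of patterns. This is a simplified illustration, not how ProxySQL is implemented: the two regular expressions and the `route` function are assumptions chosen for the example, with the hostgroup IDs taken from the topology above.

```python
import re

WRITER_HG = 1  # hostgroup holding the master
READER_HG = 2  # hostgroup holding the two slaves

# Hypothetical rules, checked in order; the first match wins.
RULES = [
    # SELECT ... FOR UPDATE takes locks, so it must go to the master.
    (re.compile(r"^\s*SELECT\b.*\bFOR\s+UPDATE\b", re.I | re.S), WRITER_HG),
    (re.compile(r"^\s*SELECT\b", re.I), READER_HG),
]


def route(query, default_hostgroup=WRITER_HG):
    """Return the hostgroup that should receive the query."""
    for pattern, hostgroup in RULES:
        if pattern.search(query):
            return hostgroup
    # Anything that is not recognised as a read goes to the writer.
    return default_hostgroup


for q in ("SELECT * FROM tb",
          "SELECT c1 FROM tb WHERE c1 = 1 FOR UPDATE",
          "UPDATE tb SET c2 = 'a' WHERE c1 = 1"):
    print(route(q), q)
```

Defaulting to the writer hostgroup is the conservative choice: a misclassified read costs a little master capacity, while a misclassified write sent to a slave would fail or be lost.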

Authentication

ProxySQL has firewall-like functionality: it can block a query before it even reaches our backend server. For this to work, the user authentication module must also be present on the proxy side, so every user that should connect via ProxySQL must also be created on the proxy.

Now that you understand the basics of how ProxySQL works, you can start playing with it.

Analyze MySQL & MariaDB Error Log Messages using Monyog

The MySQL error log is an essential part of database server performance monitoring. Whenever something goes wrong or performance degrades, the Error Logs are usually the first place we look to start troubleshooting.

The MySQL Error Log is one of three related log types:

- The Error Log: It contains information about errors that occur while the server is running (as well as server start and stop events).

- The General Query Log: This is a general record of what mysqld is doing (connect, disconnect, queries).

- The Slow Query Log: It consists of “slow” SQL statements as defined in the long_query_time global variable.

You can enable error log monitoring to allow Monyog to keep an eye on your MySQL Error Log, and notify you when something goes awry. Moreover, Monyog combines the General Query, Slow Query and Error logs in a single view for both network and cloud servers. For example, in the case of Amazon RDS, Monyog utilizes the Amazon RDS Application Programming Interface (API).

In the Error Logging in MySQL 8 blog article, we were introduced to MySQL’s new component-based architecture. This new architectural model is more modular because components may only interact with each other through the services they provide. Services provided by components are available to the server as well as to other components.

These changes have added to error logging flexibility. Case in point, log messages may now be written in a particular format and sent either to a file or to the system log.

The improved flexibility comes with an equal increase in configuration complexity, as a log component can be a filter or a sink:

- A filter processes log events, to add, remove, or modify individual event fields, or to delete events entirely.

- A sink is a destination (writer) for log events. Typically, a sink processes log events into log messages that have a particular format and writes these messages to its associated output, such as a file or the system log.
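The filter/sink split can be pictured as a small pipeline. The sketch below is a conceptual model only, not the MySQL component API: the function and class names, the event fields and the host name `db1` are all invented for illustration.

```python
def drop_notes(event):
    """Filter: delete low-priority events entirely."""
    return None if event["prio"] == "Note" else event


def add_host(event):
    """Filter: add a field to every event that passes through."""
    return {**event, "host": "db1"}  # "db1" is a made-up host name


class ListSink:
    """Sink: format events into messages and write them to a list."""

    def __init__(self):
        self.lines = []

    def write(self, event):
        self.lines.append(f'{event["host"]} [{event["prio"]}] {event["msg"]}')


def log(event, filters, sinks):
    # Filters run first and may modify or drop the event.
    for f in filters:
        event = f(event)
        if event is None:
            return
    # Every sink then receives the same filtered event.
    for s in sinks:
        s.write(event)


sink = ListSink()
log({"prio": "Note", "msg": "ready for connections"}, [drop_notes, add_host], [sink])
log({"prio": "ERROR", "msg": "disk full"}, [drop_notes, add_host], [sink])
print(sink.lines)
```

Only the second event reaches the sink, because the first one is removed by the `drop_notes` filter before any sink sees it.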

For users of Monyog, these complexities are a non-issue, as Monyog makes error log monitoring a straightforward process of entering log details on the Settings screen and then viewing output on the Monitors screen.

This blog will describe how to configure your MySQL/MariaDB Error Log settings, view output, and glean useful insights from error messages.

What Goes in the Error Log?

The MySQL Error Log may contain messages of varying severities. It collects messages that require persistence beyond that of a single connection or the server’s runtime, and whose intended audience is the DBA or system administrator rather than the application developer, though the latter may sometimes find the Error Log contents instructive as well. Examples of Error Log entries include exhaustion of resources on the host machine, certain errors reported by the host operating system, stack traces of crashes, messages about damaged databases and indexes, errors on start-up, failed or refused connections, etc.

The error log contains a record of mysqld startup and shutdown times. It also contains diagnostic messages such as errors, warnings, and notes that occur during server startup and shutdown, and while the server is running. For example, if mysqld comes across a table that needs to be checked or repaired, it writes a message to the error log.

On some operating systems, the error log contains a stack trace if mysqld exits abnormally. The trace can be used to determine where mysqld exited.

Configuring Error Log Settings

Monitoring the error log is crucial, as changes to the log can be indicative of serious outages. Monyog makes the task of monitoring the error log very simple. All one needs to do is configure Monyog, and it will take care of the rest! Monyog will alert you of changes in the error log, and in addition, if there is an entry of type [ERROR] in the log, Monyog will extract the corresponding message and email it to designated persons.

Error log monitoring has to be enabled in the server settings before Monyog can read and analyze the log. To do that:

- Navigate to the SERVERS screen and click on the ellipsis […] to open the context menu (#1 in the image below).

- Choose the Edit Server item from the context menu (#2 in the image below).

- On the Server Settings dialog (#3 in the image below):

  - Click on the ADVANCED header to bring up the advanced server settings (#3A in the image below).

  - Select MySQL Server Log from the tree on the left-hand side of the dialog (#3B in the image below).

To configure error log monitoring:

- Click the Enable error log monitoring toggle switch so that the slider moves to the right. The background color will also go from grey to blue.

- You can either enter the error log FILE PATH manually, or let Monyog get the path of the error log from the MySQL server for you. Just click on the Fetch button to the right of the FILE PATH text field, and Monyog will locate the file!

- The READ FILE FROM field sets how Monyog will access the Error Log File. There are 3 ways of accessing the log files:

  - Select Local path if the Error Log File resides on the same machine that Monyog is running on or on a shared network drive.

  - Choose Via SFTP if you have configured Monyog to use SSH. You’ll then have to supply your SSH details.

  - Select RDS/Aurora (Using API) if your server is an RDS/Aurora instance. For file-based logging, you’ll have to fill in four additional fields, as follows:

    - DB instance identifier: A unique name to identify your RDS/Aurora instance.

    - Instance region: The region in which your instance is hosted, e.g. “us-east-1”.

    - Access key ID: A 20-character key ID which can be created from the AWS Management console. It is used to make programmatic requests to AWS.

    - Secret access key: A 40-character key which can be created from the AWS Management console.

- Click the TEST READING THE FILE button to check if Monyog can access the file specified in the File path. A message will appear in the bottom right corner of the browser window.

- You can APPLY THE SETTING TO Only this server or All servers with tags same as this server.

Click the SAVE button to save your Server Settings and close the dialog.

Viewing the Error Log File

MySQL Log data is combined on the MySQL Logs screen. Like all monitors, it can show log information for multiple servers. The MySQL Logs screen is accessible via the Monitors icon (on the left-hand toolbar) > MySQL Logs (in the MONITOR GROUP list).

The MySQL Log screen displays multiple servers side-by-side:

This MySQL Log screen shows the local Sakila server:

The MySQL Error Log occupies the first three rows of the MONITORS table:

- MONyog able to read MySQL error log?: A Yes/No value indicating whether Monyog is able to access the MySQL error log. If No is indicated, go back to the Error Log Settings screen and make sure that Error Log monitoring is enabled and that Monyog can read the file.

- New entries in error log?: This Yes/No value indicates whether the error log has changed during the selected TIMEFRAME.

- Recent entries of type [ERROR]: MySQL logs three kinds of Log entries – Notes, Warnings, and Errors. Any log entries recorded with an [ERROR] type are displayed here.
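To make the three entry types concrete, here is a rough sketch of picking [ERROR] entries out of a log file. It is not how Monyog does it; the regular expression assumes the MySQL 5.7-style line layout (timestamp, thread id, bracketed level, message), and the sample lines are made up.

```python
import re

# Assumed layout: "<timestamp> <thread id> [<level>] <message>"
ENTRY = re.compile(
    r"^(?P<ts>\S+)\s+\S+\s+\[(?P<level>Note|Warning|ERROR)\]\s+(?P<msg>.*)$"
)


def error_entries(lines):
    """Return (timestamp, message) pairs for entries of type [ERROR]."""
    found = []
    for line in lines:
        m = ENTRY.match(line)
        if m and m.group("level") == "ERROR":
            found.append((m.group("ts"), m.group("msg")))
    return found


# Made-up sample lines for illustration.
sample = [
    "2018-04-26T15:58:23.000000Z 0 [Note] Server socket created on IP: '::'.",
    "2018-04-26T15:58:24.000000Z 0 [Warning] Changed limits: max_open_files: 1024",
    "2018-04-26T15:58:25.000000Z 0 [ERROR] Can't start server: Bind on TCP/IP port",
]

for ts, msg in error_entries(sample):
    print(ts, msg)
```

Lines that do not match the assumed layout (for example stack trace continuation lines) are simply skipped by this sketch.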

Getting the Most out of the MySQL Log Screen

The MySQL Log Screen does a lot more than display server errors. In addition to alerting you of changes in the error log, Monyog provides useful information about each monitor, as well as historical data. It can even extract the message from [ERROR] entries and send them to you via email.

Monitor Editing

Monitor names are clickable links that open the associated EDIT MONITOR screen on the right-hand side, where you can view and modify monitor parameters.

Here’s a description of the form fields. Mandatory fields are marked with an asterisk (*):

- Name*: The name of the monitor.

- Monitor Group*: The monitor group that this monitor belongs to.

- Type of counter: Choices include MySQL, System, or Custom SQL. MySQL or System indicates that this Monitor displays MySQL or system-related information respectively, while Custom SQL indicates that this Monitor is based on a Custom SQL Object. Only MySQL is applicable to existing Error Log monitors.

- Enabled?: A toggle switch indicating whether or not the monitor is enabled. Moving the slider to the right enables the monitor while moving it to the left turns the monitor off. The background color will also go from grey to blue when the monitor is enabled.

- Formula: A MySQL server parameter on which the value of this counter is based. None are applicable to the Error Log, so this field should normally be left blank.

- Value: This specifies a JavaScript function that computes the value. For example, this code fetches the last Error Log entry:

    function x()
    {
        var val = MONyog.MySQL.ErrorLog.Last_error;
        // Fall back to a placeholder when there is no last error to show
        if (typeof val == "undefined" || val == 0 || val == "")
            return "(n/a)";
        else
            return val;
    }

- Description: A detailed summary of the monitor.

- Advice text: Provides additional information about the monitor, such as how to interpret its output as well as how to correct potential problems.

Setting Alerts

Monyog tells you that a monitor reading could be pointing to a potential issue to investigate by placing a Red or Yellow alert next to it. For example, here is a Critical alert next to a Log Error entry:

Alert settings are configured on the Alerts tab of the EDIT MONITOR screen.

The Email Recipients textbox accepts a comma-delimited list of email addresses to send notifications to when an alert is triggered. Clicking the Advanced link divides recipients into Critical and Warning lists.

You can turn Notifications on and off via the Notifications slider control. Note that you also have to configure SMTP and/or SNMP for Monyog, as well as Notification Settings for servers, for this feature to work.

You can also override the notification settings via the Override notify when stable? and Override notify till stable? fields. Each includes radio buttons for “Yes”, “No”, and “Disable”. If set to “Disable”, this variable will not override the server-level setting.

When notifications are enabled for a monitor, the Notifications enabled icon appears beside the monitor name.

Viewing Historical Trend Values

The term “Trend Analysis” refers to the concept of collecting information in an effort to spot a pattern, or trend, in the data. With respect to database administration, analyzing history reports gives a way to track trends and identify problem areas in your infrastructure. The idea is that, by using historical information to find recurring problems, you may be able to prevent future issues.

Each server column cell has a History trend value icon on the right-hand side. Clicking it displays historical trend data for the selected TIMEFRAME.

Clicking the SHOW ONLY CHANGED VALUES slider toggles between all log entries and only those where the value has changed.

Conclusion

An essential part of database server performance monitoring, the MySQL error log is usually the first place we look to start troubleshooting database issues. In today’s blog, we learned how Monyog facilitates error log monitoring on MySQL and MariaDB servers by combining all of the log output together on one screen. Moreover, Monyog offers additional value by providing features such as Alerts, Notifications, and History Trend Values.

The post Analyze MySQL & MariaDB Error Log Messages using Monyog appeared first on Monyog Blog.

Impact of sharding on query performance in MySQL Cluster

A new week of blogs about our development in MySQL Cluster 7.6.

After working a long time on a set of new developments, there are a lot of things to describe. I will continue this week by discussing sharding and NDB and a new cloud feature in 7.6, and by providing some benchmark results on restart performance in 7.6 compared to 7.5. I am also planning a comparative analysis for a few more versions of NDB.

In the blog series I have presented recently we have displayed the performance impact of various new features in MySQL Cluster 7.5 and 7.6. All these benchmarks were executed with tables that used 1 partition. The idea behind this is that to develop a scalable application it is important to develop partition-aware applications.

A partition-aware application will ensure that all partitions except one are pruned away from the query. Thus its queries get the same performance as a query on a single-partition table.
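Pruning can be illustrated with a toy hash-partitioned table. This is a sketch under simplifying assumptions: `zlib.crc32` stands in for NDB's real hash function, and `partitions_to_scan` is a hypothetical helper, not an NDB API.

```python
import zlib

NUM_PARTITIONS = 8


def partition_of(key):
    # Stand-in hash; NDB's real partitioning hash is different.
    return zlib.crc32(str(key).encode()) % NUM_PARTITIONS


def partitions_to_scan(partition_key=None):
    """Partitions a query must visit.

    A partition-aware query supplies the partition key, so all other
    partitions are pruned. Without the key, every partition is scanned.
    """
    if partition_key is not None:
        return [partition_of(partition_key)]
    return list(range(NUM_PARTITIONS))


print(len(partitions_to_scan(partition_key=42)))  # pruned to one partition
print(len(partitions_to_scan()))                  # scans all partitions
```

The pruned query does the same amount of work regardless of how many partitions the table has, which is why it behaves like a query on a single-partition table.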

Now in this blog we analyse the difference between using 1 partition per table and using 8 partitions per table.

The execution difference is that with 8 partitions we have to dive into the tree 8 times instead of once, and we have to take the startup cost of the scan as well. At the same time, using 8 partitions means that we get some amount of parallelism in the query execution, and this speeds up query execution during low concurrency.

Thus there are two main differences between single-partition scans and multi-partition scans.

The first difference is that the parallelism decreases the latency of query execution at low concurrency. More partitions means a higher speedup.

The second difference is that the data node will spend more CPU to execute the query for multi-partition scans compared to single-partition scans.

Most of the benchmarks I have shown are limited by the cluster connection used. Thus we haven't focused so much on the CPU usage in data nodes.

In the graph above the improvement of query speed is around 20% at low concurrency. The performance difference at other concurrency levels is small, although the multi-partition scans use more CPU. The multi-partition scans are, however, a bit more variable in their throughput.

Tests where I focused more on data node performance showed around 10% overhead for multi-partition scans compared to single-partition scans in a similar setup.

An interesting observation is that although most applications should be developed with partition-aware queries, those queries that are not pruned to one partition will be automatically parallelised.

This is the advantage of the MySQL Cluster auto-sharded architecture.

In a sharded setup using any other DBMS it is necessary to ensure that all queries are performed in only one shard, since there are no automatic queries over many shards. This means that partition-aware queries can be handled in only one data server, but the application will have to calculate where this data server resides. Cross-shard queries have to be managed by the application, which means both sending queries in parallel to many shards and merging the results from many shards.

With NDB all of this is automatic. If the query is partition-aware, it will be automatically directed to the correct shard (node group in NDB). If the query isn't partition-aware and thus a cross-shard query, it is automatically parallelised. It is even possible to push join queries down into the NDB data nodes to execute the join queries using a parallel linked-join algorithm.
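For comparison, this is roughly the work an application has to do itself for a cross-shard query in a manually sharded setup: send the query to every shard in parallel, then merge the partial results. The sketch uses in-memory lists as hypothetical shards and threads as stand-ins for network calls; it is not NDB code.

```python
from concurrent.futures import ThreadPoolExecutor
import heapq

# Hypothetical shards: each holds rows (key, value) sorted by key.
SHARDS = [
    [(1, "a"), (5, "e")],
    [(2, "b"), (4, "d")],
    [(3, "c"), (6, "f")],
]


def scan_shard(rows, low, high):
    """Range scan on one shard (stands in for a query sent to one server)."""
    return [row for row in rows if low <= row[0] <= high]


def cross_shard_range(low, high):
    # Scatter: run the same scan on every shard in parallel.
    with ThreadPoolExecutor(max_workers=len(SHARDS)) as pool:
        partials = list(pool.map(lambda rows: scan_shard(rows, low, high), SHARDS))
    # Gather: each partial result is sorted, so a k-way merge keeps key order.
    return list(heapq.merge(*partials))


print(cross_shard_range(2, 5))
```

With NDB, as described above, both the scatter and the gather step happen inside the cluster rather than in application code.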

As we have shown in earlier blogs and will show even more in coming blogs, NDB using the Read Backup feature will ensure that read queries are directed to a data node that is as local as possible to the MySQL Server executing the query. This is also true for join queries being pushed down to the NDB data nodes.

MySQL 8.0 Data Dictionary

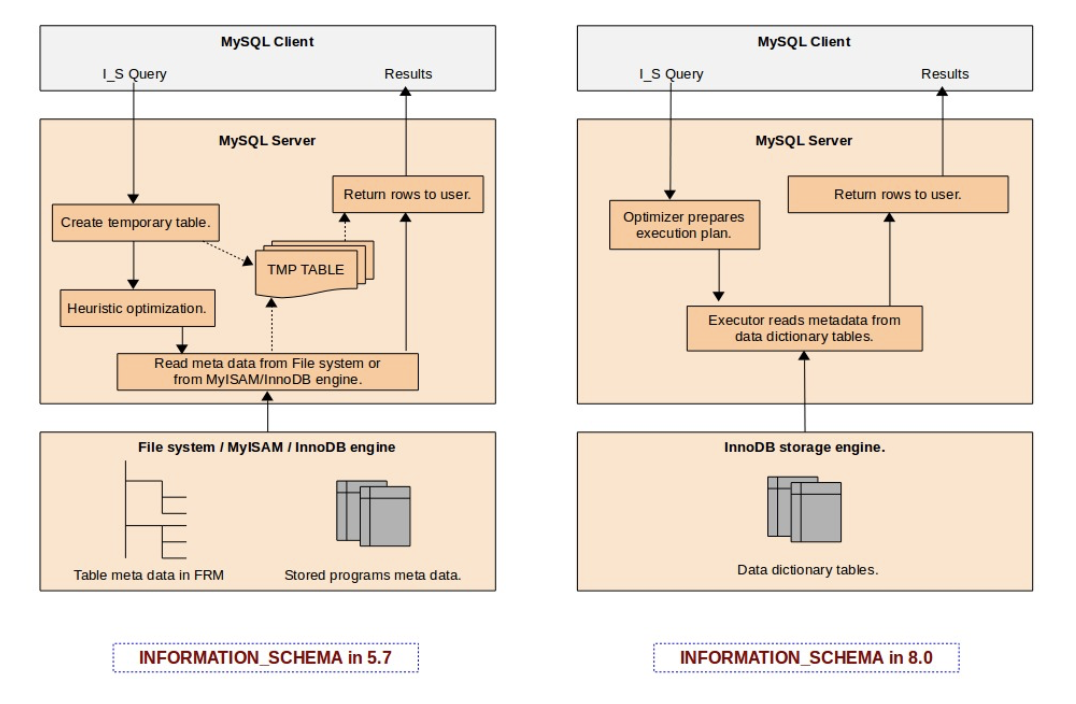

We have all been familiar with “.frm” files since the earliest days of MySQL. The community has continuously requested a replacement for file-system based metadata, for several good reasons. With MySQL 8.0, “.frm” files are gone for good: going forward, MySQL stores table metadata in data dictionary tables which use the InnoDB storage engine. This blog is about the MySQL 8.0 data dictionary and how it creates value for MySQL going forward:

How did file-based metadata management work in the past (before MySQL 8.0)?

- Every table in MySQL had a corresponding .frm file. This .frm file stored information like column names and data types in a binary format. In addition to the .frm file, there were .trg, .trn and .par files to support triggers, trigger namespaces and partitioning.

What are the major bottlenecks caused by file-based metadata management?

- Operationally it always appeared very irrational: why do we need a separate mechanism to track the schema information? The idea of removing it originally came from Drizzle, which made it very clear (almost) that the server should get out of the way and let the storage engines be the storage engines, and not try to second-guess them or keep track of things behind their back.

- Dictionaries out of sync: Before MySQL 8.0, the data dictionary was a “split brain”, where the server and InnoDB each had their own separate data dictionary, with some information duplicated. Information that is duplicated in the MySQL server dictionary and the InnoDB dictionary might get out of sync, and we need one common “source of truth” for dictionary information.

- INFORMATION_SCHEMA is the bottleneck: The main reason behind the performance issues in the INFORMATION_SCHEMA implementation (before MySQL 8.0) is that INFORMATION_SCHEMA tables are implemented as temporary tables that are created on the fly during query execution. For a MySQL server with hundreds of databases, each containing hundreds of tables, an INFORMATION_SCHEMA query would end up doing a lot of I/O, reading each individual FRM file from the file system. It would also end up using more CPU cycles to open each table and prepare the related in-memory data structures. It does attempt to use the MySQL server table cache (the system variable table_definition_cache), however in large server instances it’s very rare to have a table cache that is large enough to accommodate all of these tables.

- No atomic DDL: Storing the data dictionary in non-transactional tables and files means that DDLs are unsafe for replication (they are not transactional, not even atomic). If a compound DDL fails we still need to replicate it and hope that it fails with the same error. This is a best-effort approach and there is a lot of logic coded to handle it. It is hard to maintain, slows down progress and bloats the replication codebase. The data dictionary is stored partly in non-transactional tables. These are not safe for replication or for building resilient HA systems on top of MySQL. For instance, some dictionary tables need to be manipulated using regular DML, which causes problems for GTIDs.

- Crash recovery: Since DDL statements are not atomic, it is challenging to recover after crashing in the middle of a DDL execution, which is especially problematic for replication.

How have things changed with MySQL 8.0?

MySQL 8.0 introduced a native data dictionary based on InnoDB. This change has enabled us to get rid of the file-based metadata store (FRM files) and also helps MySQL move towards supporting transactional DDL. Now that the metadata of all database tables is stored in transactional data dictionary tables, an INFORMATION_SCHEMA table can be designed as a database VIEW over the data dictionary tables. This eliminates costs such as creating temporary tables on the fly for each INFORMATION_SCHEMA query, and scanning file-system directories to find FRM files. It is also now possible to use the full power of the MySQL optimizer to prepare better query execution plans using indexes on data dictionary tables.
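The "view over dictionary tables" idea can be demonstrated in miniature. The sketch below uses SQLite (through Python's standard sqlite3 module) purely as a stand-in; the table `dd_tables`, the view `info_schema_tables` and their columns are hypothetical and do not reflect MySQL's real dictionary layout.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical dictionary table: one row of metadata per user table.
    CREATE TABLE dd_tables (
        id INTEGER PRIMARY KEY,
        schema_name TEXT,
        name TEXT,
        engine TEXT
    );
    CREATE INDEX dd_tables_by_schema ON dd_tables (schema_name, name);

    -- The information-schema style table is just a view over it.
    CREATE VIEW info_schema_tables AS
        SELECT schema_name AS table_schema, name AS table_name, engine
        FROM dd_tables;

    INSERT INTO dd_tables (schema_name, name, engine) VALUES
        ('sakila', 'actor', 'InnoDB'),
        ('sakila', 'film', 'InnoDB'),
        ('test', 'tb', 'InnoDB');
""")

# Querying the view reads the indexed dictionary table directly:
# no temporary table is built and no per-table files are opened.
rows = conn.execute(
    "SELECT table_name FROM info_schema_tables "
    "WHERE table_schema = ? ORDER BY table_name",
    ("sakila",),
).fetchall()
print(rows)
```

Because the view is resolved against an ordinary indexed table, the optimizer can use the index on `(schema_name, name)` for the filter, which is the kind of benefit the paragraph above describes.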

The following diagram (Source: MySQL server team blog) explains the difference in design in MySQL 5.7 and 8.0 :

The post MySQL 8.0 Data Dictionary appeared first on MySQL Consulting, Support and Remote DBA Services By MinervaDB.

ProxySQL Experimental Feature: Native ProxySQL Clustering

ProxySQL 1.4.2 introduced native clustering, allowing several ProxySQL instances to communicate with and share configuration updates with each other. In this blog post, I’ll review this new feature and how we can start working with 3 nodes.

Before I continue, let’s review two common methods of installing ProxySQL.

ProxySQL as a centralized server

This is the most common installation, where ProxySQL is between application servers and the database. It is simple, but without any high availability. If ProxySQL goes down you lose all connectivity to the database.

ProxySQL on app instances

Another common setup is to install ProxySQL onto each application server. This is good because the loss of one ProxySQL/App server will not bring down the entire application.

For more information about these installation methods, see the post Where Do I Put ProxySQL?

Sometimes our application and databases grow fast. Maybe you need to add a load balancer, for example, and at that moment you start thinking … “What could I do to configure and maintain all these ProxySQL nodes without mistakes?”

There are many tools for this, like Ansible, Puppet, and Chef, but you will need to write and maintain scripts to do those tasks. This is really difficult for one person to administer.

Now, there is a native solution, built into ProxySQL, to create and administer a cluster in an easy way.

At the moment this feature is EXPERIMENTAL and subject to change. Think very carefully before installing it in production; in fact, I strongly recommend you wait. However, if you would like to start testing this feature, you need to install ProxySQL 1.4.2 or later.

This clustering feature is really useful if you have installed one ProxySQL per application instance, because all the changes in one of the ProxySQL nodes will be propagated to all the other ProxySQL nodes. You can also configure a “master-slave” style setup with ProxySQL clustering.

There are only 4 tables where you can make changes and propagate the configuration:

- mysql_query_rules

- mysql_servers

- mysql_users

- proxysql_servers

How does it work?

It’s easy. When you make a change like INSERT/DELETE/UPDATE on any of these tables, after running the command LOAD … TO RUNTIME, ProxySQL creates a new checksum of the table’s data and increments the version number in the table runtime_checksums_values. Below we can see an example.

    admin ((none))>SELECT name, version, FROM_UNIXTIME(epoch), checksum FROM runtime_checksums_values ORDER BY name;
    +-------------------+---------+----------------------+--------------------+
    | name              | version | FROM_UNIXTIME(epoch) | checksum           |
    +-------------------+---------+----------------------+--------------------+
    | admin_variables   | 0       | 1970-01-01 00:00:00  |                    |
    | mysql_query_rules | 1       | 2018-04-26 15:58:23  | 0x0000000000000000 |
    | mysql_servers     | 1       | 2018-04-26 15:58:23  | 0x0000000000000000 |
    | mysql_users       | 4       | 2018-04-26 18:36:12  | 0x2F35CAB62143AE41 |
    | mysql_variables   | 0       | 1970-01-01 00:00:00  |                    |
    | proxysql_servers  | 1       | 2018-04-26 15:58:23  | 0x0000000000000000 |
    +-------------------+---------+----------------------+--------------------+

Internally, all nodes monitor and communicate with all the other ProxySQL nodes. When a node detects that both the checksum and the version of a table have changed on a peer, it fetches a copy of the modified table, makes the same changes locally, loads the new configuration to RUNTIME so that it becomes visible to the connected applications, and automatically saves it to DISK for persistence.
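To make the detection step more concrete, here is a toy Python model of it. It is only a sketch under stated assumptions, not ProxySQL's actual decision logic, which is more involved: the class names, the "peer version is higher than ours" rule and the placeholder local checksum are hypothetical, and the threshold mirrors the admin-cluster_*_diffs_before_sync variables configured later in this post.

```python
from dataclasses import dataclass


@dataclass
class TableState:
    """Version, epoch and checksum of one configuration table on one node."""
    version: int
    epoch: int
    checksum: str


class PeerTracker:
    """Counts consecutive checks in which a peer's table differs from ours.

    Hypothetical helper: a simplified stand-in for what each node does
    for every peer and every synchronised table.
    """

    def __init__(self, diffs_before_sync=3):
        self.diffs_before_sync = diffs_before_sync
        self.diff_count = 0

    def check(self, local, peer):
        """Return True when the local node should fetch the table from the peer."""
        if peer.checksum == local.checksum:
            # Same content on both sides: nothing to sync, reset the counter.
            self.diff_count = 0
            return False
        self.diff_count += 1
        # Assumption: only pull from a peer that has a higher version, and
        # only once the difference has been seen enough times in a row.
        return (peer.version > local.version
                and self.diff_count >= self.diffs_before_sync)


# The local checksum below is a made-up placeholder value.
local = TableState(version=1, epoch=1525950968, checksum="0x0000000000000000")
peer = TableState(version=2, epoch=1525951873, checksum="0x2AF43564C9985EC7")
tracker = PeerTracker(diffs_before_sync=3)
decisions = [tracker.check(local, peer) for _ in range(3)]
print(decisions)
```

Waiting for several consecutive differences before syncing keeps a node from copying a configuration that is still being edited on the peer.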

The following setup creates a “synchronous cluster” so any changes to these 4 tables on any ProxySQL server will be replicated to all other ProxySQL nodes. Be careful!

How can I start testing this new feature?

1) To start we need to get at least 2 nodes. Download and install ProxySQL 1.4.2 or higher and start a clean version.

2) On all nodes, we need to update the following global variables. These changes will set the username and password used by each node’s internal communication to cluster1/clusterpass. These must be the same on all nodes in this cluster.

update global_variables set variable_value='admin:admin;cluster1:clusterpass' where variable_name='admin-admin_credentials';
update global_variables set variable_value='cluster1' where variable_name='admin-cluster_username';
update global_variables set variable_value='clusterpass' where variable_name='admin-cluster_password';
update global_variables set variable_value=200 where variable_name='admin-cluster_check_interval_ms';
update global_variables set variable_value=100 where variable_name='admin-cluster_check_status_frequency';
update global_variables set variable_value='true' where variable_name='admin-cluster_mysql_query_rules_save_to_disk';
update global_variables set variable_value='true' where variable_name='admin-cluster_mysql_servers_save_to_disk';
update global_variables set variable_value='true' where variable_name='admin-cluster_mysql_users_save_to_disk';
update global_variables set variable_value='true' where variable_name='admin-cluster_proxysql_servers_save_to_disk';
update global_variables set variable_value=3 where variable_name='admin-cluster_mysql_query_rules_diffs_before_sync';
update global_variables set variable_value=3 where variable_name='admin-cluster_mysql_servers_diffs_before_sync';
update global_variables set variable_value=3 where variable_name='admin-cluster_mysql_users_diffs_before_sync';
update global_variables set variable_value=3 where variable_name='admin-cluster_proxysql_servers_diffs_before_sync';
load admin variables to RUNTIME;
save admin variables to disk;
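Because the same statements have to be run on every node, it can help to generate them from a single dictionary. The following Python sketch only builds the SQL text; it does not connect to ProxySQL. The function name is hypothetical, and it assumes that quoting every value as a string is acceptable to the admin interface.

```python
def admin_variable_updates(settings):
    """Build the admin statements for a dict of variable name -> value.

    Hypothetical helper: returns SQL text only, nothing is executed.
    """
    statements = []
    for name, value in settings.items():
        # Double any single quote so the value stays a valid SQL literal.
        escaped = str(value).replace("'", "''")
        statements.append(
            "UPDATE global_variables SET variable_value='%s' "
            "WHERE variable_name='%s';" % (escaped, name)
        )
    statements.append("LOAD ADMIN VARIABLES TO RUNTIME;")
    statements.append("SAVE ADMIN VARIABLES TO DISK;")
    return statements


# A subset of the variables used in this post.
cluster_settings = {
    "admin-admin_credentials": "admin:admin;cluster1:clusterpass",
    "admin-cluster_username": "cluster1",
    "admin-cluster_password": "clusterpass",
    "admin-cluster_check_interval_ms": 200,
    "admin-cluster_mysql_users_diffs_before_sync": 3,
}

for statement in admin_variable_updates(cluster_settings):
    print(statement)
```

Keeping the values in one place makes it harder for two nodes to end up with different cluster credentials.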

3) Add all IPs from the other ProxySQL nodes into each other node:

INSERT INTO proxysql_servers (hostname,port,weight,comment) VALUES ('10.138.180.183',6032,100,'PRIMARY');

INSERT INTO proxysql_servers (hostname,port,weight,comment) VALUES ('10.138.244.108',6032,99,'SECONDARY');

INSERT INTO proxysql_servers (hostname,port,weight,comment) VALUES ('10.138.244.244',6032,98,'SECONDARY');

LOAD PROXYSQL SERVERS TO RUNTIME;

SAVE PROXYSQL SERVERS TO DISK;
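The same idea works for the peer list. This is a minimal Python sketch that prints the statements above from one list of nodes, so that every node receives an identical proxysql_servers table. The function name is hypothetical and the code only produces SQL text.

```python
def proxysql_server_inserts(nodes):
    """Build INSERT statements for (hostname, port, weight, comment) tuples.

    Hypothetical helper: returns SQL text only, nothing is executed.
    """
    statements = []
    for hostname, port, weight, comment in nodes:
        statements.append(
            "INSERT INTO proxysql_servers (hostname,port,weight,comment) "
            "VALUES ('%s',%d,%d,'%s');" % (hostname, port, weight, comment)
        )
    statements.append("LOAD PROXYSQL SERVERS TO RUNTIME;")
    statements.append("SAVE PROXYSQL SERVERS TO DISK;")
    return statements


# The three nodes used in this post.
nodes = [
    ("10.138.180.183", 6032, 100, "PRIMARY"),
    ("10.138.244.108", 6032, 99, "SECONDARY"),
    ("10.138.244.244", 6032, 98, "SECONDARY"),
]

for statement in proxysql_server_inserts(nodes):
    print(statement)
```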

At this moment, we have all nodes synced.

In the next example from the log file, we can see when node1 detected node2.

[root@proxysql1 ~]# $ tail /var/lib/proxysql/proxysql.log
...
2018-05-10 11:19:51 [INFO] Cluster: Fetching ProxySQL Servers from peer 10.138.244.108:6032 started
2018-05-10 11:19:51 [INFO] Cluster: Fetching ProxySQL Servers from peer 10.138.244.108:6032 completed
2018-05-10 11:19:51 [INFO] Cluster: Loading to runtime ProxySQL Servers from peer 10.138.244.108:6032
2018-05-10 11:19:51 [INFO] Destroyed Cluster Node Entry for host 10.138.148.242:6032
2018-05-10 11:19:51 [INFO] Cluster: Saving to disk ProxySQL Servers from peer 10.138.244.108:6032
2018-05-10 11:19:52 [INFO] Cluster: detected a new checksum for proxysql_servers from peer 10.138.180.183:6032, version 6, epoch 1525951191, checksum 0x3D819A34C06EF4EA . Not syncing yet ...
2018-05-10 11:19:52 [INFO] Cluster: checksum for proxysql_servers from peer 10.138.180.183:6032 matches with local checksum 0x3D819A34C06EF4EA , we won't sync.
2018-05-10 11:19:52 [INFO] Cluster: closing thread for peer 10.138.148.242:6032
2018-05-10 11:19:52 [INFO] Cluster: detected a new checksum for proxysql_servers from peer 10.138.244.244:6032, version 4, epoch 1525951163, checksum 0x3D819A34C06EF4EA . Not syncing yet ...
2018-05-10 11:19:52 [INFO] Cluster: checksum for proxysql_servers from peer 10.138.244.244:6032 matches with local checksum 0x3D819A34C06EF4EA , we won't sync
...

Another example is to add users to the table mysql_users. Remember these users are to enable MySQL connections between the application (frontend) and MySQL (backend).

We will add a new username and password on any server; in my test I’ll use node2:

admin proxysql2 ((none))>INSERT INTO mysql_users(username,password) VALUES ('user1','crazyPassword');

Query OK, 1 row affected (0.00 sec)

admin proxysql2 ((none))>LOAD MYSQL USERS TO RUNTIME;

Query OK, 0 rows affected (0.00 sec)

In the log file from node3, we can see the update immediately:

[root@proxysql3 ~]# $ tail /var/lib/proxysql/proxysql.log
...
2018-05-10 11:30:57 [INFO] Cluster: detected a new checksum for mysql_users from peer 10.138.244.108:6032, version 2, epoch 1525951873, checksum 0x2AF43564C9985EC7 . Not syncing yet ...
2018-05-10 11:30:57 [INFO] Cluster: detected a peer 10.138.244.108:6032 with mysql_users version 2, epoch 1525951873, diff_check 3. Own version: 1, epoch: 1525950968. Proceeding with remote sync
2018-05-10 11:30:57 [INFO] Cluster: detected a peer 10.138.244.108:6032 with mysql_users version 2, epoch 1525951873, diff_check 4. Own version: 1, epoch: 1525950968. Proceeding with remote sync
2018-05-10 11:30:57 [INFO] Cluster: detected peer 10.138.244.108:6032 with mysql_users version 2, epoch 1525951873
2018-05-10 11:30:57 [INFO] Cluster: Fetching MySQL Users from peer 10.138.244.108:6032 started
2018-05-10 11:30:57 [INFO] Cluster: Fetching MySQL Users from peer 10.138.244.108:6032 completed
2018-05-10 11:30:57 [INFO] Cluster: Loading to runtime MySQL Users from peer 10.138.244.108:6032
2018-05-10 11:30:57 [INFO] Cluster: Saving to disk MySQL Query Rules from peer 10.138.244.108:6032
2018-05-10 11:30:57 [INFO] Cluster: detected a new checksum for mysql_users from peer 10.138.244.244:6032, version 2, epoch 1525951857, checksum 0x2AF43564C9985EC7 . Not syncing yet ...
2018-05-10 11:30:57 [INFO] Cluster: checksum for mysql_users from peer 10.138.244.244:6032 matches with local checksum 0x2AF43564C9985EC7 , we won't sync.
2018-05-10 11:30:57 [INFO] Cluster: detected a new checksum for mysql_users from peer 10.138.180.183:6032, version 2, epoch 1525951886, checksum 0x2AF43564C9985EC7 . Not syncing yet ...
2018-05-10 11:30:57 [INFO] Cluster: checksum for mysql_users from peer 10.138.180.183:6032 matches with local checksum 0x2AF43564C9985EC7 , we won't sync.
...

What happens if some node is down?

In this example, we will find out what happens if one node is down or has a network glitch or some other issue. I’ll stop ProxySQL node3:

[root@proxysql3 ~]# service proxysql stop
Shutting down ProxySQL: DONE!

On ProxySQL node1, we can check that node3 is unreachable:

[root@proxysql1 ~]# tailf /var/lib/proxysql/proxysql.log
2018-05-10 11:57:33 ProxySQL_Cluster.cpp:180:ProxySQL_Cluster_Monitor_thread(): [WARNING] Cluster: unable to connect to peer 10.138.244.244:6032 . Error: Can't connect to MySQL server on '10.138.244.244' (107)
2018-05-10 11:57:33 ProxySQL_Cluster.cpp:180:ProxySQL_Cluster_Monitor_thread(): [WARNING] Cluster: unable to connect to peer 10.138.244.244:6032 . Error: Can't connect to MySQL server on '10.138.244.244' (107)
2018-05-10 11:57:33 ProxySQL_Cluster.cpp:180:ProxySQL_Cluster_Monitor_thread(): [WARNING] Cluster: unable to connect to peer 10.138.244.244:6032 . Error: Can't connect to MySQL server on '10.138.244.244' (107)

And another check can be run in any ProxySQL node like node2, for example:

admin proxysql2 ((none))>SELECT hostname, checksum, FROM_UNIXTIME(changed_at) changed_at, FROM_UNIXTIME(updated_at) updated_at FROM stats_proxysql_servers_checksums WHERE name='proxysql_servers' ORDER BY hostname;
+----------------+--------------------+---------------------+---------------------+
| hostname       | checksum           | changed_at          | updated_at          |
+----------------+--------------------+---------------------+---------------------+
| 10.138.180.183 | 0x3D819A34C06EF4EA | 2018-05-10 11:19:39 | 2018-05-10 12:01:59 |
| 10.138.244.108 | 0x3D819A34C06EF4EA | 2018-05-10 11:19:38 | 2018-05-10 12:01:59 |
| 10.138.244.244 | 0x3D819A34C06EF4EA | 2018-05-10 11:19:39 | 2018-05-10 11:56:59 |
+----------------+--------------------+---------------------+---------------------+
3 rows in set (0.00 sec)

In the previous result, we can see that node3 (10.138.244.244) is not being updated: its updated_at value lags behind the other nodes, when it should show a later datetime. This means that node3 is not running, is down, or is cut off by a network glitch.
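The check on updated_at is easy to automate. Below is a minimal Python sketch that takes (hostname, updated_at) pairs such as the ones returned by the query above and reports the peers that lag behind the most recently updated one. The function name and the 60 second threshold are assumptions chosen for illustration; fetching the rows from the admin interface is left out.

```python
from datetime import datetime, timedelta


def stale_peers(rows, max_lag=timedelta(seconds=60)):
    """Return the hostnames whose updated_at lags the newest one by more than max_lag.

    rows: iterable of (hostname, 'YYYY-MM-DD HH:MM:SS') pairs.
    """
    parsed = {
        host: datetime.strptime(stamp, "%Y-%m-%d %H:%M:%S")
        for host, stamp in rows
    }
    newest = max(parsed.values())
    return sorted(host for host, seen in parsed.items() if newest - seen > max_lag)


# The updated_at values shown in the result above.
rows = [
    ("10.138.180.183", "2018-05-10 12:01:59"),
    ("10.138.244.108", "2018-05-10 12:01:59"),
    ("10.138.244.244", "2018-05-10 11:56:59"),
]
print(stale_peers(rows))  # ['10.138.244.244']
```

Comparing against the newest peer rather than the local clock avoids false alarms when the monitoring host's clock differs from the ProxySQL nodes.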

At this point, any change to any of the tables, mysql_query_rules, mysql_servers, mysql_users, proxysql_servers, will be replicated between nodes 1 & 2.

In this next example, while node3 is offline, we will add another user to mysql_users table.

admin proxysql2 ((none))>INSERT INTO mysql_users(username,password) VALUES ('user2','passwordCrazy');

Query OK, 1 row affected (0.00 sec)

admin proxysql2 ((none))>LOAD MYSQL USERS TO RUNTIME;

Query OK, 0 rows affected (0.00 sec)

That change was propagated to node1:

[root@proxysql1 ~]# $ tail /var/lib/proxysql/proxysql.log
...
2018-05-10 12:12:36 [INFO] Cluster: detected a peer 10.138.244.108:6032 with mysql_users version 3, epoch 1525954343, diff_check 4. Own version: 2, epoch: 1525951886. Proceeding with remote sync
2018-05-10 12:12:36 [INFO] Cluster: detected peer 10.138.244.108:6032 with mysql_users version 3, epoch 1525954343
2018-05-10 12:12:36 [INFO] Cluster: Fetching MySQL Users from peer 10.138.244.108:6032 started
2018-05-10 12:12:36 [INFO] Cluster: Fetching MySQL Users from peer 10.138.244.108:6032 completed
2018-05-10 12:12:36 [INFO] Cluster: Loading to runtime MySQL Users from peer 10.138.244.108:6032
2018-05-10 12:12:36 [INFO] Cluster: Saving to disk MySQL Query Rules from peer 10.138.244.108:6032
...

Node3 has now been out of sync for about 25 minutes:

admin proxysql2 ((none))>SELECT hostname, checksum, FROM_UNIXTIME(changed_at) changed_at, FROM_UNIXTIME(updated_at) updated_at FROM stats_proxysql_servers_checksums WHERE name='mysql_users' ORDER BY hostname;
+----------------+--------------------+---------------------+---------------------+
| hostname       | checksum           | changed_at          | updated_at          |
+----------------+--------------------+---------------------+---------------------+
| 10.138.180.183 | 0x3D819A34C06EF4EA | 2018-05-10 11:19:39 | 2018-05-10 12:21:35 |
| 10.138.244.108 | 0x3D819A34C06EF4EA | 2018-05-10 11:19:38 | 2018-05-10 12:21:35 |
| 10.138.244.244 | 0x3D819A34C06EF4EA | 2018-05-10 11:19:39 | 2018-05-10 12:21:35 |
+----------------+--------------------+---------------------+---------------------+
3 rows in set (0.00 sec)

Let’s start node3 and check if the sync works. node3 should connect to the other nodes and get the last changes.

[root@proxysql3 ~]# tail /var/lib/proxysql/proxysql.log
...
2018-05-10 12:30:02 [INFO] Cluster: detected a peer 10.138.244.108:6032 with mysql_users version 3, epoch 1525954343, diff_check 3. Own version: 1, epoch: 1525955402. Proceeding with remote sync
2018-05-10 12:30:02 [INFO] Cluster: detected a peer 10.138.180.183:6032 with mysql_users version 3, epoch 1525954356, diff_check 3. Own version: 1, epoch: 1525955402. Proceeding with remote sync
…
2018-05-10 12:30:03 [INFO] Cluster: detected peer 10.138.180.183:6032 with mysql_users version 3, epoch 1525954356
2018-05-10 12:30:03 [INFO] Cluster: Fetching MySQL Users from peer 10.138.180.183:6032 started
2018-05-10 12:30:03 [INFO] Cluster: Fetching MySQL Users from peer 10.138.180.183:6032 completed
2018-05-10 12:30:03 [INFO] Cluster: Loading to runtime MySQL Users from peer 10.138.180.183:6032
2018-05-10 12:30:03 [INFO] Cluster: Saving to disk MySQL Query Rules from peer 10.138.180.183:6032

Looking at the status from the checksum table, we can see node3 is now up to date.

admin proxysql2 ((none))>SELECT hostname, checksum, FROM_UNIXTIME(changed_at) changed_at, FROM_UNIXTIME(updated_at) updated_at FROM stats_proxysql_servers_checksums WHERE name='mysql_users' ORDER BY hostname;
+----------------+--------------------+---------------------+---------------------+
| hostname       | checksum           | changed_at          | updated_at          |
+----------------+--------------------+---------------------+---------------------+
| 10.138.180.183 | 0x3928F574AFFF4C65 | 2018-05-10 12:12:24 | 2018-05-10 12:31:58 |
| 10.138.244.108 | 0x3928F574AFFF4C65 | 2018-05-10 12:12:23 | 2018-05-10 12:31:58 |
| 10.138.244.244 | 0x3928F574AFFF4C65 | 2018-05-10 12:30:19 | 2018-05-10 12:31:58 |
+----------------+--------------------+---------------------+---------------------+
3 rows in set (0.00 sec)

Now we have 3 ProxySQL nodes up to date. This example didn’t add any MySQL servers, hostgroups, etc, because the functionality is the same. The post is intended as an introduction to this new feature and how you can create and test a ProxySQL cluster.

Just remember that this is still an experimental feature and is subject to change with newer versions of ProxySQL.

Summary

This feature is really needed if you have more than one ProxySQL running for the same application in different instances. It is easy to maintain and configure for a single person and is easy to create and attach new nodes.

Hope you find this post helpful!

References

http://www.proxysql.com/blog/proxysql-cluster

http://www.proxysql.com/blog/proxysql-cluster-part2

http://www.proxysql.com/blog/proxysql-cluster-part3-mysql-servers

https://github.com/sysown/proxysql/wiki/ProxySQL-Cluster

The post ProxySQL Experimental Feature: Native ProxySQL Clustering appeared first on Percona Database Performance Blog.

MariaDB 10.3 support Oracle mode sequences

Sequences are used to request unique values on demand. The best use case for a sequence is a unique ID that can be used across multiple tables. Sequences are also helpful when you need an identifier before the actual row is inserted. With the usual approach of an automatically incrementing identifier, the value only becomes available after the row has been inserted, and it is only unique within its own table. MariaDB Server 10.3 implements sequences according to the SQL standard and, on top of the standard, is compatible with the way Oracle Database Server handles sequences.

To create a sequence in MariaDB 10.3 onwards, use a CREATE SEQUENCE statement:

MariaDB [MDB101]> CREATE SEQUENCE Seq1_100

-> START WITH 100

-> INCREMENT BY 1;

Query OK, 0 rows affected (0.015 sec)

This creates a sequence that starts at 100 and is incremented by 1 every time a value is requested from the sequence. The sequence will be visible among the tables in the database, i.e. if you run SHOW TABLES it will be there. You can use DESCRIBE on the sequence to see what columns it has.

To test out the usage of sequences let’s create a table:

MariaDB [MDB101]> CREATE TABLE TAB1 (

-> Col1 int(10) NOT NULL,

-> Col2 varchar(30) NOT NULL,

-> Col3 int(10) NOT NULL,

-> PRIMARY KEY (Col1)

-> );

Query OK, 0 rows affected (0.018 sec)

Since we want to use sequences this time, we did not put AUTO_INCREMENT on the Col1 column. Instead we will ask for the next value from the sequence in the INSERT statements:

MariaDB [MDB101]> INSERT INTO TAB1 (Col1, Col2, Col3) VALUES (NEXT VALUE FOR Seq1_100, 'India', 10);
Query OK, 1 row affected (0.011 sec)
MariaDB [MDB101]> INSERT INTO TAB1 (Col1, Col2, Col3) VALUES (NEXT VALUE FOR Seq1_100, 'Jakarta', 20);
Query OK, 1 row affected (0.008 sec)
MariaDB [MDB101]> INSERT INTO TAB1 (Col1, Col2, Col3) VALUES (NEXT VALUE FOR Seq1_100, 'Singapore', 20);
Query OK, 1 row affected (0.016 sec)
MariaDB [MDB101]> INSERT INTO TAB1 (Col1, Col2, Col3) VALUES (NEXT VALUE FOR Seq1_100, 'Japan', 30);
Query OK, 1 row affected (0.007 sec)

Instead of having the NEXT VALUE FOR in each INSERT statement, it could have been the default value of the column in this way:

MariaDB [MDB101]> ALTER TABLE TAB1 MODIFY Col1 int(10) NOT NULL DEFAULT NEXT VALUE FOR Seq1_100;
Query OK, 0 rows affected (0.007 sec)
Records: 0 Duplicates: 0 Warnings: 0

Running a SELECT over the TAB1 table will look like this:

MariaDB [MDB101]> SELECT * FROM TAB1;
+------+-----------+------+
| Col1 | Col2      | Col3 |
+------+-----------+------+
|  100 | India     |   10 |
|  101 | Jakarta   |   20 |
|  102 | Singapore |   20 |
|  103 | Japan     |   30 |
+------+-----------+------+
4 rows in set (0.000 sec)

As we can see, the Col1 column has been populated with numbers that start from 100 and are incremented by 1, as defined in the sequence’s CREATE statement. To get the last retrieved number from the sequence, PREVIOUS VALUE is used:

MariaDB [MDB101]> SELECT PREVIOUS VALUE FOR Seq1_100;
+-----------------------------+
| PREVIOUS VALUE FOR Seq1_100 |
+-----------------------------+
|                         103 |
+-----------------------------+
1 row in set (0.000 sec)

Another very useful option for sequences shipped with MariaDB 10.3 is CYCLE, which means that we start again from the beginning after reaching a certain value. For example, if there are 5 phases in a process that are done sequentially and then start again from the beginning, we could easily create a sequence to always be able to retrieve the number of the next phase:

MariaDB [MDB101]> CREATE SEQUENCE Seq1_100_c5

-> START WITH 100

-> INCREMENT BY 1

-> MAXVALUE = 200

-> CYCLE;

Query OK, 0 rows affected (0.012 sec)

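The sequence above starts at 100 and is incremented by 1 every time the next value is requested. When it reaches 200 (MAXVALUE), it wraps around (CYCLE). Note that a cycling sequence restarts from its MINVALUE, which defaults to 1 for an ascending sequence, so add MINVALUE 100 to the definition if the sequence should restart from 100.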
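The wrap-around behaviour can be modelled in a few lines of Python. This is only an illustrative sketch of an ascending sequence, not MariaDB code: the function and its parameters are hypothetical names that mirror the START WITH, INCREMENT BY, MINVALUE, MAXVALUE and CYCLE options, and it assumes that a cycling sequence restarts from MINVALUE, which is set to 100 explicitly here.

```python
from itertools import islice


def sequence(start, increment=1, minvalue=1, maxvalue=None, cycle=False):
    """Yield values like an ascending SQL sequence (positive increment only)."""
    value = start
    while True:
        yield value
        value += increment
        if maxvalue is not None and value > maxvalue:
            if not cycle:
                # Without CYCLE the sequence is simply exhausted.
                return
            # With CYCLE, wrap around to the minimum value.
            value = minvalue


# Start close to the maximum so the wrap-around is visible.
seq = sequence(start=198, increment=1, minvalue=100, maxvalue=200, cycle=True)
print(list(islice(seq, 5)))  # [198, 199, 200, 100, 101]
```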

We can also set the next value of a sequence, ALTER a sequence, or use sequences in Oracle mode with Oracle-specific syntax. To switch to Oracle mode, use:

MariaDB [MDB101]> SET SQL_MODE=ORACLE;
Query OK, 0 rows affected (0.000 sec)

After that you can retrieve the next value of a sequence in Oracle style:

MariaDB [MDB101]> SELECT Seq1_100.nextval;
+------------------+
| Seq1_100.nextval |
+------------------+
|              104 |
+------------------+
1 row in set (0.009 sec)

You can read more about sequences in the MariaDB documentation.

The post MariaDB 10.3 support Oracle mode sequences appeared first on MySQL Consulting, Support and Remote DBA Services By MinervaDB.

Benchmark of new cloud feature in MySQL Cluster 7.6

In previous blogs we have shown how MySQL Cluster can use the Read Backup feature to improve performance when the MySQL Server and the NDB data node are colocated.

There are two scenarios in a cloud setup where additional measures are needed to ensure localized read accesses even when using the Read Backup feature.

The first scenario is when data nodes and MySQL Servers are not colocated. In this case by default we have no notion of closeness between nodes in the cluster.

The second case is when we have multiple node groups and use colocated data nodes and MySQL Servers. In this case we have a notion of closeness to the data in the node group we are colocated with, but not to other node groups.

In a cloud setup the closeness is dependent on whether two nodes are in the same availability domain (availability zone in Amazon/Google) or not. In your own network other scenarios could exist.

In MySQL Cluster 7.6 we added a new feature where it is possible to configure nodes to be contained in a certain location domain. Nodes that are close to each other should be configured to be part of the same location domain. Nodes belonging to different location domains are always considered to be further away than the ones with the same location domain.

We will use this knowledge to always use a transaction coordinator placed in the same location domain and if possible we will always read from a replica placed in the same location domain as the transaction coordinator.

We use this feature to direct reads to a replica that is contained in the same availability domain.

This provides a much better throughput for read queries in MySQL Cluster when the data nodes and MySQL servers span multiple availability domains.
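The selection rule described above can be sketched as a small Python function. It is a toy model for illustration, not NDB's implementation: the function name, the tuple layout and the fallback to the first replica in the list are all assumptions.

```python
def pick_replica(replicas, coordinator_domain):
    """Pick the replica to read from.

    replicas: list of (node_id, location_domain_id) tuples, first entry
    being the default choice (assumption for this sketch).
    coordinator_domain: location domain id of the transaction coordinator.
    """
    for node_id, domain in replicas:
        if domain == coordinator_domain:
            # A replica in the same location domain is considered closest.
            return node_id
    # No local replica: fall back to the default one.
    return replicas[0][0]


# Three replicas, each in a different availability domain.
replicas = [(1, 1), (2, 2), (3, 3)]
print(pick_replica(replicas, coordinator_domain=2))  # 2
print(pick_replica(replicas, coordinator_domain=9))  # 1
```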

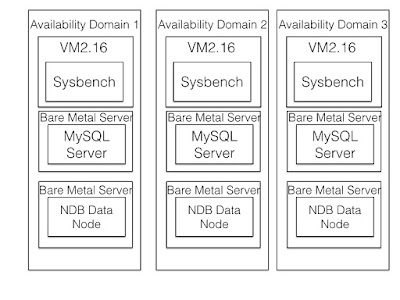

In the figure below we see the setup: each sysbench application works against one MySQL Server, and both of these are located in the same availability domain. The MySQL Server works against a set of 3 replicas in the NDB data nodes. Each of those 3 replicas resides in a different availability domain.

The graph above shows the difference between using location domain ids in this setup compared to not using them. Some measurements are missing simply because there wasn't enough time to complete this particular benchmark, but the measurements still show the improvements possible, and the improvement is above 40%.

The bare metal servers used for data nodes were DenseIO2 machines, and the MySQL Server used a bare metal server without any attached disks; not even block storage is needed in the MySQL Server instances. The MySQL Servers in an NDB setup are more or less stateless: all the required state is available in the NDB data nodes. Thus it is quite ok to start up a MySQL Server from scratch all the time. The exception is when the MySQL Server is used for replicating to another cluster; in this case the binlog state is required to be persistent on the MySQL Server.

MySQL 8.0 Group Replication Limitations

We build highly available and fault-tolerant MySQL database infrastructure operations for some of the largest internet properties on the planet. Our consulting team spends several hours daily researching MySQL documentation and MySQL blogs to understand the best possible ways to build optimal, scalable, highly available and reliable database infrastructure operations for planet-scale web properties. The most common approach to building a fault-tolerant system is to make all the components in the ecosystem redundant. To put it simply, a component can be removed and the system should continue to operate as expected. MySQL replication is a proven method to build redundant database infrastructure operations. Operationally these systems are highly complex, requiring maintenance and administration of several servers instead of just one, so you need senior DBAs to manage such systems.

MySQL Group Replication can operate both in single-primary mode with automatic primary election, where only one server accepts updates at a time, and in multi-primary mode, where all servers can accept updates, even if they are issued concurrently. The built-in group membership service keeps the view of the group consistent and available for all servers at any given point in time. Servers can leave and join the group, and the view is updated accordingly. If servers leave the group unexpectedly, the failure detection mechanism detects this and notifies the group that the view has changed. All this happens automatically!

For any transaction to commit, the majority of group members have to agree on the order of a given transaction in the global sequence of transactions. The decision to commit or abort a transaction is taken by each server individually, but all servers make the same decision. In the case of a network partition, when the members of the group are unable to reach agreement on a transaction, the system halts until the issue is resolved. This guarantees split-brain protection.
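The majority rule is simple arithmetic, and a short Python sketch makes the consequences visible. The function names are hypothetical and the code only illustrates the counting; it is not part of Group Replication.

```python
def has_majority(group_size, reachable):
    """True when the reachable members form a strict majority of the group."""
    return reachable > group_size // 2


def tolerated_failures(group_size):
    """Number of members that can fail while a majority remains."""
    return (group_size - 1) // 2


for size in (3, 4, 5, 7):
    print(size, tolerated_failures(size))
```

A group of 3 tolerates 1 failure and a group of 5 tolerates 2, while a group of 4 still tolerates only 1, which is why odd group sizes are the usual choice.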

All these fault tolerance mechanisms are powered by Group Communication System (GCS) protocols, a group membership service, and completely safe ordered message delivery system. Group Replication is powered by Paxos algorithm (https://en.wikipedia.org/wiki/Paxos_(computer_science)), which acts as the group communication engine.

So far we have covered the capabilities of Group Replication. The limitations of Group Replication are listed below:

- set --binlog-checksum=NONE – Group Replication cannot benefit from --binlog-checksum due to a design limitation of replication event checksums.

- Gap Locks not supported – The information about gap locks is not available outside InnoDB, so the certification process cannot acknowledge gap locks.

- Table Locks and Named Locks not supported – Certification process will not acknowledge table / named locks.

- Concurrent DDL versus DML Operations – Concurrent data definition statements and data manipulation statements executing against the same object but on different servers is not supported when using multi-primary mode. During execution of Data Definition Language (DDL) statements on an object, executing concurrent Data Manipulation Language (DML) on the same object but on a different server instance has the risk of conflicting DDL executing on different instances not being detected.

- Very Large Transactions. Individual transactions that result in GTID contents large enough that they cannot be copied between group members over the network within a 5 second window can cause failures in the group communication. To avoid this issue, try to limit the size of your transactions as much as possible. For example, split up files used with LOAD DATA INFILE into smaller chunks.

- Multi-primary Mode Deadlock. When a group is operating in multi-primary mode, SELECT … FOR UPDATE statements can result in a deadlock. This is because the lock is not shared across the members of the group, therefore the expectation for such a statement might not be reached.
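For the Very Large Transactions limitation above, splitting an input file before loading it is straightforward. The following Python sketch splits a text file into chunk files of a fixed number of lines, so that each chunk can be loaded with its own LOAD DATA INFILE statement. It is a minimal sketch under stated assumptions: every row occupies exactly one line (no embedded newlines in fields), and the chunk naming scheme (<file>.part0000, ...) is a hypothetical convention.

```python
from pathlib import Path


def split_file(path, lines_per_chunk):
    """Split a text file into chunk files of at most lines_per_chunk lines.

    Returns the list of chunk paths in order. Chunks are written next to
    the source file.
    """
    if lines_per_chunk < 1:
        raise ValueError("lines_per_chunk must be at least 1")
    source = Path(path)
    chunk_paths = []
    buffer = []

    def flush():
        # Hypothetical naming convention: rows.csv.part0000, rows.csv.part0001, ...
        chunk = source.with_name("%s.part%04d" % (source.name, len(chunk_paths)))
        with chunk.open("w", newline="") as out:
            out.writelines(buffer)
        chunk_paths.append(chunk)
        buffer.clear()

    # newline="" keeps the original line terminators untouched.
    with source.open("r", newline="") as handle:
        for line in handle:
            buffer.append(line)
            if len(buffer) == lines_per_chunk:
                flush()
    if buffer:
        flush()
    return chunk_paths
```

A call such as split_file("rows.csv", 10000) would produce one chunk per 10,000 rows; the right chunk size depends on row width and network speed, so it has to be found by testing.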

The post MySQL 8.0 Group Replication Limitations appeared first on MySQL Consulting, Support and Remote DBA Services By MinervaDB.

PXC loves firewalls (and System Admins loves iptables)

Let them stay together.

In recent years, I have quite often seen users who, when installing a product such as PXC, instead of spending five minutes to understand what to do, just run iptables -F and save.

In short, they remove any rules for their firewall.

With this post, I want to show you how easy it can be to do the right thing instead of putting your server at risk. I’ll show you how a slightly more complex setup like PXC (compared to MySQL), can be easily achieved without risky shortcuts.

iptables is the utility used to manage the chains of rules used by the Linux kernel firewall, which is your basic security tool.

Linux comes with a wonderful firewall built into the kernel. As an administrator, you can configure this firewall with interfaces like ipchains — which we are not going to cover — and iptables, which we shall talk about.

iptables is stateful, which means that the firewall can make decisions based on received packets. This means that I can, for instance, DROP a packet if it’s coming from bad-guy.com.

I can also create a set of rules that will either allow or reject the packet, or that will redirect it to another rule. This can potentially create a very complex scenario.

However, for today and for this use case let’s keep it simple… Looking at my own server:

iptables -v -L

Chain INPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

250K 29M ACCEPT all -- any any anywhere anywhere state RELATED,ESTABLISHED

6 404 ACCEPT icmp -- any any anywhere anywhere

0 0 ACCEPT all -- lo any anywhere anywhere

9 428 ACCEPT tcp -- any any anywhere anywhere state NEW tcp dpt:ssh

0 0 ACCEPT tcp -- any any anywhere anywhere state NEW tcp dpt:mysql

0 0 ACCEPT tcp -- any any anywhere anywhere

210 13986 REJECT all -- any any anywhere anywhere reject-with icmp-host-prohibited

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

0 0 REJECT all -- any any anywhere anywhere reject-with icmp-host-prohibited

Chain OUTPUT (policy ACCEPT 241K packets, 29M bytes)

pkts bytes target prot opt in out source destination

That’s not too bad, my server is currently accepting only SSH and packets on port 3306. Please note that I used the -v option to see more information like IN/OUT and that allows me to identify that actually row #3 is related to my loopback device, and as such it’s good to have it open.

The point is that if I try to run the PXC cluster with these settings it will fail, because the nodes will not be able to see each other.

A quite simple example, when trying to start the second node of the cluster:

2018-05-21T17:56:14.383686Z 0 [Note] WSREP: (3cb4b3a6, 'tcp://10.0.0.21:4567') connection to peer 584762e6 with addr tcp://10.0.0.23:4567 timed out, no messages seen in PT3S

Starting a new node will fail, given that the connectivity will not be established correctly. The Percona documentation has a notes section stating that these ports must be open for the cluster to work correctly:

- 3306 For MySQL client connections and State Snapshot Transfer that use the mysqldump method.

- 4567 For Galera Cluster replication traffic, multicast replication uses both UDP transport and TCP on this port.

- 4568 For Incremental State Transfer.

- 4444 For all other State Snapshot Transfer.

Of course, if you don’t know how to do it that could be a problem, but it is quite simple. Just use the following commands to add the needed rules:

iptables -I INPUT 2 --protocol tcp --match tcp --dport 3306 --source 10.0.0.1/24 --jump ACCEPT
iptables -I INPUT 3 --protocol tcp --match tcp --dport 4567 --source 10.0.0.1/24 --jump ACCEPT
iptables -I INPUT 4 --protocol tcp --match tcp --dport 4568 --source 10.0.0.1/24 --jump ACCEPT
iptables -I INPUT 5 --protocol tcp --match tcp --dport 4444 --source 10.0.0.1/24 --jump ACCEPT
iptables -I INPUT 6 --protocol udp --match udp --dport 4567 --source 10.0.0.1/24 --jump ACCEPT
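If you manage several clusters or subnets, the rules above can be generated instead of typed. This Python sketch only builds the command strings for review; it does not run iptables. The function name and its parameters are hypothetical, and the port list is the one given in this post.

```python
# Ports required by PXC, as listed above: (protocol, port).
PXC_PORTS = [
    ("tcp", 3306),  # MySQL clients and mysqldump-based SST
    ("tcp", 4567),  # Galera replication traffic
    ("tcp", 4568),  # Incremental State Transfer
    ("tcp", 4444),  # all other State Snapshot Transfer
    ("udp", 4567),  # Galera multicast replication
]


def pxc_iptables_rules(source, first_position=2):
    """Return the iptables commands that open the PXC ports for one source range.

    first_position is the rule number at which the first rule is inserted
    into the INPUT chain; each following rule goes one position later.
    """
    rules = []
    for offset, (protocol, port) in enumerate(PXC_PORTS):
        rules.append(
            "iptables -I INPUT %d --protocol %s --match %s --dport %d "
            "--source %s --jump ACCEPT"
            % (first_position + offset, protocol, protocol, port, source)
        )
    return rules


for rule in pxc_iptables_rules("10.0.0.1/24"):
    print(rule)
```

Printing the commands first lets you review them before running anything as root.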

Once you have done this, check the layout again and you should have something like this:

[root@galera1h1n5 gal571]# iptables -L
Chain INPUT (policy ACCEPT)
target     prot opt source               destination
ACCEPT     all  --  anywhere             anywhere             state RELATED,ESTABLISHED
ACCEPT     tcp  --  10.0.0.0/24          anywhere             tcp dpt:mysql
ACCEPT     tcp  --  10.0.0.0/24          anywhere             tcp dpt:tram
ACCEPT     tcp  --  10.0.0.0/24          anywhere             tcp dpt:bmc-reporting
ACCEPT     tcp  --  10.0.0.0/24          anywhere             tcp dpt:krb524
ACCEPT     udp  --  10.0.0.0/24          anywhere             udp dpt:tram
ACCEPT     icmp --  anywhere             anywhere
ACCEPT     tcp  --  anywhere             anywhere             tcp dpt:ssh
ACCEPT     tcp  --  anywhere             anywhere             tcp dpt:mysql
REJECT     all  --  anywhere             anywhere             reject-with icmp-port-unreachable
Chain FORWARD (policy ACCEPT)
target     prot opt source               destination
REJECT     all  --  anywhere             anywhere             reject-with icmp-port-unreachable
Chain OUTPUT (policy ACCEPT)
target     prot opt source               destination

Try to start the secondary node, and — tadaaa — the node will connect, will provision itself, and finally will start correctly.

All good? Well, not really: you still need to perform a final step. We also need to make our server accessible to the PMM monitoring agents.

You have PMM, right? If you don’t, take a look here and you will want it.

Anyhow PMM will not work correctly with the rules I have, and the result will be an empty set of graphs when accessing the server statistics. Luckily, PMM has a very easy way to help you identify the issue:

[root@galera1h1n5 gal571]# pmm-admin check-network
PMM Network Status

Server Address | 192.168.1.52
Client Address | 192.168.1.205

* System Time
NTP Server (0.pool.ntp.org)         | 2018-05-24 08:05:37 -0400 EDT
PMM Server                          | 2018-05-24 12:05:34 +0000 GMT
PMM Client                          | 2018-05-24 08:05:37 -0400 EDT
PMM Server Time Drift               | OK
PMM Client Time Drift               | OK
PMM Client to PMM Server Time Drift | OK

* Connection: Client --> Server
-------------------- -------
SERVER SERVICE       STATUS
-------------------- -------
Consul API           OK
Prometheus API       OK
Query Analytics API  OK

Connection duration | 1.051724ms
Request duration    | 311.924µs
Full round trip     | 1.363648ms

* Connection: Client <-- Server
-------------- ------------ -------------------- ------- ---------- ---------
SERVICE TYPE   NAME         REMOTE ENDPOINT      STATUS  HTTPS/TLS  PASSWORD
-------------- ------------ -------------------- ------- ---------- ---------
linux:metrics  galera1h1n5  192.168.1.205:42000  DOWN    NO         NO
mysql:metrics  gal571       192.168.1.205:42002  DOWN    NO         NO

When an endpoint is down it may indicate that the corresponding service is stopped (run 'pmm-admin list' to verify).
If it's running, check out the logs /var/log/pmm-*.log

When all endpoints are down but 'pmm-admin list' shows they are up and no errors in the logs,
check the firewall settings whether this system allows incoming connections from server to address:port in question.

Also you can check the endpoint status by the URL: http://192.168.1.52/prometheus/targets

What more could you want? You have all the information needed to debug and build your new rules. I just need to open ports 42000 and 42002 on my firewall:

iptables -I INPUT 7 --protocol tcp --match tcp --dport 42000 --source 192.168.1.1/24 --jump ACCEPT
iptables -I INPUT 8 --protocol tcp --match tcp --dport 42002 --source 192.168.1.1/24 --jump ACCEPT
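If a node exposes more exporters than the two in this example, the ports to open can be read straight from the check output instead of being picked out by eye. This is a rough sketch that assumes the column layout shown in the `pmm-admin check-network` output above (service type, name, remote endpoint, status). The helper name `down_ports` is made up for illustration.

```shell
#!/bin/sh
# Sketch: list the ports of endpoints that `pmm-admin check-network` reports as DOWN.
# Assumes the fourth column is STATUS and the third is host:port, as in the output above.
down_ports() {
    awk '$4 == "DOWN" { n = split($3, part, ":"); print part[n] }'
}

# Sample rows copied from the output above.
# On a real host the input would come from: pmm-admin check-network | down_ports
down_ports <<'EOF'
linux:metrics  galera1h1n5  192.168.1.205:42000  DOWN  NO  NO
mysql:metrics  gal571       192.168.1.205:42002  DOWN  NO  NO
EOF
```

For the sample rows this prints 42000 and 42002, the two ports opened in the rules above.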

Please note that we are handling the connectivity for PMM using a different range of IPs/subnet. This is because it is best practice to have PXC nodes communicate over a dedicated network/subnet (physical and logical).

Run the test again:

* Connection: Client <-- Server
-------------- ------------ -------------------- ------- ---------- ---------
SERVICE TYPE   NAME         REMOTE ENDPOINT      STATUS  HTTPS/TLS  PASSWORD
-------------- ------------ -------------------- ------- ---------- ---------
linux:metrics  galera1h1n5  192.168.1.205:42000  OK      YES        YES
mysql:metrics  gal571       192.168.1.205:42002  OK      YES        YES

Done. I just repeat this on all my nodes, and my firewall is set up to handle PXC-related security.

Now that all my settings are working well I can save my firewall’s rules:

iptables-save > /etc/sysconfig/iptables

For Ubuntu you may need some additional steps, as described at https://help.ubuntu.com/community/IptablesHowTo#Using_iptables-save.2Frestore_to_test_rules

There are some nice tools to help you even more, if you are very lazy, like UFW and the graphical one, GUFW. Developed to ease iptables firewall configuration, ufw provides a user friendly way to create an IPv4 or IPv6 host-based firewall. By default UFW is disabled in Ubuntu. Given that ultimately they use iptables, and their use is widely covered in other resources such as the official Ubuntu documentation, I won’t cover these here.

Conclusion

Please don’t make the mistake of flushing or ignoring your firewall when getting this right is just a matter of five commands. It’s easy enough for anyone to do, and it’s good enough to stop basic attacks.

Happy MySQL (and PXC) to everyone.

The post PXC loves firewalls (and System Admins loves iptables) appeared first on Percona Database Performance Blog.

Webinar Weds 6/13: Performance Analysis and Troubleshooting Methodologies for Databases

Please join Percona’s CEO, Peter Zaitsev as he presents Performance Analysis and Troubleshooting Methodologies for Databases on Wednesday, June 13th, 2018 at 11:00 AM PDT (UTC-7) / 2:00 PM EDT (UTC-4).

Have you heard about the USE Method (Utilization – Saturation – Errors), RED (Rate – Errors – Duration), or Golden Signals (Latency – Traffic – Errors – Saturation)?

In this presentation, we will talk briefly about these different-but-similar “focuses”. We’ll discuss how we can apply them to data infrastructure performance analysis, troubleshooting, and monitoring.

We will use MySQL as an example, but most of this talk applies to other database technologies too.

About Peter Zaitsev, CEO

Peter Zaitsev co-founded Percona and assumed the role of CEO in 2006. As one of the foremost experts on MySQL strategy and optimization, Peter leveraged both his technical vision and entrepreneurial skills to grow Percona from a two-person shop to one of the most respected open source companies in the business. With over 140 professionals in 30 plus countries, Peter’s venture now serves over 3000 customers – including the “who’s who” of internet giants, large enterprises and many exciting startups. Percona was named to the Inc. 5000 in 2013, 2014, 2015 and 2016.

Peter was an early employee at MySQL AB, eventually leading the company’s High Performance Group. A serial entrepreneur, Peter co-founded his first startup while attending Moscow State University where he majored in Computer Science. Peter is a co-author of High Performance MySQL: Optimization, Backups, and Replication, one of the most popular books on MySQL performance. Peter frequently speaks as an expert lecturer at MySQL and related conferences, and regularly posts on the Percona Database Performance Blog. He has also been tapped as a contributor to Fortune and DZone, and his recent ebook Practical MySQL Performance Optimization Volume 1 is one of percona.com’s most popular downloads. Peter lives in North Carolina with his wife and two children. In his spare time, Peter enjoys travel and spending time outdoors.

The post Webinar Weds 6/13: Performance Analysis and Troubleshooting Methodologies for Databases appeared first on Percona Database Performance Blog.

MySQL 8.0: Optimizing Small Partial Update of LOB in InnoDB

In this article I will explain the partial update optimizations for smaller (LOBs) in InnoDB. Small here qualifies the size of the modification and not the size of the LOB. For some background information about the partial update feature, kindly go through our previous posts on this (here, here and here).…

How to Benchmark Performance of MySQL & MariaDB using SysBench

What is SysBench? If you work with MySQL on a regular basis, then you have most probably heard of it. SysBench has been in the MySQL ecosystem for a long time. It was originally written by Peter Zaitsev, back in 2004. Its purpose was to provide a tool to run synthetic benchmarks of MySQL and the hardware it runs on. It was designed to run CPU, memory and I/O tests. It also had an option to execute an OLTP workload on a MySQL database. OLTP stands for online transaction processing, a typical workload for online applications like e-commerce, order entry or financial transaction systems.

In this blog post, we will focus on the SQL benchmark feature, but keep in mind that hardware benchmarks can also be very useful in identifying issues on database servers. For example, the I/O benchmark was intended to simulate InnoDB I/O workload, while the CPU tests simulate a highly concurrent, multi-threaded environment and include tests for mutex contention - something which also resembles a database type of workload.

SysBench history and architecture

As mentioned, SysBench was originally created in 2004 by Peter Zaitsev. Soon after, Alexey Kopytov took over its development. It reached version 0.4.12 and the development halted. After a long break Alexey started to work on SysBench again in 2016. Soon version 0.5 was released, with the OLTP benchmark rewritten to use LUA-based scripts. Then, in 2017, SysBench 1.0 was released. This was like day and night compared to the old 0.4.12 version. First and foremost, instead of hardcoded scripts, we now have the ability to customize benchmarks using LUA. For instance, Percona created a TPCC-like benchmark which can be executed using SysBench. Let’s take a quick look at the current SysBench architecture.

SysBench is a C binary which uses LUA scripts to execute benchmarks. Those scripts have to:

- Handle input from command line parameters

- Define all of the modes which the benchmark is supposed to use (prepare, run, cleanup)

- Prepare all of the data

- Define how the benchmark will be executed (what queries will look like etc)

Scripts can utilize multiple connections to the database. They can also process results, should you want to create complex benchmarks where queries depend on the result set of previous queries. With SysBench 1.0 it is possible to create latency histograms. It is also possible for the LUA scripts to catch and handle errors through error hooks. There’s support for parallelization in the LUA scripts: multiple queries can be executed in parallel, making, for example, provisioning much faster. Last but not least, multiple output formats are now supported. Previously, SysBench generated only human-readable output. Now it is possible to generate it as CSV or JSON, making it much easier to do post-processing and generate graphs using, for example, gnuplot, or to feed the data into Prometheus, Graphite or a similar datastore.

Why SysBench?

The main reason why SysBench became popular is the fact that it is simple to use. Someone without prior knowledge can start to use it within minutes. It also provides, by default, benchmarks which cover most of the cases - OLTP workloads, read-only or read-write, primary key lookups and primary key updates - all of which caused most of the issues for MySQL, up to MySQL 8.0. This was also a reason why SysBench was so popular in different benchmarks and comparisons published on the Internet. Those posts helped to promote this tool and made it the go-to synthetic benchmark for MySQL.
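To give a feel for how little is needed to get going, here is a minimal sketch of the three phases (prepare, run, cleanup) for the bundled `oltp_read_write` test, assuming SysBench 1.0 with its LUA scripts installed. It only prints the command lines. The host, credentials, schema name, table sizes and thread count are placeholders rather than recommendations, and the helper `sysbench_cmd` is made up for illustration.

```shell
#!/bin/sh
# Sketch: print the sysbench command line for each phase of the bundled oltp_read_write test.
# All connection details and sizes below are placeholder values.
DB_OPTS="--db-driver=mysql --mysql-host=127.0.0.1 --mysql-user=sbtest --mysql-password=secret --mysql-db=sbtest"
DATA_OPTS="--tables=8 --table-size=100000"

sysbench_cmd() {
    # $1 is the phase: prepare, run or cleanup
    case "$1" in
        run) extra=" --threads=8 --time=60 --report-interval=10" ;;
        *)   extra="" ;;
    esac
    echo "sysbench oltp_read_write $DB_OPTS $DATA_OPTS$extra $1"
}

for phase in prepare run cleanup; do
    sysbench_cmd "$phase"
done
```

Running the printed commands in that order would create the test tables, execute the workload for 60 seconds with a progress report every 10 seconds, and then drop the tables again.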

Another good thing about SysBench is that, since version 0.5 and incorporation of LUA, anyone can prepare any kind of benchmark. We already mentioned TPCC-like benchmark but anyone can craft something which will resemble her production workload. We are not saying it is simple, it will be most likely a time-consuming process, but having this ability is beneficial if you need to prepare a custom benchmark.

Being a synthetic benchmark, SysBench is not a tool which you can use to tune configurations of your MySQL servers (unless you prepared LUA scripts with a custom workload or your workload happens to be very similar to the benchmark workloads that SysBench comes with). What it is great for is comparing the performance of different hardware. You can easily compare the performance of, let’s say, different types of nodes offered by your cloud provider and the maximum QPS (queries per second) they offer. Knowing that metric and knowing what you pay for a given node, you can then calculate an even more important metric - QP$ (queries per dollar). This will allow you to identify what node type to use when building a cost-efficient environment. Of course, SysBench can also be used for initial tuning and assessing the feasibility of a given design. Let’s say we build a Galera cluster spanning the globe - North America, EU, Asia. How many inserts per second can such a setup handle? What would be the commit latency? Does it even make sense to do a proof of concept, or is network latency high enough that even a simple workload will not work as you would expect?
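As a worked example of the QP$ idea, one reasonable reading is "queries served per dollar spent", that is, sustained QPS multiplied by 3600 seconds and divided by the hourly node price. The sketch below uses that assumed formula. The node names, QPS figures and prices are invented for illustration only, and `qp_dollar` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: queries per dollar = sustained QPS * 3600 / price per hour.
# This formula is an assumption; the figures below are made up.
qp_dollar() {
    # $1 = measured QPS, $2 = node price in dollars per hour
    awk -v qps="$1" -v price="$2" 'BEGIN { printf "%.0f\n", qps * 3600 / price }'
}

echo "node-small: $(qp_dollar 5000 0.25) queries per dollar"
echo "node-large: $(qp_dollar 12000 1.00) queries per dollar"
```

With these made-up numbers the smaller node delivers 72000000 queries per dollar against 43200000 for the larger one, so the node with the lower peak QPS is the more cost-efficient choice.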