The Question

Recently, a customer asked us:

How would we manually move the relay role from a failing node to a slave in a Composite Tungsten Cluster passive site?

The Answer

The Long and the Short of It

There are two ways to handle this procedure. One is short and reasonably automated, and the other is much more detailed and manual.

SHORT

Below are the cctrl commands for the basic, short version. Aside from the policy changes, it comes down to three key commands (datasource {node} fail, failover, and recover):

use west

set policy maintenance

datasource db4 fail

failover

recover

set policy automatic
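As a small illustration, the short sequence above can be generated for any service and node name. This is only a sketch: the function name and its parameters are our own invention for this post, not part of Tungsten, and the result is meant to be reviewed before anyone types it into cctrl.

```python
from typing import List


def short_relay_failover_commands(service: str, failing_relay: str) -> List[str]:
    """Return the cctrl commands for the short procedure, in order.

    Hypothetical helper: it only builds strings and never talks to a cluster.
    """
    return [
        f"use {service}",
        "set policy maintenance",            # keep the Manager from interfering
        f"datasource {failing_relay} fail",  # mark the current relay as failed
        "failover",                          # let the cluster pick a new relay
        "recover",                           # bring the old relay back as a slave
        "set policy automatic",              # hand control back to the Manager
    ]


if __name__ == "__main__":
    print("\n".join(short_relay_failover_commands("west", "db4")))
```

Called with "west" and "db4", it reproduces the six commands listed above.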

LONG

Below is the list of cctrl commands that would be run for the extended, manual version:

use west

set policy maintenance

datasource db6 shun

datasource db4 offline

datasource db4 relay

replicator db5 offline

replicator db5 slave db4

replicator db5 online

replicator db6 offline

replicator db6 slave db4

replicator db6 online

datasource db6 slave

datasource db6 welcome

datasource db6 online

set policy automatic
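The manual sequence follows a regular pattern: shun the old relay, promote the new one, repoint each remaining replicator, then welcome the old relay back as a slave. A minimal sketch of that pattern is below. The function and argument names are hypothetical, and the code only builds the command strings for review; it does not run anything against a cluster.

```python
from typing import Iterable, List


def manual_relay_move_commands(
    service: str, old_relay: str, new_relay: str, other_slaves: Iterable[str]
) -> List[str]:
    """Return the cctrl commands for the manual procedure, in order."""
    cmds = [
        f"use {service}",
        "set policy maintenance",
        f"datasource {old_relay} shun",
        f"datasource {new_relay} offline",
        f"datasource {new_relay} relay",
    ]
    # Repoint the remaining slaves first, then the old relay's replicator.
    for node in list(other_slaves) + [old_relay]:
        cmds += [
            f"replicator {node} offline",
            f"replicator {node} slave {new_relay}",
            f"replicator {node} online",
        ]
    # Finally change the old relay's datasource role and bring it back.
    cmds += [
        f"datasource {old_relay} slave",
        f"datasource {old_relay} welcome",
        f"datasource {old_relay} online",
        "set policy automatic",
    ]
    return cmds


if __name__ == "__main__":
    print("\n".join(manual_relay_move_commands("west", "db6", "db4", ["db5"])))
```

With db6 as the old relay, db4 as the new relay, and db5 as the only other slave, the output matches the list above line for line.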

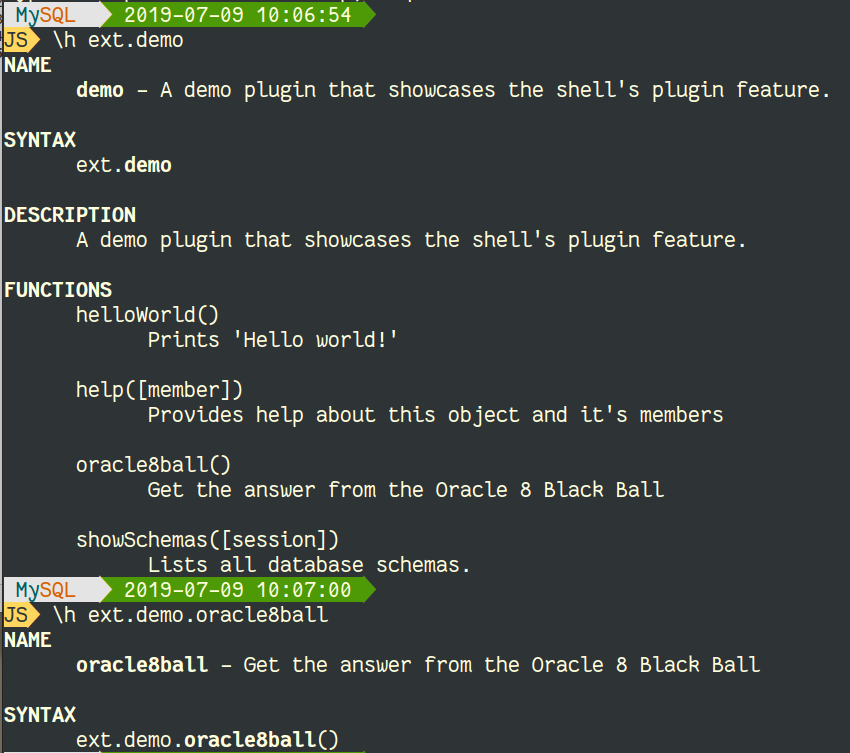

Full Procedure for SHORT (Automatic)

First, enable Maintenance mode to keep the Manager from interfering:

shell> cctrl

[LOGICAL] /west > set policy maintenance

policy mode is now MAINTENANCE

Tell the cluster that node db4 has failed using the datasource {node} fail command:

[LOGICAL] /west > datasource db4 fail

WARNING: This is an expert-level command:

Incorrect use may cause data corruption

or make the cluster unavailable.

Do you want to continue? (y/n)> y

DataSource 'db4@west' set to FAILED

Here is the state of all nodes after failing node db4:

[LOGICAL] /west > ls

DATASOURCES:

+---------------------------------------------------------------------------------+

|db4(relay:FAILED(MANUALLY-FAILED), progress=243050, latency=0.523) |

|STATUS [CRITICAL] [2019/07/12 02:57:50 PM UTC] |

|REASON[MANUALLY-FAILED] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=relay, master=db1, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db5(slave:ONLINE, progress=242883, latency=0.191) |

|STATUS [OK] [2019/07/12 02:57:01 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=slave, master=db4, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db6(slave:ONLINE, progress=242889, latency=0.309) |

|STATUS [OK] [2019/07/12 02:57:01 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=slave, master=db4, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=0, active=0) |

+---------------------------------------------------------------------------------+
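If you capture ls output in a script, the first line of each datasource box carries the role, state, progress, and latency. Here is a rough sketch of pulling those fields out with a regular expression. The pattern is our own and assumes the layout shown in this post; other versions may format the listing differently.

```python
import re
from typing import Dict, Optional

# Matches lines such as:
#   |db4(relay:FAILED(MANUALLY-FAILED), progress=243050, latency=0.523) |
HEADER = re.compile(
    r"^\|(?P<name>[\w.-]+)\((?P<role>\w+):(?P<state>\w+)"
    r"(?:\((?P<reason>[^)]*)\))?"
    r", progress=(?P<progress>-?\d+), latency=(?P<latency>-?[\d.]+)\)"
)


def parse_datasource_header(line: str) -> Optional[Dict[str, object]]:
    """Parse one datasource header line; return None for any other line."""
    match = HEADER.match(line.strip())
    if match is None:
        return None
    info: Dict[str, object] = dict(match.groupdict())
    info["progress"] = int(match.group("progress"))
    info["latency"] = float(match.group("latency"))
    return info


if __name__ == "__main__":
    sample = "|db4(relay:FAILED(MANUALLY-FAILED), progress=243050, latency=0.523) |"
    print(parse_datasource_header(sample))
```

Lines that are not datasource headers (borders, STATUS, MANAGER, and so on) return None, so the function can be applied to every line of a captured listing.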

Next, tell the cluster to pick a new relay automatically using the failover command:

[LOGICAL] /west > failover

SELECTED SLAVE: db5@west

SET POLICY: MAINTENANCE => MAINTENANCE

Savepoint failover_0(cluster=west, source=db4.continuent.com, created=2019/07/12 14:58:56 UTC) created

PRIMARY IS REMOTE. USING 'thls://db1:2112/' for the MASTER URI

SHUNNING PREVIOUS MASTER 'db4@west'

PUT THE NEW RELAY 'db5@west' ONLINE

FAILOVER TO 'db5' WAS COMPLETED

Here is the state of all nodes after performing the failover:

[LOGICAL] /west > ls

DATASOURCES:

+---------------------------------------------------------------------------------+

|db4(relay:SHUNNED(FAILED-OVER-TO-db5), progress=249356, latency=0.698) |

|STATUS [SHUNNED] [2019/07/12 02:58:56 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=relay, master=db1, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db5(relay:ONLINE, progress=249404, latency=0.326) |

|STATUS [OK] [2019/07/12 02:59:01 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=relay, master=db1, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db6(slave:ONLINE, progress=249422, latency=0.584) |

|STATUS [OK] [2019/07/12 02:59:01 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=slave, master=db5, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=0, active=0) |

+---------------------------------------------------------------------------------+

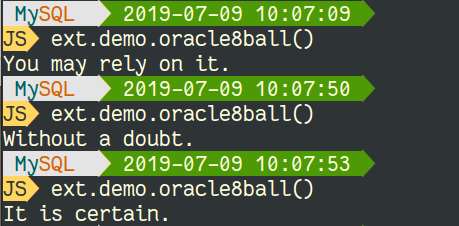

Now “fix” the db4 node and welcome it back into the cluster using the recover command:

[LOGICAL] /west > recover

RECOVERING DATASERVICE 'west

FOUND PHYSICAL DATASOURCE TO RECOVER: 'db4@west'

RECOVERING DATASOURCE 'db4@west'

VERIFYING THAT WE CAN CONNECT TO DATA SERVER 'db4'

Verified that DB server notification 'db4' is in state 'ONLINE'

DATA SERVER 'db4' IS NOW AVAILABLE FOR CONNECTIONS

RECOVERING 'db4@west' TO A SLAVE USING 'db5@west' AS THE MASTER

SETTING THE ROLE OF DATASOURCE 'db4@west' FROM 'relay' TO 'slave'

RECOVERY OF DATA SERVICE 'west' SUCCEEDED

RECOVERED 1 DATA SOURCES IN SERVICE 'west'

Here is the state of all nodes after performing the recover command:

[LOGICAL] /west > ls

DATASOURCES:

+---------------------------------------------------------------------------------+

|db5(relay:ONLINE, progress=252074, latency=0.365) |

|STATUS [OK] [2019/07/12 02:59:01 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=relay, master=db1, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db4(slave:ONLINE, progress=252249, latency=0.726) |

|STATUS [OK] [2019/07/12 02:59:57 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=slave, master=db5, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db6(slave:ONLINE, progress=252093, latency=0.639) |

|STATUS [OK] [2019/07/12 02:59:01 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=slave, master=db5, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=0, active=0) |

+---------------------------------------------------------------------------------+

Finally, return the cluster to Automatic mode so the Manager will detect problems and react automatically:

[LOGICAL] /west > set policy automatic

policy mode is now AUTOMATIC

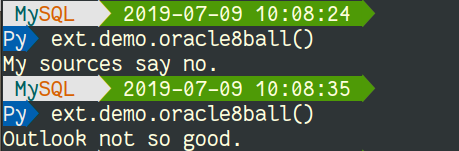

Full Procedure for LONG (Manual)

In the example below, node db6 is the current relay, with db4 and db5 as slaves.

To manually promote a current slave (db4 in this case) to relay in place of db6, follow the steps below, starting by enabling Maintenance mode:

[LOGICAL] /west > set policy maintenance

STEP 1. Shun node db6, the current relay

Tell the cluster to shun the current relay node db6 using the datasource {node} shun command:

[LOGICAL] /west > datasource db6 shun

WARNING: This is an expert-level command:

Incorrect use may cause data corruption

or make the cluster unavailable.

Do you want to continue? (y/n)> y

DataSource 'db6@west' set to SHUNNED

Here is the state of all nodes after performing the shun command:

[LOGICAL] /west > ls

DATASOURCES:

+---------------------------------------------------------------------------------+

|db6(relay:SHUNNED(MANUALLY-SHUNNED), progress=28973, latency=0.649) |

|STATUS [SHUNNED] [2019/07/12 02:06:08 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=relay, master=db1, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=0, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db4(slave:ONLINE, progress=28909, latency=0.707) |

|STATUS [OK] [2019/07/08 12:54:37 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=slave, master=db6, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db5(slave:ONLINE, progress=28965, latency=0.570) |

|STATUS [OK] [2019/07/08 12:54:43 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=slave, master=db6, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+

STEP 2. Process node db4, the new relay

Take datasource db4 offline and change the role to relay:

[LOGICAL] /west > datasource db4 offline

DataSource 'db4@west' is now OFFLINE

[LOGICAL] /west > datasource db4 relay

WARNING: This is an expert-level command:

Incorrect use may cause data corruption

or make the cluster unavailable.

Do you want to continue? (y/n)> y

VERIFYING THAT WE CAN CONNECT TO DATA SERVER 'db4'

Verified that DB server notification 'db4' is in state 'ONLINE'

DATA SERVER 'db4' IS NOW AVAILABLE FOR CONNECTIONS

PRIMARY IS REMOTE. USING 'thls://db1:2112/' for the MASTER URI

REPLICATOR 'db4' IS NOW USING MASTER CONNECT URI 'thls://db1:2112/'

Replicator 'db4@west' is now ONLINE

DataSource 'db4@west' is now OFFLINE

DATASOURCE 'db4@west' IS NOW A RELAY

Here is the state of all nodes after performing the offline and relay commands:

[LOGICAL] /west > ls

DATASOURCES:

+---------------------------------------------------------------------------------+

|db4(relay:ONLINE, progress=34071, latency=0.869) |

|STATUS [OK] [2019/07/12 02:06:55 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=relay, master=db1, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db6(relay:SHUNNED(MANUALLY-SHUNNED), progress=34133, latency=0.708) |

|STATUS [SHUNNED] [2019/07/12 02:06:08 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=relay, master=db1, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=0, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db5(slave:ONLINE, progress=34125, latency=0.649) |

|STATUS [OK] [2019/07/08 12:54:43 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=slave, master=db6, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+
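Note that in the listing above db5 still reports master=db6, which is why the remaining replicators have to be repointed next. A check like this can be scripted against captured ls output. The sketch below uses our own hypothetical function names and regular expressions, and assumes the listing layout shown in this post.

```python
import re
from typing import Dict, List

DS = re.compile(r"^\|(?P<name>[\w.-]+)\((?P<role>\w+):(?P<state>\w+)")
REPL = re.compile(
    r"REPLICATOR\(role=(?P<role>\w+), master=(?P<master>[\w.-]+), state=(?P<state>\w+)\)"
)


def parse_ls(text: str) -> Dict[str, Dict[str, str]]:
    """Map each datasource name to its role, state, and replicator master."""
    nodes: Dict[str, Dict[str, str]] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        ds = DS.match(line)
        if ds:
            current = ds.group("name")
            nodes[current] = {"role": ds.group("role"), "state": ds.group("state")}
            continue
        repl = REPL.search(line)
        if repl and current is not None:
            nodes[current]["master"] = repl.group("master")
    return nodes


def slaves_not_following_relay(nodes: Dict[str, Dict[str, str]]) -> List[str]:
    """Return slaves whose replicator master is not the single ONLINE relay."""
    relays = [n for n, d in nodes.items()
              if d["role"] == "relay" and d["state"] == "ONLINE"]
    if len(relays) != 1:
        raise ValueError(f"expected exactly one ONLINE relay, found {relays}")
    relay = relays[0]
    return sorted(n for n, d in nodes.items()
                  if d["role"] == "slave" and d.get("master") != relay)
```

Fed the listing above, the second function would flag db5. Once every slave has been repointed to db4, it returns an empty list.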

STEP 3. Process node db5, a slave

Tell the replicator on db5 to go offline, then configure it to be a slave of new relay node db4, and then bring it right back online:

[LOGICAL] /west > replicator db5 offline

Replicator 'db5' is now OFFLINE

[LOGICAL] /west > replicator db5 slave db4

WARNING: This is an expert-level command:

Incorrect use may cause data corruption

or make the cluster unavailable.

Do you want to continue? (y/n)> y

Replicator 'db5' is now a slave of replicator 'db4'

[LOGICAL] /west > replicator db5 online

Replicator 'db5@west' set to go ONLINE

Here is the state of all nodes after performing the commands:

[LOGICAL] /west > ls

DATASOURCES:

+---------------------------------------------------------------------------------+

|db4(relay:ONLINE, progress=70120, latency=0.637) |

|STATUS [OK] [2019/07/12 02:06:55 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=relay, master=db1, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db6(relay:SHUNNED(MANUALLY-SHUNNED), progress=70189, latency=0.557) |

|STATUS [SHUNNED] [2019/07/12 02:06:08 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=relay, master=db1, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=0, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db5(slave:ONLINE, progress=70177, latency=0.456) |

|STATUS [OK] [2019/07/08 12:54:43 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=slave, master=db4, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+

STEP 4. Process node db6, the OLD relay, and configure it to be a slave of db4, the NEW relay

Bring the replicator on node db6 offline:

[LOGICAL] /west > replicator db6 offline

Replicator 'db6' is now OFFLINE

Change the role of the replicator on node db6 to a slave of node db4 (the new relay):

[LOGICAL] /west > replicator db6 slave db4

WARNING: This is an expert-level command:

Incorrect use may cause data corruption

or make the cluster unavailable.

Do you want to continue? (y/n)> y

Replicator 'db6' is now a slave of replicator 'db4'

Bring the replicator on node db6 online:

[LOGICAL] /west > replicator db6 online

Replicator 'db6@west' set to go ONLINE

Change the role of datasource db6 to a slave:

[LOGICAL] /west > datasource db6 slave

WARNING: This is an expert-level command:

Incorrect use may cause data corruption

or make the cluster unavailable.

Do you want to continue? (y/n)> y

Datasource 'db6' now has role 'slave'

Welcome datasource db6 back into the cluster. Once welcomed, it will be in the OFFLINE state.

[LOGICAL] /west > datasource db6 welcome

WARNING: This is an expert-level command:

Incorrect use may cause data corruption

or make the cluster unavailable.

Do you want to continue? (y/n)> y

DataSource 'db6@west' is now OFFLINE

Bring datasource db6 online:

[LOGICAL] /west > datasource db6 online

Setting server for data source 'db6' to READ-ONLY

+---------------------------------------------------------------------------------+

|db6 |

+---------------------------------------------------------------------------------+

|Variable_name Value |

|read_only ON |

+---------------------------------------------------------------------------------+

DataSource 'db6@west' is now ONLINE

At this point the cluster is fully healthy again and in the desired configuration:

[LOGICAL] /west > ls

DATASOURCES:

+---------------------------------------------------------------------------------+

|db4(relay:ONLINE, progress=104957, latency=0.440) |

|STATUS [OK] [2019/07/12 02:06:55 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=relay, master=db1, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db5(slave:ONLINE, progress=105014, latency=0.242) |

|STATUS [OK] [2019/07/08 12:54:43 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=slave, master=db4, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=2, active=0) |

+---------------------------------------------------------------------------------+

+---------------------------------------------------------------------------------+

|db6(slave:ONLINE, progress=105018, latency=0.300) |

|STATUS [OK] [2019/07/12 02:20:10 PM UTC] |

+---------------------------------------------------------------------------------+

| MANAGER(state=ONLINE) |

| REPLICATOR(role=slave, master=db4, state=ONLINE) |

| DATASERVER(state=ONLINE) |

| CONNECTIONS(created=0, active=0) |

+---------------------------------------------------------------------------------+

Finally, return the cluster to Automatic mode so the Manager will detect problems and react automatically:

[LOGICAL] /west > set policy automatic

policy mode is now AUTOMATIC

The Library

Please read the docs!

For more information about using various cctrl commands, please visit the docs page at https://docs.continuent.com/tungsten-clustering-6.0/cmdline-tools-cctrl-commands.html

For more information about Tungsten clusters, please visit https://docs.continuent.com.

Summary

The Wrap-Up

In this blog post we discussed two ways to move the relay role to another node in a Composite Tungsten Cluster.

Tungsten Clustering is the most flexible, performant global database layer available today. Use it beneath your SaaS offering as a strong base upon which to grow your worldwide business!

For more information, please visit https://www.continuent.com/solutions

Want to learn more or run a POC? Contact us.