A MySQL customer of FromDual has different manufacturing plants spread across the globe. They are operated by local companies. FromDuals customer wants to maintain the manufacturing receipts centralized in a MySQL database in the Head Quarter in Europe. Each manufacturing plant should only see their specific data.

![Worldwide process controlling with MySQL gtid_replication_customer.png]()

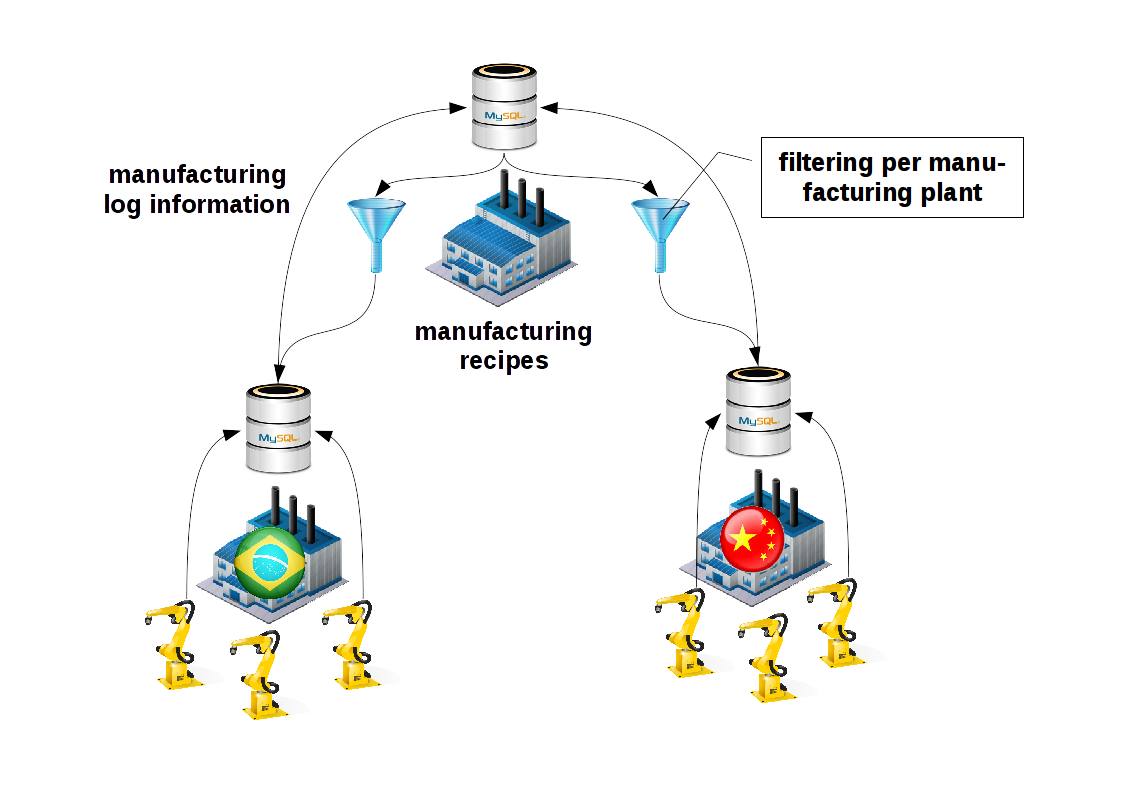

Manufacturing log information should be reported backup to European Head Quarter MySQL database.

The process was designed as follows:

![Distribution and filtering of data with MySQL gtid_replication_production_plant.png]()

Preparation of Proof of Concept (PoC)

To simulate all cases we need different schemas. Some which should be replicated, some which should NOT be replicated:

CREATE DATABASE finance;

CREATE TABLE finance.accounting (

`id` int(10) unsigned NOT NULL AUTO_INCREMENT,

`data` varchar(255) DEFAULT NULL,

`ts` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`id`),

KEY `data_rename` (`data`)

);

CREATE DATABASE crm;

CREATE TABLE crm.customer (

`id` int(10) unsigned NOT NULL AUTO_INCREMENT,

`data` varchar(255) DEFAULT NULL,

`ts` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`id`),

KEY `data_rename` (`data`)

);

CREATE DATABASE erp;

-- Avoid specifying Storage Engine here!!!

CREATE TABLE erp.manufacturing_data (

id INT UNSIGNED NOT NULL AUTO_INCREMENT

, manufacture_plant VARCHAR(32)

, manufacture_info VARCHAR(255)

, PRIMARY KEY (id)

, KEY (manufacture_plant)

);

CREATE TABLE erp.manufacturing_log (

id INT UNSIGNED NOT NULL AUTO_INCREMENT

, manufacture_plant VARCHAR(32)

, log_data VARCHAR(255)

, PRIMARY KEY (id)

, KEY (manufacture_plant)

);

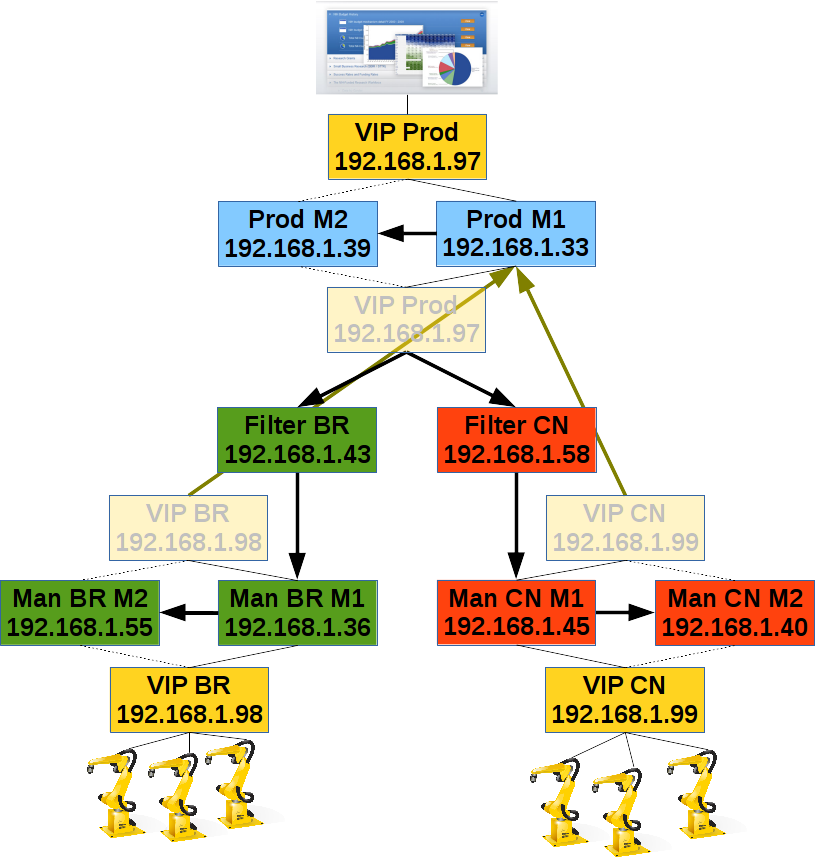

MySQL replication architecture

Before you start with such complicated MySQL set-ups it is recommended to make a little sketch of what you want to build:

![Design of MySQL databses gtid_replication_master_slave.png]()

Preparing the Production Master database (Prod M1)

To make use of all the new and cool features of MySQL we used the new GTID replication. First we set up a Master (Prod M1) and its fail-over System (Prod M2) in the customers Head Quarter:

# /etc/my.cnf

[mysqld]

binlog_format = row # optional

log_bin = binary-log # mandatory, also on Slave!

log_slave_updates = on # mandatory

gtid_mode = on # mandatory

enforce_gtid_consistency = on # mandatory

server-id = 39 # mandatory

This step requires a system restart (one minute downtime).

Preparing the Production Master standby database (Prod M2)

On Master (Prod M1):

GRANT REPLICATION SLAVE ON *.* TO 'replication'@'192.168.1.%' IDENTIFIED BY 'secret';

mysqldump -u root --set-gtid-purged=on --master-data=2 --all-databases --triggers --routines --events > /tmp/full_dump.sql

On Slave (Prod M2):

CHANGE MASTER TO MASTER_HOST='192.168.1.39', MASTER_PORT=3306

, MASTER_USER='replication', MASTER_PASSWORD='secret'

, MASTER_AUTO_POSITION=1;

RESET MASTER; -- On SLAVE!

system mysql -u root < /tmp/full_dump.sql

START SLAVE;

To make it easier for a Slave to connect to its master we set a VIP in front of those 2 database servers (VIP Prod). This VIP should be used by all applications in the head quarter and also the filter engines.

Set-up filter engines (Filter BR and Filter CN)

To make sure every manufacturing plant sees only the data it is allowed to see we need a filtering engine between the production site and the manufacturing plant (Filter BR and Filter CN).

To keep this filter engine lean we use a MySQL instance with all tables converted to the Blackhole Storage Engine:

# /etc/my.cnf

[mysqld]

binlog_format = row # optional

log_bin = binary-log # mandatory, also on Slave!

log_slave_updates = on # mandatory

gtid_mode = on # mandatory

enforce_gtid_consistency = on # mandatory

server-id = 36 # mandatory

default_storage_engine = blackhole

On the production master (Prod M1) we get the data as follows:

mysqldump -u root --set-gtid-purged=on --master-data=2 --triggers --routines --events --no-data --databases erp > /tmp/erp_dump_nd.sql

The Filter Engines (Filter BR and CN) are set-up as follows::

-- Here we can use the VIP!

CHANGE MASTER TO master_host='192.168.1.33', master_port=3306

, master_user='replication', master_password='secret'

, master_auto_position=1;

RESET MASTER; -- On SLAVE!

system cat /tmp/erp_dump_nd.sql | sed 's/ ENGINE=[a-zA-Z]*/ ENGINE=blackhole/' | mysql -u root

START SLAVE;

Do not forget to also create the replication user on the filter engines.

GRANT REPLICATION SLAVE ON *.* TO 'replication'@'192.168.1.%' IDENTIFIED BY 'secret';

Filtering out all non ERP schemata

We only want the erp schema to be replicated to the manufacturing plants, not the crm or the finance application. This we achieve with the following option on the filter engines:

# /etc/my.cnf

[mysqld]

replicate_do_db = erp

replicate_ignore_table = erp.manufacturing_log

MySQL row filtering

To achieve row filtering we use TRIGGERS. Make sure they are not replicated further down the hierarchy:

SET SESSION SQL_LOG_BIN = 0;

use erp

DROP TRIGGER IF EXISTS filter_row;

delimiter //

CREATE TRIGGER filter_row

BEFORE INSERT ON manufacturing_data

FOR EACH ROW

BEGIN

IF ( NEW.manufacture_plant != 'China' ) THEN

SIGNAL SQLSTATE '45000'

SET MESSAGE_TEXT = 'Row was filtered out.'

, CLASS_ORIGIN = 'FromDual filter trigger'

, SUBCLASS_ORIGIN = 'filter_row'

, CONSTRAINT_SCHEMA = 'erp'

, CONSTRAINT_NAME = 'filer_row'

, SCHEMA_NAME = 'erp'

, TABLE_NAME = 'manufacturing_data'

, COLUMN_NAME = ''

, MYSQL_ERRNO = 1644

;

END IF;

END;

//

delimiter ;

SET SESSION SQL_LOG_BIN = 0;Up to now this would cause to stop replication for every filtered row. To avoid this we tell the Filtering Slaves to skip this error number:

# /etc/my.cnf

[mysqld]

slave_skip_errors = 1644

Attaching production manufacturing Slaves (Man BR M1 and Man CN M1)

When we have finished everything on our head quarter site. We can start with the manufacturing sites (BR and CN):

On Master (Prod M1):

mysqldump -u root --set-gtid-purged=on --master-data=2 --triggers --routines --events --where='manufacture_plant="Brazil"' --databases erp > /tmp/erp_dump_br.sql

mysqldump -u root --set-gtid-purged=on --master-data=2 --triggers --routines --events --where='manufacture_plant="China"' --databases erp > /tmp/erp_dump_cn.sql

On the Manufacturing Masters (Man BR M1 and Man BR M2). Here we do NOT use a VIP because we think a blackhole storage engine is robust enough as master:

CHANGE MASTER TO master_host='192.168.1.43', master_port=3306

, master_user='replication', master_password='secret'

, master_auto_position=1;

RESET MASTER; -- On SLAVE!

system cat /tmp/erp_dump_br.sql | mysql -u root

START SLAVE;

The standby manufacturing (Man BR M2 and Man CN M2) database is created in the same way as the production manufacturing database on the master.

Testing replication from HQ to manufacturing plants

First we make sure, crm and finance is not replicated out and replication also does not stop (on Prod M1):

INSERT INTO finance.accounting VALUES (NULL, 'test data over VIP', NULL);

INSERT INTO finance.accounting VALUES (NULL, 'test data over VIP', NULL);

INSERT INTO crm.customer VALUES (NULL, 'test data over VIP', NULL);

INSERT INTO crm.customer VALUES (NULL, 'test data over VIP', NULL);

UPDATE finance.accounting SET data = 'Changed data';

UPDATE crm.customer SET data = 'Changed data';

DELETE FROM finance.accounting WHERE id = 1;

DELETE FROM crm.customer WHERE id = 1;

SELECT * FROM finance.accounting;

SELECT * FROM crm.customer;

SHOW SLAVE STATUS\G

The schema filter seems to work correctly. Then we check if also the row filter works correctly. For this we have to run the queries in statement based replication (SBR)! Otherwise the trigger would not fire:

use mysql

INSERT INTO erp.manufacturing_data VALUES (NULL, 'China', 'Highly secret manufacturing info as RBR.');

INSERT INTO erp.manufacturing_data VALUES (NULL, 'Brazil', 'Highly secret manufacturing info as RBR.');

-- This needs SUPER privilege... :-(

SET SESSION binlog_format = STATEMENT;

-- Caution those rows will NOT be replicated!!!

-- See filter rules for SBR

INSERT INTO erp.manufacturing_data VALUES (NULL, 'China', 'Highly secret manufacturing info as SBR lost.');

INSERT INTO erp.manufacturing_data VALUES (NULL, 'Brazil', 'Highly secret manufacturing info as SBR lost.');

use erp

INSERT INTO manufacturing_data VALUES (NULL, 'China', 'Highly secret manufacturing info as SBR.');

INSERT INTO manufacturing_data VALUES (NULL, 'Brazil', 'Highly secret manufacturing info as SBR.');

INSERT INTO manufacturing_data VALUES (NULL, 'Germany', 'Highly secret manufacturing info as SBR.');

INSERT INTO manufacturing_data VALUES (NULL, 'Switzerland', 'Highly secret manufacturing info as SBR.');

SET SESSION binlog_format = ROW;

SELECT * FROM erp.manufacturing_data;

Production data back to head quarter

Now we have to take care about the production data on their way back to the HQ. To achieve this we use the new MySQL 5.7 feature called multi source replication. For multi source replication the replication repositories must be kept in tables instead of files:

# /etc/my.cnf

[mysqld]

master_info_repository = TABLE # mandatory

relay_log_info_repository = TABLE # mandatory

Then we have to configure 2 replication channels from Prod M1 to their specific manufacturing masters over the VIP (VIP BR and VIP CN):

CHANGE MASTER TO MASTER_HOST='192.168.1.98', MASTER_PORT=3306

, MASTER_USER='replication', MASTER_PASSWORD='secret'

, MASTER_AUTO_POSITION=1

FOR CHANNEL "manu_br";

CHANGE MASTER TO MASTER_HOST='192.168.1.99', MASTER_PORT=3306

, MASTER_USER='replication', MASTER_PASSWORD='secret'

, MASTER_AUTO_POSITION=1

FOR CHANNEL "manu_cn";

START SLAVE FOR CHANNEL 'manu_br';

START SLAVE FOR CHANNEL 'manu_cn';

SHOW SLAVE STATUS FOR CHANNEL 'manu_br'\G

SHOW SLAVE STATUS FOR CHANNEL 'manu_cn'\G

Avoid to configure and activate the channels on Prod M2 as well.

Testing back replication from manufacturing plants

Brazil on Man BR M1:

INSERT INTO manufacturing_log VALUES (1, 'Production data from Brazil', 'data');

China on Man CN M1:

INSERT INTO manufacturing_log VALUES (2, 'Production data from China', 'data');

For testing:

SELECT * FROM manufacturing_log;

Make sure you do not run into conflicts (Primary Key, AUTO_INCREMENTS). Make sure filtering is defined correctly!

To check the different channel states you can use the following command:

SHOW SLAVE STATUS\G

or

SELECT ras.channel_name, ras.service_state AS 'SQL_thread', ras.remaining_delay

, CONCAT(user, '@', host, ':', port) AS user

, rcs.service_state AS IO_thread, REPLACE(received_transaction_set, '\n', '') AS received_transaction_set

FROM performance_schema.replication_applier_status AS ras

JOIN performance_schema.replication_connection_configuration AS rcc ON rcc.channel_name = ras.channel_name

JOIN performance_schema.replication_connection_status AS rcs ON ras.channel_name = rcs.channel_name

;Troubleshooting

Inject empty transaction

If you try to skip a transaction as you did earlier (SQL_SLAVE_SKIP_COUNTER) you will face some problems:

STOP SLAVE;

ERROR 1858 (HY000): sql_slave_skip_counter can not be set when the server is running with @@GLOBAL.GTID_MODE = ON. Instead, for each transaction that you want to skip, generate an empty transaction with the same GTID as the transaction

To skip the next transaction you have find the ones applied so far:

SHOW SLAVE STATUS\G

...

Executed_Gtid_Set: c3611091-f80e-11e4-99bc-28d2445cb2e9:1-20

then tell MySQL to skip this by injecting a new empty transaction:

SET SESSION GTID_NEXT='c3611091-f80e-11e4-99bc-28d2445cb2e9:21';

BEGIN;

COMMIT;

SET SESSION GTID_NEXT='AUTOMATIC';

SHOW SLAVE STATUS\G

...

Executed_Gtid_Set: c3611091-f80e-11e4-99bc-28d2445cb2e9:1-21

START SLAVE;

Revert from GTID-based replication to file/position-based replication

If you want to fall-back from MySQL GTID-based replication to file/position-based replication this is quite simple:

CHANGE MASTER TO MASTER_AUTO_POSITION = 0;

MySQL Support and Engineering

If you need some help or support our MySQL support and engineering team is happy to help you.