If you have used MySQL for a while, you have probably used mysqldump to backup your database. In part one of this blog, I am going to show you how to create a simple full and partial backup using mysqldump. In part two, I will show you how to use MySQL Enterprise Backup (which is the successor to the InnoDB Hot Backup product). MySQL Enterprise Backup allows you to backup your database while it is online and it keeps the database available to users during backup operations (you don’t have to take the database offline or lock any databases/tables).

This post will deal with mysqldump. For those of you that aren’t familiar with mysqldump:

The mysqldump client is a utility that performs logical backups, producing a set of SQL statements that can be run to reproduce the original schema objects, table data, or both. It dumps one or more MySQL database for backup or transfer to another SQL server. The mysqldump command can also generate output in CSV, other delimited text, or XML format.

The best feature about mysqldump is that it is easy to use. The main problem with using mysqldump occurs when you need to restore a database. When you execute mysqldump, the database backup (output) is an SQL file that contains all of the necessary SQL statements to restore the database – but restoring requires that you execute these SQL statements to essentially rebuild the database. Since you are recreating your database, the tables and all of your data from this file, the restoration procedure can take a long time to execute if you have a very large database.

There are a lot of features and options with mysqldump – (a complete list is here). I won’t review all of the features, but I will explain some of the ones that I use.

If you have InnoDB tables (InnoDB is the default storage engine as of MySQL 5.5 – replacing MyISAM), when you use mysqldump you will want to use the option –single-transaction or issue the command FLUSH TABLES WITH READ LOCK; in a separate terminal window before you use mysqldump. You will need to release the lock after the dump has completed with the UNLOCK TABLES; command. Either option (–single-transaction or FLUSH TABLES WITH READ LOCK;) acquires a global read lock on all tables at the beginning of the dump. As soon as this lock has been acquired, the binary log coordinates are read and the lock is released. If long-updating statements are running when the FLUSH statement is issued, the MySQL server may get stalled until those statements finish. After that, the dump becomes lock-free and does not disturb reads and writes on the tables. If the update statements that the MySQL server receives are short (in terms of execution time), the initial lock period should not be noticeable, even with many updates.

(from http://dev.mysql.com/doc/refman/5.6/en/mysqldump.html)

Here is the command to use mysqldump to simply backup all of your databases (assuming you have InnoDB tables). This command will create a dump (backup) file named all_databases.sql.

mysqldump --all-databases --single-transaction --user=root --pass > all_databases.sql

After you hit return, you will have to enter your password. You can include the password after the –pass option (example: –pass=my_password), but this is less secure and you will get the following error:

Warning: Using a password on the command line interface can be insecure.

Here is some information about the options that were used:

--all-databases - this dumps all of the tables in all of the databases

--user - The MySQL user name you want to use for the backup

--pass - The password for this user. You can leave this blank or include the password value (which is less secure)

--single-transaction - for InnoDB tables

If you are using Global Transaction Identifier’s (GTID’s) with InnoDB (GTID’s aren’t available with MyISAM), you will want to use the –set-gtid-purged=OFF option. Then you would issue this command:

mysqldump --all-databases --single-transaction --set-gtid-purged=OFF --user=root --pass > all_databases.sql

Otherwise you will see this error:

Warning: A partial dump from a server that has GTIDs will by default include the GTIDs of all transactions, even those that changed suppressed parts of the database. If you don't want to restore GTIDs, pass --set-gtid-purged=OFF. To make a complete dump, pass --all-databases --triggers --routines --events.

You can also execute a partial backup of all of your databases. This example will be a partial backup because I am not going to backup the default databases for MySQL (which are created during installation) – mysql, test, PERFORMANCE_SCHEMA and INFORMATION_SCHEMA

Note: mysqldump does not dump the INFORMATION_SCHEMA database by default. To dump INFORMATION_SCHEMA, name it explicitly on the command line and also use the –skip-lock-tables option.

mysqldump never dumps the performance_schema database.

mysqldump also does not dump the MySQL Cluster ndbinfo information database.

Before MySQL 5.6.6, mysqldump does not dump the general_log or slow_query_log tables for dumps of the mysql database. As of 5.6.6, the dump includes statements to recreate those tables so that they are not missing after reloading the dump file. Log table contents are not dumped.

If you encounter problems backing up views due to insufficient privileges, see Section E.5, “Restrictions on Views” for a workaround.

(from: http://dev.mysql.com/doc/refman/5.6/en/mysqldump.html)

To do a partial backup, you will need a list of the databases that you want to backup. You may retrieve a list of all of the databases by simply executing the SHOW DATABASES command from a mysql prompt:

mysql> SHOW DATABASES;

+--------------------+

| Database |

+--------------------+

| information_schema |

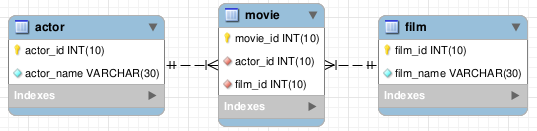

| comicbookdb |

| coupons |

| mysql |

| performance_schema |

| scripts |

| test |

| watchdb |

+--------------------+

8 rows in set (0.00 sec)

In this example, since I don’t want to backup the default mysql databases, I am only going to backup the comicbookdb, coupons, scripts and watchdb databases. I am going to use the following options:

--databases - This allows you to specify the databases that you want to backup. You can also <a href="http://dev.mysql.com/doc/mysql-enterprise-backup/3.6/en/partial.html">specify certain tables</a> that you want to backup. If you want to do a full backup of all of the databases, then leave out this option

--add-drop-database - This will insert a DROP DATABASE statement before each CREATE DATABASE statement. This is useful if you need to import the data to an existing MySQL instance where you want to overwrite the existing data. You can also use this to import your backup onto a new MySQL instance, and it will create the databases and tables for you.

--triggers - this will include the triggers for each dumped table

--routines - this will include the stored routines (procedures and functions) from the dumped databases

--events - this will include any events from the dumped databases

--set-gtid-purged=OFF - since I am using replication on this database (it is the master), I like to include this in case I want to create a new slave using the data that I have dumped. This option enables control over global transaction identifiers (GTID) information written to the dump file, by indicating whether to add a SET @@global.gtid_purged statement to the output.

--user - The MySQL user name you want to use

--pass - Again, you can add the actual value of the password (ex. --pass=mypassword), but it is less secure than typing in the password manually. This is useful for when you want to put the backup in a script, in cron or in Windows Task Scheduler.

--single-transaction - Since I am using InnoDB tables, I will want to use this option.

Here is the command that I will run from a prompt:

mysqldump --databases comicbookdb coupons scripts watchdb --single-transaction --set-gtid-purged=OFF --add-drop-database --triggers --routines --events --user=root --pass > partial_database_backup.sql

I will need to enter my password on the command line. After the backup has completed, if your backup file isn’t too large, you can open it and see the actual SQL statements that will be used if you decide that you need to recreate the database(s). If you accidentally dump all of the databases into one file, and you want to separate the dump file into smaller files, see my post on using Perl to split the dump file.

For example, here is the section of the dump file (partial_database_backup.db) for the comicbookdb database (without the table definitions). (I omitted the headers from the dump file.)

--

-- Current Database: `comicbookdb`

--

/*!40000 DROP DATABASE IF EXISTS `comicbookdb`*/;

CREATE DATABASE /*!32312 IF NOT EXISTS*/ `comicbookdb` /*!40100 DEFAULT CHARACTER SET latin1 */;

USE `comicbookdb`;

--

-- Table structure for table `comics`

--

DROP TABLE IF EXISTS `comics`;

/*!40101 SET @saved_cs_client = @@character_set_client */;

/*!40101 SET character_set_client = utf8 */;

CREATE TABLE `comics` (

`serial_id` int(7) NOT NULL AUTO_INCREMENT,

`date_time_added` datetime NOT NULL,

`publisher_id` int(6) NOT NULL,

....

If you are using the dump file to create a slave server, you can use the –master-data option, which includes the CHANGE MASTER information, which looks like this:

--

-- Position to start replication or point-in-time recovery from

--

CHANGE MASTER TO MASTER_LOG_FILE='mysql-bin.000013', MASTER_LOG_POS=79338;

If you used the –set-gtid-purged=OFF option, you would see the value of the Global Transaction Identifier’s (GTID’s):

--

--GTID state at the beginning of the backup

--

SET @@GLOBAL.GTID_PURGED='82F20158-5A16-11E2-88F9-C4A801092ABB:1-168523';

You may also test your backup without exporting any data by using the –no-data option. This will show you all of the information for creating the databases and tables, but it will not export any data. This is also useful for recreating a blank database on the same or on another server.

When you export your data, mysqldump will create INSERT INTO statements to import the data into the tables. However, the default is for the INSERT INTO statements to contain multiple-row INSERT syntax that includes several VALUES lists. This allows for a quicker import of the data. But, if you think that your data might be corrupt, and you want to be able to isolate a given row of data – or if you simply want to have one INSERT INTO statement per row of data, then you can use the –skip-extended-insert option. If you use the –skip-extended-insert option, importing the data will take much longer to complete, and the backup file size will be larger.

Importing and restoring the data is easy. To import the backup file into a new, blank instance of MySQL, you can simply use the mysql command to import the data:

mysql -uroot -p < partial_database_backup.sql

Again, you will need to enter your password or you can include the value after the -p option (less secure).

There are many more options that you can use with a href=”http://dev.mysql.com/doc/refman/5.6/en/mysqldump.html”>mysqldump. The main thing to remember is that you should backup your data on a regular basis, and move a copy of the backup file off the MySQL server.

Finally, here is a Perl script that I use in cron to backup my databases. This script allows you to specify which databases you want to backup via the mysql_bak.config file. This config file is simply a list of the databases that you want to backup, with an option to ignore any databases that are commented out with a #. This isn’t a secure script, as you have to embed the MySQL user password in the script.

#!/usr/bin/perl

# Perform a mysqldump on all the databases specified in the dbbackup.config file

use warnings;

use File::Basename;

# set the directory where you will keep the backup files

$backup_folder = '/Users/tonydarnell/mysqlbak';

# the config file is a text file with a list of the databases to backup

# this should be in the same location as this script, but you can modify this

# if you want to put the file somewhere else

my $config_file = dirname($0) . "/mysql_bak.config";

# example config file

# You may use a comment to bypass any database that you don't want to backup

# # Unwanted_DB (commented - will not be backed up)

# twtr

# cbgc

# retrieve a list of the databases from the config file

my @databases = removeComments(getFileContents($config_file));

# change to the directory of the backup files.

chdir($backup_folder) or die("Cannot go to folder '$backup_folder'");

# grab the local time variables

my ($sec,$min,$hour,$mday,$mon,$year,$wday,$yday,$isdst) = localtime(time);

$year += 1900;

$mon++;

#Zero padding

$mday = '0'.$mday if ($mday<10);

$mon = '0'.$mon if ($mon<10);

$hour = "0$hour" if $hour < 10;

$min = "0$min" if $min $folder/$file.Z`;

print "Done\n";

}

print "Done\n\n";

# this subroutine simply creates an array of the list of the databases

sub getFileContents {

my $file = shift;

open (FILE,$file) || die("Can't open '$file': $!");

my @lines=;

close(FILE);

return @lines;

}

# remove any commented tables from the @lines array

sub removeComments {

my @lines = @_;

@cleaned = grep(!/^\s*#/, @lines); #Remove Comments

@cleaned = grep(!/^\s*$/, @cleaned); #Remove Empty lines

return @cleaned;

}

![]() |

Tony Darnell is a Principal Sales Consultant for MySQL, a division of Oracle, Inc. MySQL is the world’s most popular open-source database program. Tony may be reached at info [at] ScriptingMySQL.com and on LinkedIn.

|

PlanetMySQL Voting:

Vote UP /

Vote DOWN

Vote UP /

Vote UP /

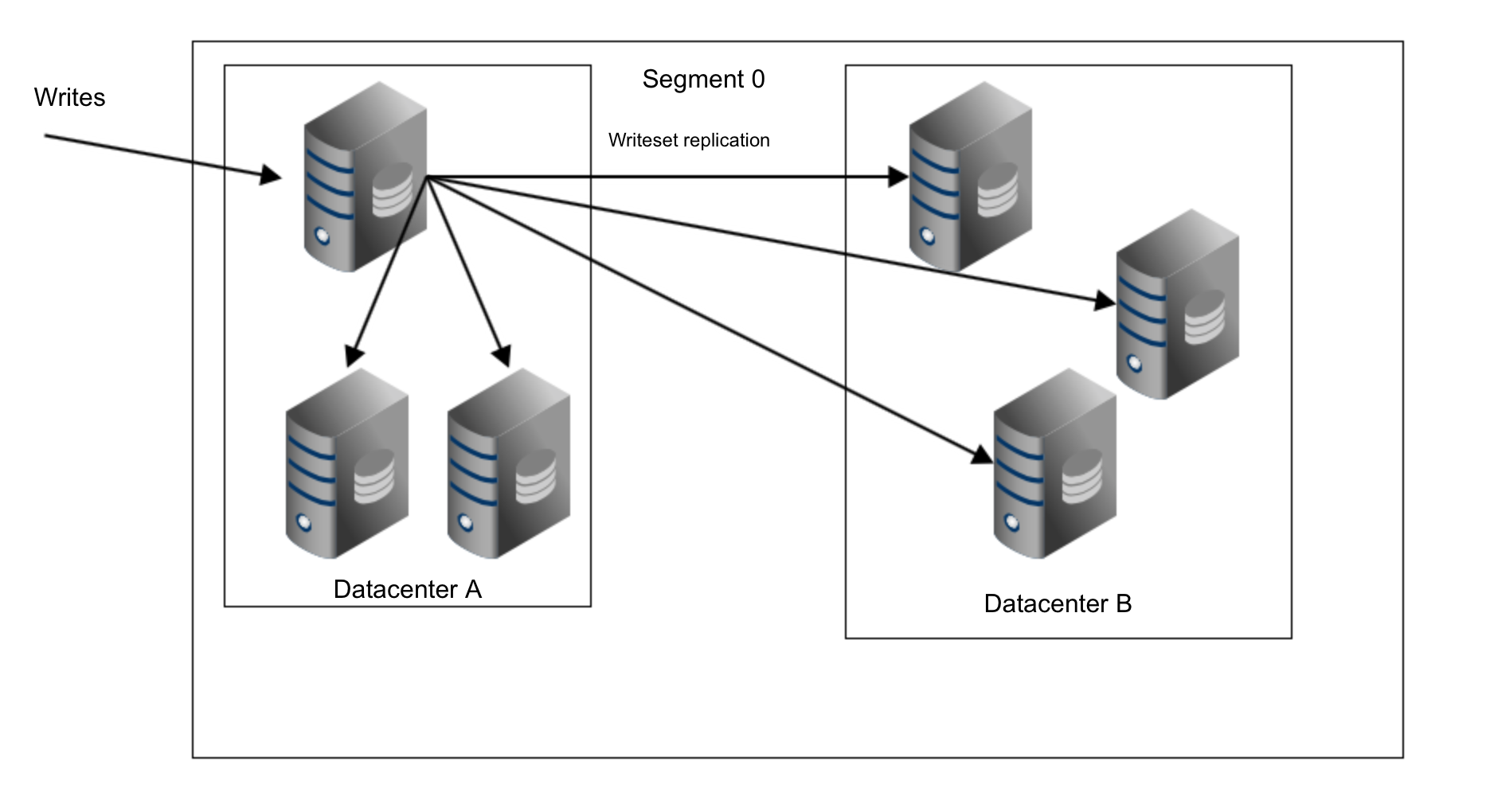

The MariaDB project is pleased to announce the immediate availability of MariaDB Galera Cluster 10.0.19 and 5.5.43. These are Stable (GA) releases.

The MariaDB project is pleased to announce the immediate availability of MariaDB Galera Cluster 10.0.19 and 5.5.43. These are Stable (GA) releases.