This tutorial walks through a Laravel 5.7 CRUD example for beginners, from scratch. Laravel 5.7 has some cool new features as well as several other enhancements and bug fixes. At a previous Laracon event, Taylor Otwell announced some of the notable changes, which include the following.

- Resources Directory Changes.

- Callable Action URLs.

- Laravel Dump Server.

- Improved Error Messages For Dynamic Calls.

In this tutorial, we will first install Laravel 5.7 and then build a CRUD application.

Laravel 5.7 CRUD Example Tutorial

First, let us install Laravel 5.7. We will use Composer's create-project command to generate the Laravel 5.7 project.

#1: Install Laravel 5.7

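Make sure Composer is installed on your machine, then type the following command. Without a version constraint, Composer installs the latest Laravel release, so the constraint at the end pins the project to 5.7.

composer create-project --prefer-dist laravel/laravel stocks "5.7.*"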

Now go inside the project folder and install the npm packages using the following command. This command requires Node.js on your machine, so if you have not installed it yet, please install it from its official site.

npm install

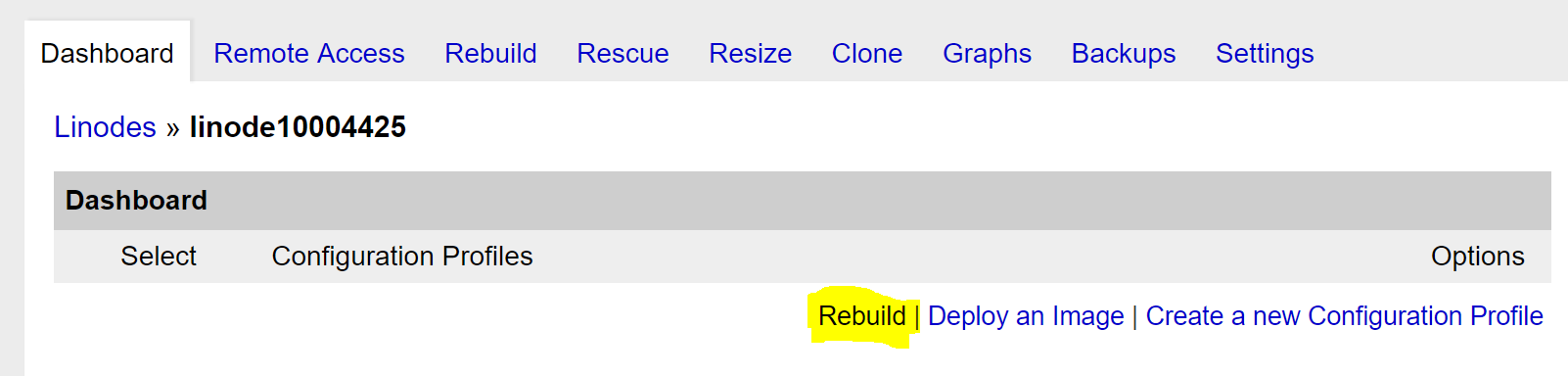

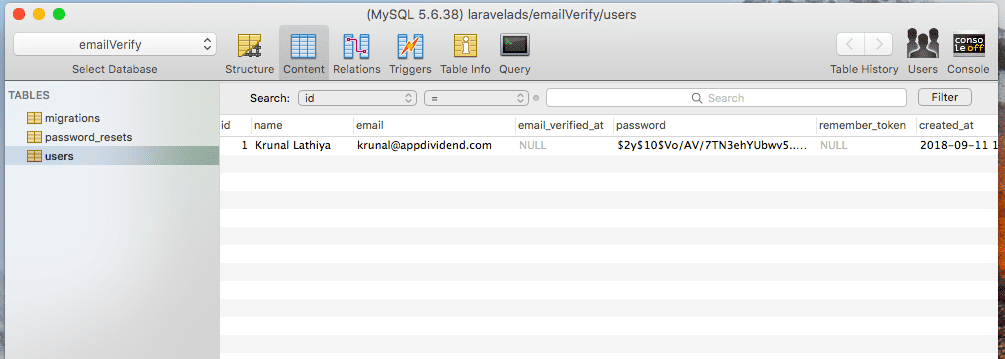

#2: Configure MySQL Database

First, create a database in MySQL, and then connect that database to the Laravel application. You can also use phpMyAdmin to create the database.

After creating the database, open the .env file inside the Laravel stocks project and add the database credentials. I have typed my credentials; please enter yours, otherwise the application won’t connect.

DB_CONNECTION=mysql
DB_HOST=127.0.0.1
DB_PORT=3306
DB_DATABASE=laravel57
DB_USERNAME=root
DB_PASSWORD=root

The application should now be able to connect to the MySQL database.

Laravel ships with a few default migration files, so you can generate their tables in the database using the following command.

php artisan migrate

#3: Create a model and migration file.

Go to the terminal and type the following command to generate the model and migration file.

php artisan make:model Share -m

It will create the model and migration file. Now, we will write the schema inside the <timestamp>_create_shares_table.php file.

/**
 * Run the migrations.
 *
 * @return void
 */
public function up()
{
    Schema::create('shares', function (Blueprint $table) {
        $table->increments('id');
        $table->string('share_name');
        $table->integer('share_price');
        $table->integer('share_qty');
        $table->timestamps();
    });
}

Now migrate the table using the following command.

php artisan migrate

Now, add the fillable property inside the Share.php file.

<?php

namespace App;

use Illuminate\Database\Eloquent\Model;

class Share extends Model
{
    protected $fillable = [
        'share_name',
        'share_price',
        'share_qty'
    ];
}
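To see what the fillable list does, here is a minimal sketch in plain PHP. It is not Eloquent's actual implementation; the helper name `onlyFillable` and the sample input (including the `is_admin` field) are assumptions made for illustration. The idea is that mass assignment keeps only the whitelisted keys and drops everything else.

```php
<?php
// Hypothetical helper that mimics the effect of $fillable during mass assignment.
// Eloquent's real logic lives inside the Model class; this only shows the idea.
function onlyFillable(array $input, array $fillable): array
{
    // array_flip turns the whitelist into keys so array_intersect_key can filter by name.
    return array_intersect_key($input, array_flip($fillable));
}

$fillable = ['share_name', 'share_price', 'share_qty'];

// Simulated form input with one extra field that is not whitelisted.
$input = [
    'share_name'  => 'ACME',
    'share_price' => 120,
    'share_qty'   => 10,
    'is_admin'    => 1, // not in $fillable, so it is dropped
];

$attributes = onlyFillable($input, $fillable);

print_r(array_keys($attributes));
```

Only the three share fields survive, which is why every column you want to set through `new Share([...])` has to be listed in the fillable property.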

#4: Create routes and controller

First, create the ShareController using the following command.

php artisan make:controller ShareController --resource

Now, inside the routes >> web.php file, add the Route::resource line shown at the bottom of the following code.

<?php

Route::get('/', function () {
    return view('welcome');
});

Route::resource('shares', 'ShareController');

By adding that single Route::resource line, we have registered multiple routes for our application. We can check them using the following command.

php artisan route:list
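As a rough guide to what that listing contains, the sketch below prints the seven routes a resource registration sets up for shares. It is plain PHP rather than Laravel code, and `resourceRoutes` is a hypothetical helper written only for this illustration; the exact column layout of the artisan output will differ.

```php
<?php
// Hypothetical helper, not part of Laravel: lists the routes that a
// resource registration such as Route::resource('shares', ...) sets up.
function resourceRoutes(string $name, string $param, string $controller): array
{
    $item = $name . '/{' . $param . '}';

    // Each row: HTTP verb, URI, controller action, route name.
    return [
        ['GET',       $name,             $controller . '@index',   $name . '.index'],
        ['POST',      $name,             $controller . '@store',   $name . '.store'],
        ['GET',       $name . '/create', $controller . '@create',  $name . '.create'],
        ['GET',       $item,             $controller . '@show',    $name . '.show'],
        ['PUT|PATCH', $item,             $controller . '@update',  $name . '.update'],
        ['DELETE',    $item,             $controller . '@destroy', $name . '.destroy'],
        ['GET',       $item . '/edit',   $controller . '@edit',    $name . '.edit'],
    ];
}

$routes = resourceRoutes('shares', 'share', 'ShareController');

foreach ($routes as [$verb, $uri, $action, $routeName]) {
    printf("%-10s %-20s %-26s %s\n", $verb, $uri, $action, $routeName);
}
```

The route names in the last column (shares.store, shares.edit, and so on) are the ones the views in this tutorial pass to the route() helper.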

Now open the ShareController.php file, and you can see that all the function declarations are already there.

<?php

namespace App\Http\Controllers;

use Illuminate\Http\Request;

class ShareController extends Controller
{
    /**
     * Display a listing of the resource.
     *
     * @return \Illuminate\Http\Response
     */
    public function index()
    {
        //
    }

    /**
     * Show the form for creating a new resource.
     *
     * @return \Illuminate\Http\Response
     */
    public function create()
    {
        //
    }

    /**
     * Store a newly created resource in storage.
     *
     * @param \Illuminate\Http\Request $request
     * @return \Illuminate\Http\Response
     */
    public function store(Request $request)
    {
        //
    }

    /**
     * Display the specified resource.
     *
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function show($id)
    {
        //
    }

    /**
     * Show the form for editing the specified resource.
     *
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function edit($id)
    {
        //
    }

    /**
     * Update the specified resource in storage.
     *
     * @param \Illuminate\Http\Request $request
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function update(Request $request, $id)
    {
        //
    }

    /**
     * Remove the specified resource from storage.
     *
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function destroy($id)
    {
        //
    }
}

#5: Create the views

Inside resources >> views folder, create one folder called shares.

Inside that folder, create the following three files.

- create.blade.php

- edit.blade.php

- index.blade.php

We also need a layout file inside the views folder. So create one file inside the views folder called layout.blade.php and add the following code to it.

<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <meta http-equiv="X-UA-Compatible" content="ie=edge">
  <title>Laravel 5.7 CRUD Example Tutorial</title>
  <link href="{{ asset('css/app.css') }}" rel="stylesheet" type="text/css" />
</head>
<body>
  <div class="container">
    @yield('content')
  </div>
  <script src="{{ asset('js/app.js') }}" type="text/javascript"></script>
</body>
</html>

This file is our main template, and all the other view files will extend it. Bootstrap 4 is already included here through app.css.

The next step is to code the create.blade.php file. Write the following code inside it.

@extends('layout')

@section('content')
<style>
  .uper {
    margin-top: 40px;
  }
</style>
<div class="card uper">
  <div class="card-header">
    Add Share
  </div>
  <div class="card-body">
    @if ($errors->any())
      <div class="alert alert-danger">
        <ul>
            @foreach ($errors->all() as $error)
              <li>{{ $error }}</li>
            @endforeach
        </ul>
      </div><br />
    @endif
      <form method="post" action="{{ route('shares.store') }}">
          <div class="form-group">
              @csrf
              <label for="name">Share Name:</label>
              <input type="text" class="form-control" id="name" name="share_name"/>
          </div>
          <div class="form-group">
              <label for="price">Share Price :</label>
              <input type="text" class="form-control" id="price" name="share_price"/>
          </div>
          <div class="form-group">
              <label for="quantity">Share Quantity:</label>
              <input type="text" class="form-control" id="quantity" name="share_qty"/>
          </div>
          <button type="submit" class="btn btn-primary">Add</button>
      </form>
  </div>
</div>
@endsection

Now open the ShareController.php file and make the create function return the create.blade.php view.

// ShareController.php

public function create()
{
    return view('shares.create');
}

Save the file and start the Laravel development server using the following command.

php artisan serve

Go to http://localhost:8000/shares/create.

You should see the Add Share form.

#6: Save the data

Now, we need to code the store function to save the data in the database. First, import the Share model inside the ShareController.php file.

<?php

namespace App\Http\Controllers;

use Illuminate\Http\Request;
use App\Share;

class ShareController extends Controller
{
    /**
     * Display a listing of the resource.
     *
     * @return \Illuminate\Http\Response
     */
    public function index()
    {
        //
    }

    /**
     * Show the form for creating a new resource.
     *
     * @return \Illuminate\Http\Response
     */
    public function create()
    {
        return view('shares.create');
    }

    /**
     * Store a newly created resource in storage.
     *
     * @param \Illuminate\Http\Request $request
     * @return \Illuminate\Http\Response
     */
    public function store(Request $request)
    {
        $request->validate([
            'share_name' => 'required',
            'share_price' => 'required|integer',
            'share_qty' => 'required|integer'
        ]);

        $share = new Share([
            'share_name' => $request->get('share_name'),
            'share_price' => $request->get('share_price'),
            'share_qty' => $request->get('share_qty')
        ]);
        $share->save();

        return redirect('/shares')->with('success', 'Stock has been added');
    }

    /**
     * Display the specified resource.
     *
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function show($id)
    {
        //
    }

    /**
     * Show the form for editing the specified resource.
     *
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function edit($id)
    {
        //
    }

    /**
     * Update the specified resource in storage.
     *
     * @param \Illuminate\Http\Request $request
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function update(Request $request, $id)
    {
        //
    }

    /**
     * Remove the specified resource from storage.
     *
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function destroy($id)
    {
        //
    }
}

If the validation fails, Laravel redirects back to the form with the error messages, and we display them inside the create.blade.php file.

If all the values are good and pass the validation, then it will save the values in the database.
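To make the 'required|integer' rule string more concrete, here is a simplified approximation in plain PHP. It is not Laravel's validator: `checkRules` is a hypothetical function, it handles only these two rules, and the real validator covers more cases (arrays, uploaded files, custom messages).

```php
<?php
// Simplified stand-in for two validation rules; not Laravel's implementation.
// Returns the names of the rules that failed for the given value.
function checkRules($value, string $rules): array
{
    foreach (explode('|', $rules) as $rule) {
        // "required": the value must be present and not an empty string.
        if ($rule === 'required'
            && ($value === null || (is_string($value) && trim($value) === ''))) {
            return ['required']; // nothing else is worth checking on an empty value
        }

        // "integer": the value must look like a whole number, e.g. "120" but not "12.5".
        if ($rule === 'integer' && filter_var($value, FILTER_VALIDATE_INT) === false) {
            return ['integer'];
        }
    }

    return [];
}

var_dump(checkRules('120', 'required|integer'));
var_dump(checkRules('12.5', 'required|integer'));
var_dump(checkRules('', 'required|integer'));
```

Form fields always arrive as strings, so a price typed as 12.5 fails the integer rule here even though it is a valid number.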

#7: Display the data.

Now open the index.blade.php file and add the following code.

@extends('layout')

@section('content')
<style>
  .uper {
    margin-top: 40px;
  }
</style>
<div class="uper">
  @if(session()->get('success'))
    <div class="alert alert-success">
      {{ session()->get('success') }}
    </div><br />
  @endif
  <table class="table table-striped">
    <thead>
        <tr>
          <td>ID</td>
          <td>Stock Name</td>
          <td>Stock Price</td>
          <td>Stock Quantity</td>
          <td colspan="2">Action</td>
        </tr>
    </thead>
    <tbody>
        @foreach($shares as $share)
        <tr>
            <td>{{$share->id}}</td>
            <td>{{$share->share_name}}</td>
            <td>{{$share->share_price}}</td>
            <td>{{$share->share_qty}}</td>
            <td><a href="{{ route('shares.edit',$share->id)}}" class="btn btn-primary">Edit</a></td>
            <td>
                <form action="{{ route('shares.destroy', $share->id)}}" method="post">
                  @csrf
                  @method('DELETE')
                  <button class="btn btn-danger" type="submit">Delete</button>
                </form>
            </td>
        </tr>
        @endforeach
    </tbody>
  </table>
</div>
@endsection
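The delete form above uses method="post" together with @method('DELETE') because HTML forms can only send GET and POST. The directive adds a hidden _method field, and the framework treats the request as the method named there. The sketch below shows that idea in plain PHP; `effectiveMethod` is a hypothetical function for illustration, not the framework's code.

```php
<?php
// Hypothetical illustration of form method spoofing.
// $realMethod is the HTTP method the browser used; $post is the submitted form data.
function effectiveMethod(string $realMethod, array $post): string
{
    // Only POST requests may be overridden, through the hidden "_method" field.
    if (strtoupper($realMethod) === 'POST' && isset($post['_method'])) {
        return strtoupper($post['_method']);
    }

    return strtoupper($realMethod);
}

// The delete form submits a POST carrying _method=DELETE.
echo effectiveMethod('POST', ['_method' => 'DELETE']), "\n";

// A plain form without the hidden field stays a POST.
echo effectiveMethod('POST', []), "\n";
```

This is how the Delete button ends up on the shares.destroy route even though the browser sent a POST.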

Next, we need to code the index() function inside the ShareController.php file.

<?php

namespace App\Http\Controllers;

use Illuminate\Http\Request;
use App\Share;

class ShareController extends Controller
{
    /**
     * Display a listing of the resource.
     *
     * @return \Illuminate\Http\Response
     */
    public function index()
    {
        $shares = Share::all();

        return view('shares.index', compact('shares'));
    }

    /**
     * Show the form for creating a new resource.
     *
     * @return \Illuminate\Http\Response
     */
    public function create()
    {
        return view('shares.create');
    }

    /**
     * Store a newly created resource in storage.
     *
     * @param \Illuminate\Http\Request $request
     * @return \Illuminate\Http\Response
     */
    public function store(Request $request)
    {
        $request->validate([
            'share_name' => 'required',
            'share_price' => 'required|integer',
            'share_qty' => 'required|integer'
        ]);

        $share = new Share([
            'share_name' => $request->get('share_name'),
            'share_price' => $request->get('share_price'),
            'share_qty' => $request->get('share_qty')
        ]);
        $share->save();

        return redirect('/shares')->with('success', 'Stock has been added');
    }

    /**
     * Display the specified resource.
     *
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function show($id)
    {
        //
    }

    /**
     * Show the form for editing the specified resource.
     *
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function edit($id)
    {
        //
    }

    /**
     * Update the specified resource in storage.
     *
     * @param \Illuminate\Http\Request $request
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function update(Request $request, $id)
    {
        //
    }

    /**
     * Remove the specified resource from storage.
     *
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function destroy($id)
    {
        //
    }
}

#8: Edit and Update Data

First, we need to code the edit() function inside the ShareController.php file.

<?php

namespace App\Http\Controllers;

use Illuminate\Http\Request;
use App\Share;

class ShareController extends Controller
{
    /**
     * Display a listing of the resource.
     *
     * @return \Illuminate\Http\Response
     */
    public function index()
    {
        $shares = Share::all();

        return view('shares.index', compact('shares'));
    }

    /**
     * Show the form for creating a new resource.
     *
     * @return \Illuminate\Http\Response
     */
    public function create()
    {
        return view('shares.create');
    }

    /**
     * Store a newly created resource in storage.
     *
     * @param \Illuminate\Http\Request $request
     * @return \Illuminate\Http\Response
     */
    public function store(Request $request)
    {
        $request->validate([
            'share_name' => 'required',
            'share_price' => 'required|integer',
            'share_qty' => 'required|integer'
        ]);

        $share = new Share([
            'share_name' => $request->get('share_name'),
            'share_price' => $request->get('share_price'),
            'share_qty' => $request->get('share_qty')
        ]);
        $share->save();

        return redirect('/shares')->with('success', 'Stock has been added');
    }

    /**
     * Display the specified resource.
     *
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function show($id)
    {
        //
    }

    /**
     * Show the form for editing the specified resource.
     *
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function edit($id)
    {
        $share = Share::find($id);

        return view('shares.edit', compact('share'));
    }

    /**
     * Update the specified resource in storage.
     *
     * @param \Illuminate\Http\Request $request
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function update(Request $request, $id)
    {
        //
    }

    /**
     * Remove the specified resource from storage.
     *
     * @param int $id
     * @return \Illuminate\Http\Response
     */
    public function destroy($id)
    {
        //
    }
}

Now, add the following lines of code inside the edit.blade.php file.

@extends('layout')

@section('content')
<style>
  .uper {
    margin-top: 40px;
  }
</style>
<div class="card uper">
  <div class="card-header">
    Edit Share
  </div>
  <div class="card-body">
    @if ($errors->any())
      <div class="alert alert-danger">
        <ul>
            @foreach ($errors->all() as $error)
              <li>{{ $error }}</li>
            @endforeach
        </ul>
      </div><br />
    @endif
      <form method="post" action="{{ route('shares.update', $share->id) }}">
        @method('PATCH')
        @csrf
        <div class="form-group">
          <label for="name">Share Name:</label>
          <input type="text" class="form-control" id="name" name="share_name" value="{{ $share->share_name }}" />
        </div>
        <div class="form-group">
          <label for="price">Share Price :</label>
          <input type="text" class="form-control" id="price" name="share_price" value="{{ $share->share_price }}" />
        </div>
        <div class="form-group">
          <label for="quantity">Share Quantity:</label>
          <input type="text" class="form-control" id="quantity" name="share_qty" value="{{ $share->share_qty }}" />
        </div>
        <button type="submit" class="btn btn-primary">Update</button>
      </form>
  </div>
</div>
@endsection
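A note on the value attributes in this form: Blade's {{ }} escapes HTML special characters in its output, but the attribute itself still needs surrounding double quotes, otherwise a share name containing a space would be cut off at the first space. The plain PHP sketch below shows roughly what ends up in the page; `escapeForHtml` and the sample name are assumptions made for illustration.

```php
<?php
// Roughly what Blade's {{ }} does before printing a value:
// convert HTML special characters so they cannot break out of the markup.
function escapeForHtml(string $value): string
{
    return htmlspecialchars($value, ENT_QUOTES, 'UTF-8');
}

// Hypothetical share name containing both spaces and double quotes.
$name = 'Acme "Blue" Corp';

// The attribute value is wrapped in double quotes and escaped inside them.
$input = '<input type="text" name="share_name" value="' . escapeForHtml($name) . '" />';

echo $input, "\n";
```

With the quotes in place, the browser reads the whole name back into the field, spaces and all.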

Finally, code the update function inside the ShareController.php file.

public function update(Request $request, $id)
{
    $request->validate([
        'share_name' => 'required',
        'share_price' => 'required|integer',
        'share_qty' => 'required|integer'
    ]);

    $share = Share::find($id);
    $share->share_name = $request->get('share_name');
    $share->share_price = $request->get('share_price');
    $share->share_qty = $request->get('share_qty');
    $share->save();

    return redirect('/shares')->with('success', 'Stock has been updated');
}

So, now you can update the existing values.

#9: Delete the data

Just code the destroy function inside the ShareController.php file.

public function destroy($id)
{
    $share = Share::find($id);
    $share->delete();

    return redirect('/shares')->with('success', 'Stock has been deleted Successfully');
}

That completes the Laravel 5.7 CRUD Example Tutorial For Beginners From Scratch. I have put the code in a GitHub repo, so check it out as well.

The post Laravel 5.7 CRUD Example Tutorial For Beginners From Scratch appeared first on AppDividend.
