One of the biggest and undead myths in SQL is that COUNT(*) is faster than COUNT(1). Or was it that COUNT(1) is faster than COUNT(*)? Impossible to remember, because there’s really no reason at all why one should be faster than the other. But is the myth justified?

Let’s measure!

How does COUNT(…) work?

But first, let’s look into some theory. The two ways to count things are not exactly the same thing. Why?

-

COUNT(*) counts all the tuples in a group

-

COUNT(<expr>) counts all the tuples in a group for which <expr> evaluates to something that IS NOT NULL

This distinction can be quite useful. Most of the time, we’ll simply COUNT(*) for convenience, but there are (at least) two cases where we don’t want that, for example:

When outer joining

Imagine that in the Sakila database, we have some actors that did not play in any films. Making sure such an actor actually exists:

INSERT INTO actor (actor_id, first_name, last_name)

VALUES (201, 'SUSAN', 'DAVIS');

When inner joining, we might write the following (using PostgreSQL syntax):

SELECT actor_id, a.first_name, a.last_name, count(*) AS c

FROM actor AS a

JOIN film_actor AS fa USING (actor_id)

JOIN film AS f USING (film_id)

GROUP BY actor_id

ORDER BY c ASC, actor_id ASC;

And we won’t get the newly added SUSAN DAVIS, because of the nature of inner join:

actor_id|first_name |last_name |c |

--------|-----------|------------|--|

148|EMILY |DEE |14|

35|JUDY |DEAN |15|

199|JULIA |FAWCETT |15|

186|JULIA |ZELLWEGER |16|

31|SISSY |SOBIESKI |18|

71|ADAM |GRANT |18|

1|PENELOPE |GUINESS |19|

30|SANDRA |PECK |19|

So we might change our query to use LEFT JOIN instead

SELECT actor_id, a.first_name, a.last_name, count(*) AS c

FROM actor AS a

LEFT JOIN film_actor AS fa USING (actor_id)

LEFT JOIN film AS f USING (film_id)

GROUP BY actor_id

ORDER BY c ASC, actor_id ASC;

There she is now, but oops, wrong count! She doesn’t have any films, which we have proven before with the INNER JOIN query. Yet we get 1:

actor_id|first_name |last_name |c |

--------|-----------|------------|--|

201|SUSAN |DAVIS | 1|

148|EMILY |DEE |14|

35|JUDY |DEAN |15|

199|JULIA |FAWCETT |15|

186|JULIA |ZELLWEGER |16|

31|SISSY |SOBIESKI |18|

71|ADAM |GRANT |18|

1|PENELOPE |GUINESS |19|

30|SANDRA |PECK |19|

Her COUNT(*) value is 1, because we do get 1 film tuple for her in the group, with all columns being NULL. The solution is to count the FILM_ID instead, which cannot be NULL in the table (being a primary key), but only because of the LEFT JOIN:

SELECT actor_id, a.first_name, a.last_name, count(film_id) AS c

FROM actor AS a

LEFT JOIN film_actor AS fa USING (actor_id)

LEFT JOIN film AS f USING (film_id)

GROUP BY actor_id

ORDER BY c ASC, actor_id ASC;

Notice, we could count other things than the primary key, but with the primary key, we’re quite certain we don’t get any other “accidental” nulls in our groups, which we did not want to exclude from the count value.

Now, we’re getting the correct result:

actor_id|first_name |last_name |c |

--------|-----------|------------|--|

201|SUSAN |DAVIS | 0|

148|EMILY |DEE |14|

35|JUDY |DEAN |15|

199|JULIA |FAWCETT |15|

186|JULIA |ZELLWEGER |16|

31|SISSY |SOBIESKI |18|

71|ADAM |GRANT |18|

1|PENELOPE |GUINESS |19|

30|SANDRA |PECK |19|

When counting subsets of a group

An even more powerful application of counting only non-null evaluations of an expression is counting only subsets of a group. We’ve already blogged about this technique in our previous post about aggregating several expressions in one single query.

For example, counting in a single query:

- All actors

- Actors with their first_name starting with A

- Actors with their first_name ending with A

- Actors with their first_name containing A

In SQL:

SELECT

count(*),

count(CASE WHEN first_name LIKE 'A%' THEN 1 END),

count(CASE WHEN first_name LIKE '%A' THEN 1 END),

count(CASE WHEN first_name LIKE '%A%' THEN 1 END)

FROM actor;

This yields:

count|count|count|count|

-----|-----|-----|-----|

201| 13| 30| 105|

This is very useful when pivoting data sets (see also Oracle/SQL Server PIVOT clause).

Notice that PostgreSQL supports the SQL standard FILTER clause for this, which is more convenient and more readable. The above query can be written like this, in PostgreSQL:

SELECT

count(*),

count(*) FILTER (WHERE first_name LIKE 'A%'),

count(*) FILTER (WHERE first_name LIKE '%A'),

count(*) FILTER (WHERE first_name LIKE '%A%')

FROM actor;

Back to COUNT(*) vs COUNT(1)

Now that we now the theory behind these COUNT expressions, what’s the difference between COUNT(*) and COUNT(1). There is none, effectively. COUNT(1) evaluates a constant expression for each row in the group, and it can be proven that this constant expression will never evaluate to NULL, so effectively, we’re running COUNT(*), counting ALL the rows in the group again.

There should be no difference, and parsers / optimisers should be able to recognise this and not do the extra work of checking every expression evaluation for NULL-ness.

I recently saw this discussion on Twitter, though, where Vik Fearing looked up the PostgreSQL sources, showing that PostgreSQL does do the extra work instead of optimising this:

So, I was curious to see if it mattered. I ran a benchmark on the 4 most popular RDBMS, with these results:

- MySQL: Doesn’t matter. Sometimes

COUNT(1) was faster, sometimes COUNT(*) was faster, so all differences were only benchmark artifacts

- Oracle: Doesn’t matter. Like MySQL

- PostgreSQL: Does matter (!).

COUNT(*) was consistently faster by around 10% on 1M rows, that’s much more than I had expected

- SQL Server: Doesn’t matter. Like MySQL

The benchmark code can be found in the following gists:

The results are below. Each benchmark run repeated SELECT COUNT(*) FROM t or SELECT COUNT(1) FROM t 100 times on a 1M row table, and then the benchmark was repeated 5 times to mitigate any warmup penalties and be fair with respect to caching.

The times displayed are relative to the fastest run. This removes any distraction that may be caused by interpreting actual execution times as we do not want to compare database products against each other.

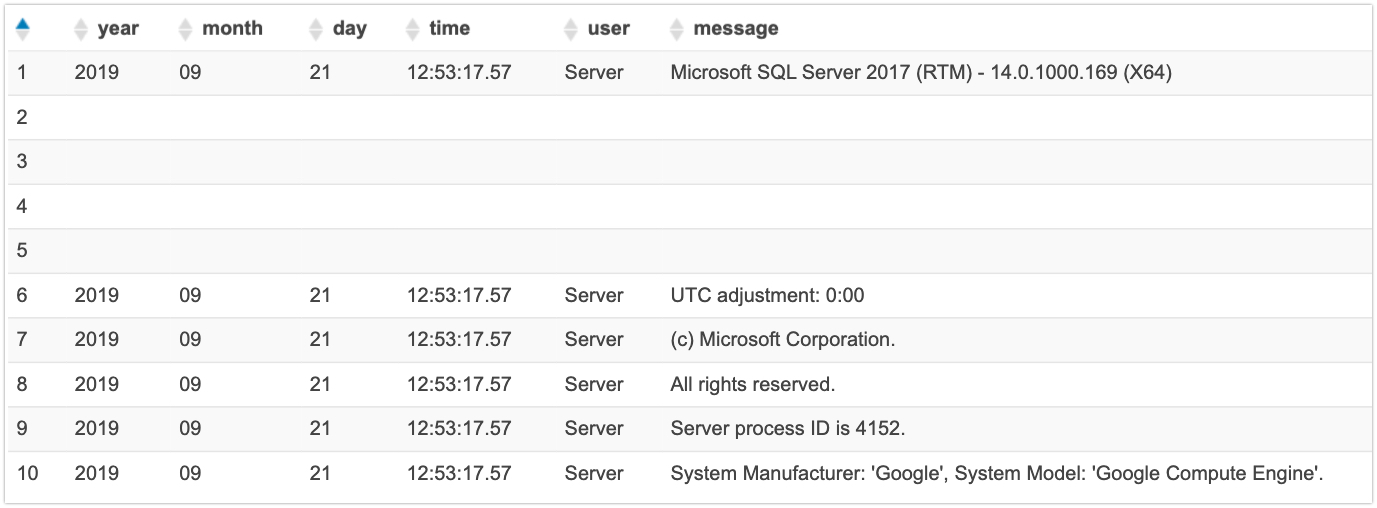

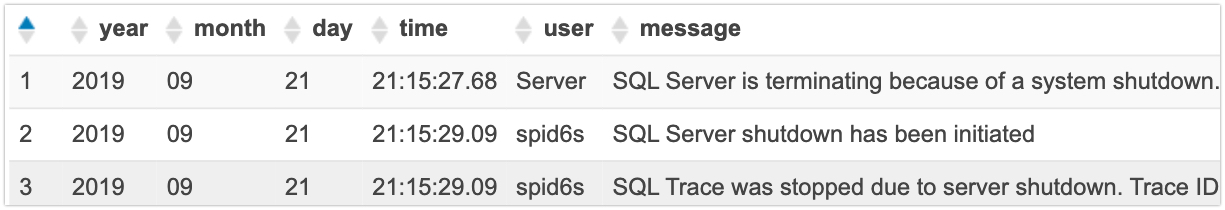

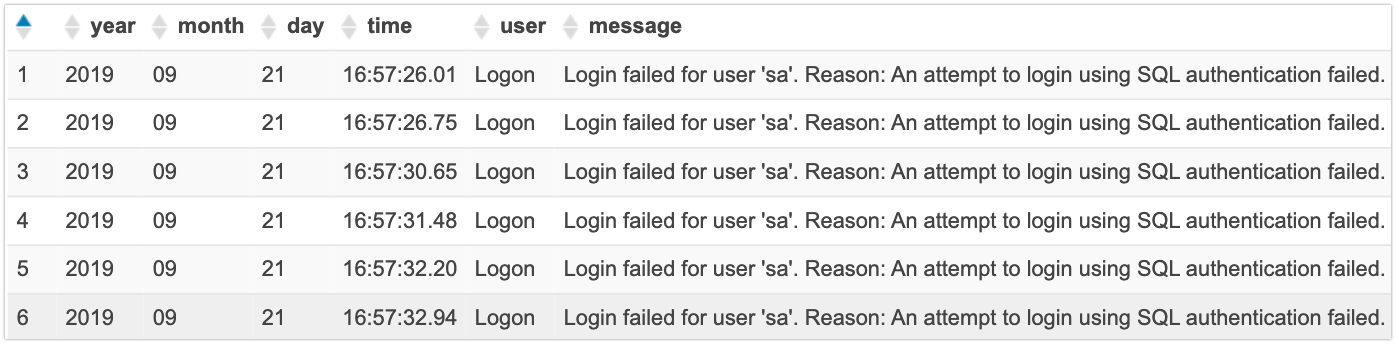

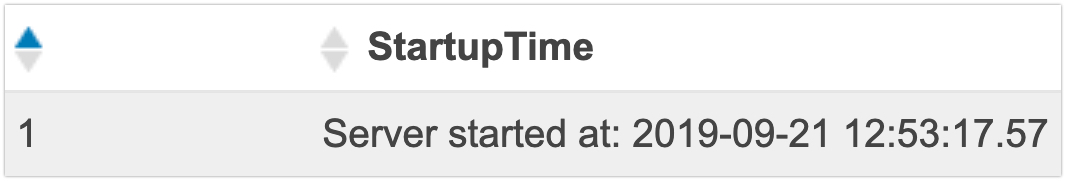

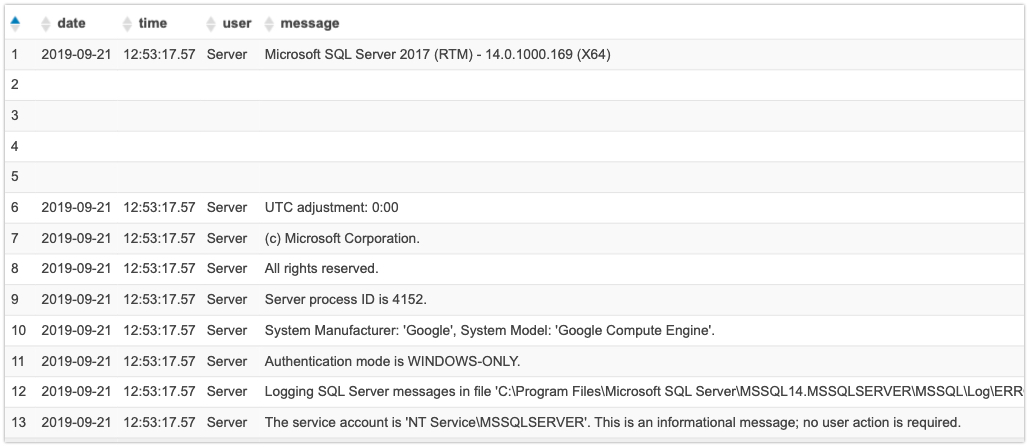

The database versions I’ve used are:

- MySQL 8.0.16 (in Docker)

- Oracle 18c XE (in Docker)

- PostgreSQL 11.3 (in Docker)

- SQL Server 2017 Express (in Windows)

MySQL

No relevant difference, nor a clear winner:

RUN STMT RELATIVE_TIME

-----------------------------

0 1 1.0079

0 2 1.0212

1 1 1.0229

1 2 1.0256

2 1 1.0009

2 2 1.0031

3 1 1.0291

3 2 1.0256

4 1 1.0618

4 2 1.0000

Oracle

No relevant difference, nor a clear winner

Run 1, Statement 1 : 1.06874

Run 1, Statement 2 : 1.01982

Run 2, Statement 1 : 1.09175

Run 2, Statement 2 : 1.0301

Run 3, Statement 1 : 1.00308

Run 3, Statement 2 : 1.02499

Run 4, Statement 1 : 1.02503

Run 4, Statement 2 : 1

Run 5, Statement 1 : 1.01259

Run 5, Statement 2 : 1.05828

PostgreSQL

A significant, consistent difference of almost 10%:

RUN 1, Statement 1: 1.00134

RUN 1, Statement 2: 1.09538

RUN 2, Statement 1: 1.00190

RUN 2, Statement 2: 1.09115

RUN 3, Statement 1: 1.00000

RUN 3, Statement 2: 1.09858

RUN 4, Statement 1: 1.00266

RUN 4, Statement 2: 1.09260

RUN 5, Statement 1: 1.00454

RUN 5, Statement 2: 1.09694

Again, I’m surprised by the order of magnitude of this difference. I would have expected it to be less. Curious to hear about your own results in the comments, or further ideas why this is so significant in PostgreSQL.

SQL Server

No relevant difference, nor a clear winner

Run 1, Statement 1: 1.00442

Run 1, Statement 2: 1.00702

Run 2, Statement 1: 1.00468

Run 2, Statement 2: 1.00000

Run 3, Statement 1: 1.00208

Run 3, Statement 2: 1.00624

Run 4, Statement 1: 1.00780

Run 4, Statement 2: 1.00364

Run 5, Statement 1: 1.00468

Run 5, Statement 2: 1.00702

Conclusion

As it is now in 2019, given the database versions mentioned above, unfortunately, there is a significant difference between COUNT(*) and COUNT(1) in PostgreSQL. Luckily (and this is rare in SQL), all the other dialects don’t care and thus, consistently using COUNT(*), rather than COUNT(1) is a slightly better choice for ALL measured database products from this article.

Do note that the benchmark only tried a very simple query! The results might be different when using joins, unions, or any other SQL constructs, or in other edge cases, e.g. when using COUNT() in HAVING or ORDER BY or with window functions, etc.

In any case, there shouldn’t be any difference, and I’m sure that a future PostgreSQL version will optimise the constant expression in the COUNT(<expr>) aggregate function directly in the parser to avoid the extra work.

For other interesting optimisations that do not depend on the cost model, see this article here.

We are glad to announce the 1.2.0 release of the

We are glad to announce the 1.2.0 release of the