This is the second part of a two-part blog series on Maximizing Database Query Efficiency in MySQL. You can read part one here.

Using Single-Column, Composite, Prefix, and Covering Indexes

Tables that frequently receive high traffic must be properly indexed. Indexing the table is not enough on its own: before you decide which indexes a table requires, analyze what types of queries or data retrieval you need for that specific table. Let's go over these types of indexes and how you can use them to maximize your query performance.

Single-Column Index

An InnoDB table can contain a maximum of 64 secondary indexes. A single-column index (or full-column index) is an index assigned to one particular column. A column that contains mostly distinct values is a good candidate for an index. A good index must have high cardinality and up-to-date statistics so the optimizer can choose the right query plan. To view the distribution of indexes, you can use the SHOW INDEXES syntax, as shown below:

root[test]#> SHOW INDEXES FROM users_account\G

*************************** 1. row ***************************

Table: users_account

Non_unique: 0

Key_name: PRIMARY

Seq_in_index: 1

Column_name: id

Collation: A

Cardinality: 131232

Sub_part: NULL

Packed: NULL

Null:

Index_type: BTREE

Comment:

Index_comment:

*************************** 2. row ***************************

Table: users_account

Non_unique: 1

Key_name: name

Seq_in_index: 1

Column_name: last_name

Collation: A

Cardinality: 8995

Sub_part: NULL

Packed: NULL

Null:

Index_type: BTREE

Comment:

Index_comment:

*************************** 3. row ***************************

Table: users_account

Non_unique: 1

Key_name: name

Seq_in_index: 2

Column_name: first_name

Collation: A

Cardinality: 131232

Sub_part: NULL

Packed: NULL

Null:

Index_type: BTREE

Comment:

Index_comment:

3 rows in set (0.00 sec)

You can also inspect the tables information_schema.index_statistics or mysql.innodb_index_stats.
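To make the cardinality figures from the SHOW INDEXES output easier to compare, here is a minimal Python sketch that turns them into a selectivity ratio (distinct values divided by total rows). It assumes that the PRIMARY key cardinality (131232) approximates the row count, and the `selectivity` helper is a hypothetical name, not a MySQL function. Keep in mind that InnoDB cardinality values are estimates.

```python
def selectivity(cardinality: int, total_rows: int) -> float:
    """Return distinct values per row; closer to 1.0 means a more selective index."""
    if total_rows <= 0:
        raise ValueError("total_rows must be positive")
    return cardinality / total_rows


# Assumption: the PRIMARY key cardinality approximates the table's row count.
TOTAL_ROWS = 131232

# Cardinality values copied from the SHOW INDEXES output above.
index_parts = [
    ("PRIMARY (id)", 131232),
    ("name (last_name)", 8995),
    ("name (last_name, first_name)", 131232),
]

for label, cardinality in index_parts:
    print(f"{label}: {selectivity(cardinality, TOTAL_ROWS):.3f}")
```

By this measure last_name alone is weakly selective (roughly 0.07), while the combination of last_name and first_name is about as selective as the primary key.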

Compound (Composite) or Multi-Part Indexes

A compound index (commonly called a composite index) is a multi-part index composed of multiple columns. MySQL allows up to 16 columns in a single composite index. Exceeding the limit returns an error like the one below:

ERROR 1070 (42000): Too many key parts specified; max 16 parts allowed

A composite index provides a boost to your queries, but it requires a clear understanding of how you are retrieving the data. For example, a table with a DDL of...

CREATE TABLE `users_account` (

`id` int(11) NOT NULL AUTO_INCREMENT,

`last_name` char(30) NOT NULL,

`first_name` char(30) NOT NULL,

`dob` date DEFAULT NULL,

`zip` varchar(10) DEFAULT NULL,

`city` varchar(100) DEFAULT NULL,

`state` varchar(100) DEFAULT NULL,

`country` varchar(50) NOT NULL,

`tel` varchar(16) DEFAULT NULL,

PRIMARY KEY (`id`),

KEY `name` (`last_name`,`first_name`)

) ENGINE=InnoDB DEFAULT CHARSET=latin1

...has a composite index named `name`. The composite index improves query performance when the query's conditions reference its columns as key parts. For example, see the following:

root[test]#> explain format=json select * from users_account where last_name='Namuag' and first_name='Maximus'\G

*************************** 1. row ***************************

EXPLAIN: {

"query_block": {

"select_id": 1,

"cost_info": {

"query_cost": "1.20"

},

"table": {

"table_name": "users_account",

"access_type": "ref",

"possible_keys": [

"name"

],

"key": "name",

"used_key_parts": [

"last_name",

"first_name"

],

"key_length": "60",

"ref": [

"const",

"const"

],

"rows_examined_per_scan": 1,

"rows_produced_per_join": 1,

"filtered": "100.00",

"cost_info": {

"read_cost": "1.00",

"eval_cost": "0.20",

"prefix_cost": "1.20",

"data_read_per_join": "352"

},

"used_columns": [

"id",

"last_name",

"first_name",

"dob",

"zip",

"city",

"state",

"country",

"tel"

]

}

}

}

1 row in set, 1 warning (0.00 sec)

The used_key_parts field shows that the query plan selected exactly the columns covered by our composite index.

Composite indexes have limitations as well. Certain query conditions prevent the optimizer from using every column in the key.

The documentation says, "The optimizer attempts to use additional key parts to determine the interval as long as the comparison operator is =, <=>, or IS NULL. If the operator is >, <, >=, <=, !=, <>, BETWEEN, or LIKE, the optimizer uses it but considers no more key parts. For the following expression, the optimizer uses = from the first comparison. It also uses >= from the second comparison but considers no further key parts and does not use the third comparison for interval construction…". Basically, this means that even though you have a composite index on two columns, the sample query below does not use both fields as key parts:

root[test]#> explain format=json select * from users_account where last_name>='Zu' and first_name='Maximus'\G

*************************** 1. row ***************************

EXPLAIN: {

"query_block": {

"select_id": 1,

"cost_info": {

"query_cost": "34.61"

},

"table": {

"table_name": "users_account",

"access_type": "range",

"possible_keys": [

"name"

],

"key": "name",

"used_key_parts": [

"last_name"

],

"key_length": "60",

"rows_examined_per_scan": 24,

"rows_produced_per_join": 2,

"filtered": "10.00",

"index_condition": "((`test`.`users_account`.`first_name` = 'Maximus') and (`test`.`users_account`.`last_name` >= 'Zu'))",

"cost_info": {

"read_cost": "34.13",

"eval_cost": "0.48",

"prefix_cost": "34.61",

"data_read_per_join": "844"

},

"used_columns": [

"id",

"last_name",

"first_name",

"dob",

"zip",

"city",

"state",

"country",

"tel"

]

}

}

}

1 row in set, 1 warning (0.00 sec)

In this case, and whenever your queries mostly filter by ranges rather than by constant or reference lookups, avoid relying on composite indexes. The unused key parts waste memory and buffer pool space, and they can degrade the performance of your queries.
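The rule quoted from the documentation can be modelled in a few lines. The following Python sketch is a deliberately simplified illustration, not the optimizer's actual implementation: the `used_key_parts` function and its operator sets are hypothetical names, and it only mimics the used_key_parts field reported by the two EXPLAIN outputs above.

```python
# Operators that let the optimizer continue to the next key part.
EQUALITY_OPS = {"=", "<=>", "IS NULL"}
# Operators that are used but stop further key parts from being considered.
RANGE_OPS = {">", "<", ">=", "<=", "!=", "<>", "BETWEEN", "LIKE"}

# Column order of the composite index `name` from the DDL above.
NAME_INDEX = ("last_name", "first_name")


def used_key_parts(index_columns, predicates):
    """Return the index columns usable for interval construction.

    predicates maps a column name to the comparison operator applied to it.
    Simplified model: walk the index left to right, keep going on equality,
    stop after the first range operator or the first column without a predicate.
    """
    used = []
    for column in index_columns:
        operator = predicates.get(column)
        if operator in EQUALITY_OPS:
            used.append(column)
            continue
        if operator in RANGE_OPS:
            used.append(column)
        break
    return used


# Both columns compared with "=": both key parts are used.
print(used_key_parts(NAME_INDEX, {"last_name": "=", "first_name": "="}))
# Range on the leading column: first_name is no longer considered.
print(used_key_parts(NAME_INDEX, {"last_name": ">=", "first_name": "="}))
# No predicate on the leading column: the index cannot be used this way.
print(used_key_parts(NAME_INDEX, {"first_name": "="}))
```

The model ignores details such as LIKE patterns with a leading wildcard, so treat it as a way to reason about column order rather than a prediction of the exact plan.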

Prefix Indexes

Prefix indexes are indexes that store only a leading portion of each indexed column's value, up to the length you define, so only that portion (the prefix data) is kept in the index and in the buffer. Prefix indexes can reduce your buffer pool usage and your disk space because they do not need to store the full length of the column.

What does this mean? Let's take an example and compare the impact of a full-length index versus a prefix index.

root[test]#> create index name on users_account(last_name, first_name);

Query OK, 0 rows affected (0.42 sec)

Records: 0 Duplicates: 0 Warnings: 0

root[test]#> \! du -hs /var/lib/mysql/test/users_account.*

12K /var/lib/mysql/test/users_account.frm

36M /var/lib/mysql/test/users_account.ibd

We created a full-length composite index, and the users_account tablespace now consumes a total of 36MiB. Let's drop it and then add a prefix index.

root[test]#> drop index name on users_account;

Query OK, 0 rows affected (0.01 sec)

Records: 0 Duplicates: 0 Warnings: 0

root[test]#> alter table users_account engine=innodb;

Query OK, 0 rows affected (0.63 sec)

Records: 0 Duplicates: 0 Warnings: 0

root[test]#> \! du -hs /var/lib/mysql/test/users_account.*

12K /var/lib/mysql/test/users_account.frm

24M /var/lib/mysql/test/users_account.ibd

root[test]#> create index name on users_account(last_name(5), first_name(5));

Query OK, 0 rows affected (0.42 sec)

Records: 0 Duplicates: 0 Warnings: 0

root[test]#> \! du -hs /var/lib/mysql/test/users_account.*

12K /var/lib/mysql/test/users_account.frm

28M /var/lib/mysql/test/users_account.ibd

With the prefix index, the tablespace takes up only 28MiB, which is 8MiB less than with the full-length index. That's great to hear, but it doesn't mean that it performs well or serves what you need.

If you decide to add a prefix index, you must first identify what type of queries you need for data retrieval. A prefix index helps you use the buffer pool more efficiently, which can help query performance, but you also need to know its limitations. For example, let's compare the performance of a full-length index and a prefix index.

First, let's create a full-length composite index:

root[test]#> create index name on users_account(last_name, first_name);

Query OK, 0 rows affected (0.45 sec)

Records: 0 Duplicates: 0 Warnings: 0

root[test]#> EXPLAIN format=json select last_name from users_account where last_name='Namuag' and first_name='Maximus Aleksandre' \G

*************************** 1. row ***************************

EXPLAIN: {

"query_block": {

"select_id": 1,

"cost_info": {

"query_cost": "1.61"

},

"table": {

"table_name": "users_account",

"access_type": "ref",

"possible_keys": [

"name"

],

"key": "name",

"used_key_parts": [

"last_name",

"first_name"

],

"key_length": "60",

"ref": [

"const",

"const"

],

"rows_examined_per_scan": 3,

"rows_produced_per_join": 3,

"filtered": "100.00",

"using_index": true,

"cost_info": {

"read_cost": "1.02",

"eval_cost": "0.60",

"prefix_cost": "1.62",

"data_read_per_join": "1K"

},

"used_columns": [

"last_name",

"first_name"

]

}

}

}

1 row in set, 1 warning (0.00 sec)

root[test]#> flush status;

Query OK, 0 rows affected (0.02 sec)

root[test]#> pager cat -> /dev/null; select last_name from users_account where last_name='Namuag' and first_name='Maximus Aleksandre' \G

PAGER set to 'cat -> /dev/null'

3 rows in set (0.00 sec)

root[test]#> nopager; show status like 'Handler_read%';

PAGER set to stdout

+-----------------------+-------+

| Variable_name | Value |

+-----------------------+-------+

| Handler_read_first | 0 |

| Handler_read_key | 1 |

| Handler_read_last | 0 |

| Handler_read_next | 3 |

| Handler_read_prev | 0 |

| Handler_read_rnd | 0 |

| Handler_read_rnd_next | 0 |

+-----------------------+-------+

7 rows in set (0.00 sec)

The result reveals that the query is, in fact, using a covering index ("using_index": true) and uses the index properly: Handler_read_key is incremented, and the index scan shows up as Handler_read_next being incremented.

Now, let's try the same approach with a prefix index:

root[test]#> create index name on users_account(last_name(5), first_name(5));

Query OK, 0 rows affected (0.22 sec)

Records: 0 Duplicates: 0 Warnings: 0

root[test]#> EXPLAIN format=json select last_name from users_account where last_name='Namuag' and first_name='Maximus Aleksandre' \G

*************************** 1. row ***************************

EXPLAIN: {

"query_block": {

"select_id": 1,

"cost_info": {

"query_cost": "3.60"

},

"table": {

"table_name": "users_account",

"access_type": "ref",

"possible_keys": [

"name"

],

"key": "name",

"used_key_parts": [

"last_name",

"first_name"

],

"key_length": "10",

"ref": [

"const",

"const"

],

"rows_examined_per_scan": 3,

"rows_produced_per_join": 3,

"filtered": "100.00",

"cost_info": {

"read_cost": "3.00",

"eval_cost": "0.60",

"prefix_cost": "3.60",

"data_read_per_join": "1K"

},

"used_columns": [

"last_name",

"first_name"

],

"attached_condition": "((`test`.`users_account`.`first_name` = 'Maximus Aleksandre') and (`test`.`users_account`.`last_name` = 'Namuag'))"

}

}

}

1 row in set, 1 warning (0.00 sec)

root[test]#> flush status;

Query OK, 0 rows affected (0.01 sec)

root[test]#> pager cat -> /dev/null; select last_name from users_account where last_name='Namuag' and first_name='Maximus Aleksandre' \G

PAGER set to 'cat -> /dev/null'

3 rows in set (0.00 sec)

root[test]#> nopager; show status like 'Handler_read%';

PAGER set to stdout

+-----------------------+-------+

| Variable_name | Value |

+-----------------------+-------+

| Handler_read_first | 0 |

| Handler_read_key | 1 |

| Handler_read_last | 0 |

| Handler_read_next | 3 |

| Handler_read_prev | 0 |

| Handler_read_rnd | 0 |

| Handler_read_rnd_next | 0 |

+-----------------------+-------+

7 rows in set (0.00 sec)

MySQL reveals that it does use the index properly, but there is a noticeable cost overhead compared to the full-length index (query_cost 3.60 versus 1.61). That is expected, since the prefix index does not cover the whole length of the field values, so MySQL must read the rows to check the full condition (see attached_condition, and note that using_index is no longer reported). A prefix index is neither a replacement for nor an alternative to full-length indexing, and it can produce poor results when used inappropriately. So you need to determine what type of queries you run and what data you need to retrieve.
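One common way to judge a prefix length is to measure how many distinct values survive when each value is truncated to that length. The Python sketch below illustrates the idea under stated assumptions: the sample last names are made up for illustration, and `prefix_selectivity` is a hypothetical helper, not a MySQL feature.

```python
def prefix_selectivity(values, length):
    """Ratio of distinct prefixes of the given length to the number of rows."""
    if not values:
        raise ValueError("values must not be empty")
    distinct_prefixes = {value[:length] for value in values}
    return len(distinct_prefixes) / len(values)


# Hypothetical sample of last names; every full value is distinct.
last_names = [
    "Namuag", "Namura", "Namuth", "Smith",
    "Smithson", "Smythe", "Zulueta", "Zuniga",
]

for length in (3, 5, 6):
    ratio = prefix_selectivity(last_names, length)
    print(f"prefix length {length}: {ratio:.3f}")
```

A prefix whose ratio is close to that of the full column keeps most of the index's filtering power while storing fewer bytes. A much lower ratio means many rows share the same prefix, so more rows have to be read and rechecked.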

Covering Indexes

Covering indexes don't require any special syntax in MySQL. A covering index in InnoDB refers to the case where all fields selected in a query are covered by an index. MySQL then does not need to read the table rows at all; it answers the query from the data in the index alone, which speeds up the query significantly. For example, take our query from earlier:

select last_name from users_account where last_name='Namuag' and first_name='Maximus Aleksandre' \G

As shown earlier, this query is served by a covering index. When your tables are well designed and properly indexed, try to write your queries so that they can leverage a covering index wherever possible. This helps maximize the efficiency of your queries and results in better performance.
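As a rough mental model, a query is covered when every column it touches is available in the index itself. The Python sketch below expresses that check; `is_covered` and its parameters are hypothetical names used for illustration. It relies on two facts: InnoDB secondary indexes also carry the primary key columns, and a column indexed only by prefix cannot supply its full value.

```python
def is_covered(query_columns, index_columns, primary_key=(), prefix_columns=()):
    """Return True if the index alone can supply every column the query uses.

    query_columns  -- columns referenced in SELECT and WHERE
    index_columns  -- columns of the secondary index, in any order
    primary_key    -- primary key columns (stored in InnoDB secondary indexes)
    prefix_columns -- index columns that are indexed by prefix only
    """
    available = (set(index_columns) | set(primary_key)) - set(prefix_columns)
    return set(query_columns) <= available


NAME_INDEX = ("last_name", "first_name")
ALL_COLUMNS = ("id", "last_name", "first_name", "dob", "zip",
               "city", "state", "country", "tel")

# SELECT last_name ... WHERE last_name = ... AND first_name = ...
print(is_covered(("last_name", "first_name"), NAME_INDEX, primary_key=("id",)))
# SELECT * needs columns that are not in the index.
print(is_covered(ALL_COLUMNS, NAME_INDEX, primary_key=("id",)))
# The same narrow query against the prefix index (last_name(5), first_name(5)).
print(is_covered(("last_name", "first_name"), NAME_INDEX,
                 primary_key=("id",), prefix_columns=NAME_INDEX))
```

This matches the EXPLAIN outputs earlier in the post: the narrow query reports using_index with the full-length index, but not with the prefix index.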

Leverage Tools That Offer Advisors or Query Performance Monitoring

Organizations often start by looking on GitHub for open-source software that can offer great benefits. For simple advisories that help you optimize your queries, you can leverage the Percona Toolkit. For a MySQL DBA, the Percona Toolkit is like a Swiss Army knife.

To analyze how your queries use your indexes, you can use pt-index-usage.

There is also pt-query-digest, which can analyze MySQL queries from logs, processlist, and tcpdump. In fact, pt-query-digest is the most important tool for analyzing and inspecting bad queries. Use it to aggregate similar queries together and report on those that consume the most execution time.
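To show what "aggregating similar queries" means in practice, here is a small Python sketch of the general idea: replace literals with placeholders so that queries differing only in their values share one fingerprint, then total the time per fingerprint. This is a simplified illustration and not pt-query-digest's actual algorithm; the `fingerprint` and `digest` functions and the sample timings are hypothetical.

```python
import re
from collections import defaultdict


def fingerprint(sql):
    """Normalize a query so that only its shape remains."""
    sql = sql.strip().lower()
    sql = re.sub(r"'(?:[^'\\]|\\.)*'", "?", sql)  # quoted string literals
    sql = re.sub(r"\b\d+\b", "?", sql)            # standalone numbers
    sql = re.sub(r"\s+", " ", sql)                # collapse whitespace
    return sql


def digest(entries):
    """Group (sql, seconds) pairs by fingerprint, slowest group first."""
    totals = defaultdict(lambda: [0, 0.0])
    for sql, seconds in entries:
        group = totals[fingerprint(sql)]
        group[0] += 1
        group[1] += seconds
    rows = [(fp, count, total) for fp, (count, total) in totals.items()]
    return sorted(rows, key=lambda row: row[2], reverse=True)


# Hypothetical log entries: (query text, execution time in seconds).
sample_log = [
    ("SELECT * FROM users_account WHERE last_name='Namuag'", 0.5),
    ("SELECT * FROM users_account WHERE last_name='Smith'", 0.25),
    ("SELECT * FROM users_account WHERE id = 42", 0.5),
]

for query_shape, count, total_seconds in digest(sample_log):
    print(f"{total_seconds:.2f}s  x{count}  {query_shape}")
```

The real tool reads slow logs and other sources and reports far more detail, but the grouping step is what lets you see which query shapes cost the most overall.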

For archiving old records, you can use pt-archiver. To inspect your database for duplicate indexes, use pt-duplicate-key-checker. You might also take advantage of pt-deadlock-logger. Although deadlocks stem from a poor implementation rather than from an underperforming or inefficient query, they still impact query efficiency. If you need to perform table maintenance that adds indexes online without affecting the database traffic going to a particular table, you can use pt-online-schema-change. Alternatively, you can use gh-ost, which is also very useful for schema migrations.
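To illustrate the kind of redundancy a duplicate-index check looks for, the Python sketch below flags any index whose columns form a leftmost prefix of another index on the same table. It is a simplified illustration rather than pt-duplicate-key-checker's actual logic, and the `redundant_indexes` function and the extra index names are hypothetical. A unique index enforces a constraint, so it is not necessarily safe to drop even if it is flagged this way.

```python
def redundant_indexes(indexes):
    """Return (redundant, covered_by) pairs for one table.

    indexes maps an index name to its ordered tuple of columns. An index is
    reported when its columns are a leftmost prefix of another index.
    Indexes with identical columns are reported once, by name order.
    """
    found = []
    for short_name, short_cols in indexes.items():
        for long_name, long_cols in indexes.items():
            if short_name == long_name:
                continue
            is_prefix = tuple(long_cols[:len(short_cols)]) == tuple(short_cols)
            strictly_shorter = len(short_cols) < len(long_cols)
            if is_prefix and (strictly_shorter or short_name < long_name):
                found.append((short_name, long_name))
    return found


# `name` comes from the DDL above; the other two indexes are hypothetical.
table_indexes = {
    "name": ("last_name", "first_name"),
    "idx_last_name": ("last_name",),
    "idx_zip": ("zip",),
}

for redundant, covered_by in redundant_indexes(table_indexes):
    print(f"{redundant} is a leftmost prefix of {covered_by}")
```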

If you are looking for enterprise features, bundled with query performance monitoring, alarms and alerts, dashboards or metrics that help you optimize your queries, and advisors, ClusterControl may be the tool for you. ClusterControl offers many features that show you Top Queries, Running Queries, and Query Outliers. Check out the blog MySQL Query Performance Tuning, which guides you through monitoring your queries with ClusterControl.

Conclusion

You've arrived at the end of our two-part blog series. We covered the factors that cause query degradation and how to resolve them in order to maximize the efficiency of your database queries. We also shared some tools that can benefit you and help solve your problems.

In a high concurrency world, where more and more users->connections->threads are used, contention is a given. But how do we identify the contention point easily?

In a high concurrency world, where more and more users->connections->threads are used, contention is a given. But how do we identify the contention point easily?

In an earlier post, I discussed the

In an earlier post, I discussed the

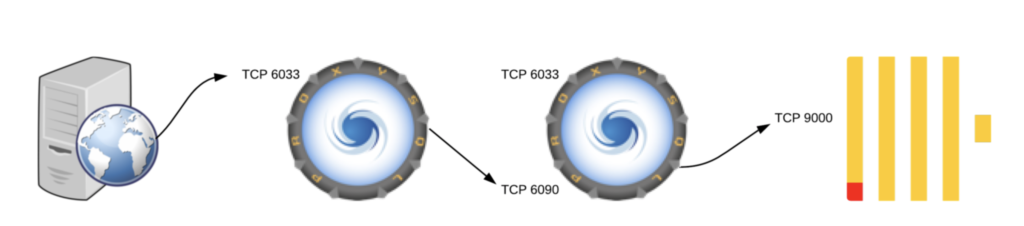

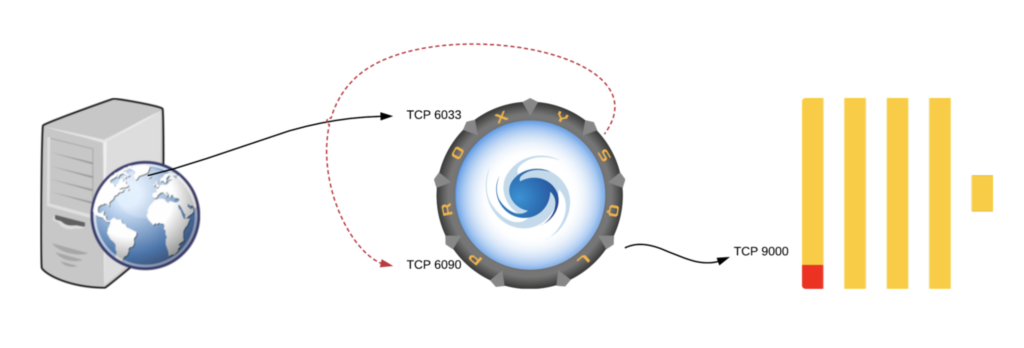

While we’ve had MySQL Group Replication support in ProxySQL since version 1.3 (native as of v1.4), development has continued in subsequent versions. I’d like to describe a scenario of how latency can affect ProxySQL in a MySQL Group Replication environment, and outline a few new features that might help mitigate those issues. Before we dive into the specifics of the discussion, however, let’s take a quick overview of ProxySQL and Group Replication for those who may not be familiar.

While we’ve had MySQL Group Replication support in ProxySQL since version 1.3 (native as of v1.4), development has continued in subsequent versions. I’d like to describe a scenario of how latency can affect ProxySQL in a MySQL Group Replication environment, and outline a few new features that might help mitigate those issues. Before we dive into the specifics of the discussion, however, let’s take a quick overview of ProxySQL and Group Replication for those who may not be familiar.

This blog discusses a few concepts about Docker and how we can use it to run a MySQL async replication environment. Docker is a tool designed to make it easier for developers and sysadmins to create/develop, configure, and run applications with containers. The container allows us to package all parts of the application it needs, such as libraries, dependencies like code, configurations, and runtime engine. Docker runtime containers are platform-independent so the package created can be shipped one platform to another platform.

This blog discusses a few concepts about Docker and how we can use it to run a MySQL async replication environment. Docker is a tool designed to make it easier for developers and sysadmins to create/develop, configure, and run applications with containers. The container allows us to package all parts of the application it needs, such as libraries, dependencies like code, configurations, and runtime engine. Docker runtime containers are platform-independent so the package created can be shipped one platform to another platform.